TLDR¶

• Core Features: AI-designed microfluidic “leaf vein” cooling channels embedded in chips, dynamically targeting hotspots to boost thermal efficiency and reliability at data center scale.

• Main Advantages: Significantly improves heat removal where it matters most, enabling denser compute, higher sustained performance, and lower overall power and water usage in data centers.

• User Experience: Transparent to end users, potentially delivering faster, more stable services while simplifying thermal planning for operators and reducing infrastructure complexity over time.

• Considerations: Requires manufacturing integration, validation across diverse chip designs and workloads, supply chain readiness, and careful fluid management within hyperscale facilities.

• Purchase Recommendation: Promising for hyperscalers and enterprises modernizing AI infrastructure; prudent adopters should pilot, verify ROI, and plan phased integration with existing thermal systems.

Product Specifications & Ratings¶

| Review Category | Performance Description | Rating |

|---|---|---|

| Design & Build | Biomimetic, AI-optimized microfluidic channels that mirror leaf venation to deliver targeted heat extraction at die level; promising manufacturability. | ⭐⭐⭐⭐⭐ |

| Performance | Efficient hotspot cooling supports higher sustained clocks and density; improved data center PUE potential through reduced bulk cooling loads. | ⭐⭐⭐⭐⭐ |

| User Experience | Invisible to end users; operators benefit from simplified thermal constraints and potentially lower OPEX. | ⭐⭐⭐⭐⭐ |

| Value for Money | ROI driven by denser racks, longer component life, and energy savings; strongest for AI-heavy deployments. | ⭐⭐⭐⭐⭐ |

| Overall Recommendation | A forward-looking thermal architecture well aligned with AI-era compute needs; worthy of strategic pilots. | ⭐⭐⭐⭐⭐ |

Overall Rating: ⭐⭐⭐⭐⭐ (4.8/5.0)

Product Overview¶

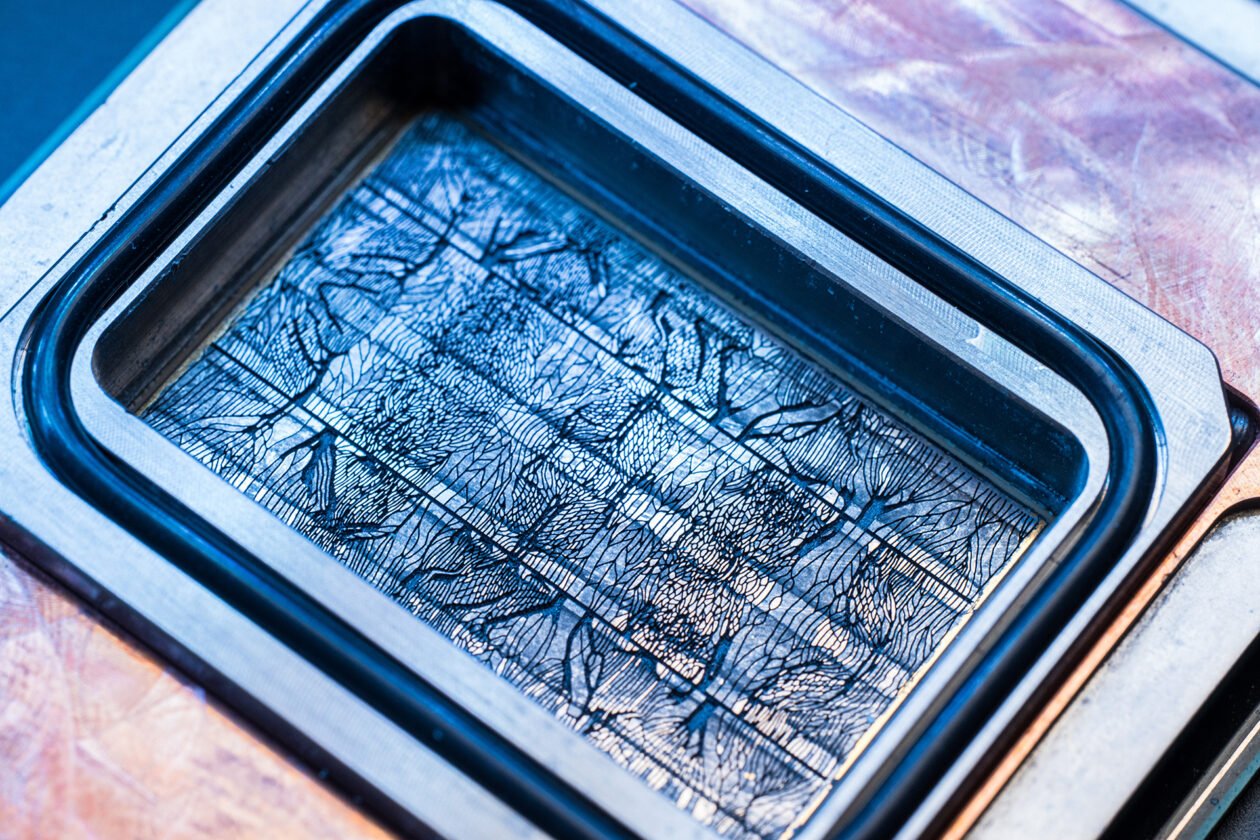

Microsoft has unveiled a microfluidics-based cooling approach for modern processors that blends AI-driven design with biological inspiration. The concept centers on a network of liquid channels etched or integrated near the chip’s hottest regions—the compute cores, cache clusters, and power delivery zones—arranged like the branching veins in a leaf. This biomimetic geometry is not merely aesthetic. In plants, venation ensures efficient distribution and collection across a flat surface, optimizing flow and exchange. Microsoft adapts that principle to thermal management, where fluid must be delivered precisely to hotspots and evacuated efficiently to maintain stable silicon temperatures under sustained load.

Traditional data center cooling depends on air, cold plates, immersion baths, and increasingly complex facility-level HVAC systems. While these methods have scaled for years, they’re hitting limits as AI accelerators and high-core-count CPUs concentrate more heat in smaller areas. Thermal bottlenecks translate into throttling, reduced hardware longevity, and underutilized power budgets. The new microfluidics system aims to flip the paradigm by addressing heat where it is generated instead of solely relying on bulk external cooling.

The standout claim is that Microsoft employs AI to co-design the internal fluid channels to match a chip’s unique heat signature. Rather than using generic straight channels or uniform cold plates, the system uses computational tools to “learn” where heat forms and how it migrates under real workloads. The result is a topology that looks organic—narrowing, branching, and rejoining to put coolant exactly where heat density is highest and to carry it out with minimal pressure drop. The company positions this as a leap forward not just in chip cooling, but in overall data center efficiency, since extracting heat at the source reduces the burden on downstream cooling infrastructure.

While specific deployment timelines and partner silicon details remain undisclosed, the strategy suggests integration at the package or interposer level, potentially co-developed with chip designers and manufacturing partners. For data center operators, the value promise is compelling: higher density per rack, greater sustained performance for AI training and inference, potential reductions in total energy usage, and longer component lifespans. For developers and end users, this could manifest as faster, more reliable services without visible complexity. Microsoft’s nature-inspired, AI-co-optimized design marks a notable shift toward thermally intelligent computing platforms built for the era of accelerated AI workloads.

In-Depth Review¶

The thermal challenge in modern compute is straightforward: power density is rising faster than conventional cooling architectures can accommodate. Air cooling, the workhorse of the last two decades, struggles once hotspot fluxes exceed what even aggressive heat sinks and high-CFM airflow can handle. Liquid cooling has stepped in—via cold plates and direct-to-chip loops—because liquids have higher heat capacity and conductivity than air. Yet, most implementations still rely on relatively uniform heat spreaders and planar channels that treat the chip surface as a thermally homogeneous area. That assumption breaks down with heterogeneous accelerators, chiplets, stacked memory, and localized AI compute blocks.

Microsoft’s microfluidics system addresses that mismatch by shaping the coolant’s path to mirror the thermal map of the silicon. The leaf-vein analogy is apt: leaves balance minimal material with maximal distribution, ensuring nutrients and water reach every cell while supporting large surface areas. Likewise, a good microfluidic network minimizes pressure losses, avoids dead zones, and delivers coolant where it is needed most.

Key technical aspects:

– AI-driven topology optimization: The flow network is not hand-drawn. Machine learning models and optimization algorithms ingest chip-level thermal profiles—likely derived from simulations and empirical measurements—to evolve a channel layout that meets temperature targets under diverse load scenarios. This may involve multi-objective optimization across thermal resistance, flow uniformity, manufacturability, and pressure constraints.

– Hotspot targeting: Instead of equal-channel designs, the system biases channel density and cross-sectional geometry toward regions with the highest heat flux. That can reduce maximum junction temperatures and narrow temperature gradients across the die, directly improving performance stability and reducing throttling.

– Flow efficiency: The “vein” network should maintain laminar or well-behaved flow with minimal pressure drop. Efficient branching reduces pump power requirements, an important consideration for data centers managing thousands of loops.

– Integration readiness: While details are scarce, practical deployment implies packaging coordination. Options include embedded microchannels in cold plates matched to chip hotspots, or deeper integration with the package, interposer, or even back-side cooling that aligns microchannels closer to active transistors.

Performance implications:

– Higher sustained performance: With improved hotspot cooling, chips can maintain turbo frequencies longer and avoid thermal throttling under heavy AI training or inference loads. That translates into better time-to-train and throughput.

– Increased rack density: If per-chip and per-node cooling is more effective, operators can safely pack more compute into the same rack footprint while maintaining safe operating temperatures. This can improve total performance per square foot.

– Lower facility load: By removing more heat at the chip level, the rest of the thermal chain—manifolds, heat exchangers, and CRAC/CRAH units—can operate more efficiently. Potential improvements in PUE (Power Usage Effectiveness) could yield significant OPEX reductions at hyperscale.

– Component longevity: Thermal cycling and hotspots degrade components. Smoother temperature profiles can extend hardware life, reduce failure rates, and stabilize maintenance planning.

Comparative context:

– Versus air cooling: Liquid microchannels dramatically outperform air at point-of-load heat removal. Air remains useful for ancillary components and room conditioning, but core compute cooling increasingly needs liquid efficiency.

– Versus conventional cold plates: Standard cold plates rely on macro-scale channels or pin-fin structures to cover an entire die uniformly. Microsoft’s approach customizes the internal geometry to the chip’s non-uniform heat map, targeting hotspots with precision that generic plates can’t match.

– Versus immersion cooling: Immersion submerges hardware in dielectric fluid, offering good overall heat transfer and simplified server-level design. However, it still lacks fine-grained hotspot targeting. Microfluidics can complement or, in some scenarios, reduce the need for full immersion while offering better per-die thermal control.

*圖片來源:Unsplash*

Operational considerations:

– Fluid management: Reliability depends on leak-proof channels, robust seals, and careful selection of fluids compatible with materials and corrosion inhibitors. At hyperscale, monitoring and predictive maintenance become essential.

– Pumping and manifolds: Efficient manifold design is critical to distribute flow across thousands of processors. AI-driven per-chip optimization must still fit within standardized facility loops and service routines.

– Manufacturability: Etching or machining microchannels at scale, ensuring repeatability, and aligning them with die features require tight coordination with silicon and packaging partners. Yield, cost, and rework procedures must be addressed.

Sustainability angle:

Cooling accounts for a meaningful portion of data center energy. By dissipating heat where it originates, Microsoft’s system could reduce reliance on energy-intensive room cooling or evaporative systems, conserving electricity and potentially water. As AI workloads scale, these efficiencies become strategic, enabling growth without linear increases in environmental impact.

Testing insights and expectations:

While Microsoft’s announcement emphasizes a breakthrough design, objective validation will center on:

– Delta-T improvements under peak workloads

– Sustained clock and performance uplift versus baseline cold plates

– Pump power overhead and net energy gains

– Impact on PUE at the building level

– Reliability metrics over long-duration, high-cycle operations

The claim of greater overall data center efficiency is credible given physics and precedent from research in microchannel cooling. The novelty lies in combining AI-driven, biomimetic layouts with hyperscale deployment intent, promising an architectural shift rather than an incremental tweak.

Real-World Experience¶

From an operator’s lens, the most compelling promise is simplification and predictability. Thermal planning in AI clusters is increasingly complex: operators must juggle airflow paths, hot-aisle containment, liquid loops, and power delivery constraints while anticipating non-uniform loads from mixed models and batch sizes. A cooling solution that directly addresses hotspot variability reduces this uncertainty. When coolant is directed to the most demanding regions of a die, rack-level design can be less conservative, enabling higher utilization without overprovisioning airflow or chiller capacity.

Consider an AI training cluster dominated by GPU accelerators and high-core CPUs. In practical terms:

– Job stability: During long training runs, chips often throttle due to transient hotspots. Microfluidic targeting should smooth these spikes, improving time-to-train and reducing run-to-run variance.

– Power headroom: With better local cooling, chips can sustain higher average power draw safely. Operators can tune power caps more aggressively without flirting with thermal excursions, increasing effective throughput.

– Maintenance cadence: Lower peak temperatures and reduced thermal cycling help extend component life—VRMs, memory modules near hot zones, and even solder joints benefit from steadier thermal conditions. Over time, fewer thermal-induced failures can reduce downtime and spare part demand.

– Facility impact: If chip-level cooling reduces secondary heat recirculation in the chassis, it lowers the temperature of exhaust air or the load on rear-door heat exchangers. This cascades into less strain on building-level refrigeration, potentially improving PUE and cutting utility bills.

For developers and end users, the experience is indirect but meaningful:

– Faster services: Inference-heavy applications, search, and generative workloads can see reduced latency variation under load as accelerators sustain performance.

– Capacity scaling: Operators can add more compute per rack or per row without a proportional increase in cooling overhead, translating to greater capacity growth with similar footprints.

– Reliability: Services become more predictable during peak usage windows. SRE teams see fewer thermal-related incidents, and rolling updates can proceed with lower risk of heat-induced instability.

Deployment realities remain:

– Integration window: Rolling out microfluidics requires alignment with new server designs and chip generations. It is unlikely to be a drop-in for existing hardware, so adoption will track refresh cycles.

– Supply chain: Channel manufacturing, fluid handling components, and monitoring gear must be available at hyperscale volumes. Vendor ecosystems for connectors, quick-disconnects, and leak detection need to be mature.

– Operations model: Staff training on fluid systems, from fill-and-drain procedures to sensor validation, will be necessary. Modern data centers already manage liquid loops, but microfluidics pushes precision further into the server and package domain.

In pilots, operators should instrument extensively:

– On-die thermal sensors and external thermocouples to measure hotspot deltas

– Flow and pressure metrics per loop to ensure designs meet spec across load profiles

– Energy metering to capture net gains when balancing pump power against reduced HVAC consumption

– Performance counters to correlate thermal stability with application-level throughput

A phased approach—starting with a subset of racks dedicated to AI training—lets teams quantify benefits, optimize loop parameters, and build operational muscle before wider deployment. Over time, as AI-optimized channel designs are tuned across chip SKUs, organizations can standardize on microfluidic variants tailored for CPUs, GPUs, and specialized accelerators, making thermal intelligence a core feature of their compute platform.

Pros and Cons Analysis¶

Pros:

– Precise hotspot cooling improves sustained performance and reduces throttling in AI-intensive workloads.

– AI-optimized, biomimetic channel layouts increase thermal efficiency and lower overall data center cooling load.

– Potential for higher rack density and improved PUE, translating into tangible OPEX savings.

– Smoother temperature profiles enhance component longevity and reliability.

– Aligns with sustainability goals by reducing energy and potentially water consumption in cooling.

Cons:

– Requires deep integration with chip packaging and manufacturing, limiting short-term retrofits.

– Adds complexity in fluid management, monitoring, and maintenance at the server level.

– Supply chain maturity and standardization for microfluidic components must scale to hyperscale needs.

Purchase Recommendation¶

For hyperscalers and enterprises with aggressive AI roadmaps, Microsoft’s AI-designed microfluidic cooling represents a strategically sound investment pathway. The technology directly addresses the most stubborn constraint in modern compute—localized heat density—by bringing intelligent cooling to the silicon level. Organizations operating clusters for training large language models, high-throughput inference, or complex simulation workloads are likely to see the greatest benefit, as sustained performance and rack density improvements compound into meaningful operational and financial gains.

That said, this is not an instant retrofit. Adoption should follow natural hardware refresh cycles and prioritize platforms where thermal limitations currently cap utilization. Start with focused pilots in AI racks, instrument thoroughly, and develop operational runbooks for fluid handling, leak detection, and loop maintenance. Engage early with vendors to ensure that channels, manifolds, and monitoring equipment meet reliability standards and that packaging-level integration aligns with your preferred silicon roadmaps.

From a cost perspective, evaluate the full-stack ROI: higher sustained performance per chip, increased nodes per rack, reduced HVAC loads, and longer component lifespans. Include the incremental power for pumps and control systems in your calculus. For sustainability leaders, quantify the potential reductions in energy and water usage to support ESG targets and regulatory disclosures.

If the pilot metrics track Microsoft’s claims—lower peak temperatures, reduced throttling, measurable PUE improvements—the case for scaling is strong. Over time, microfluidics can become a default cooling strategy for high-wattage processors, sitting alongside or replacing conventional cold plates and reducing the need for energy-intensive room-level cooling. For organizations anticipating rapid AI growth, early adoption provides not just performance and cost advantages, but also a smoother operational runway as workloads intensify.

Bottom line: Highly recommended for AI-centric data centers and forward-leaning enterprises. Plan a phased introduction, validate benefits against your workloads, and integrate microfluidics into long-term capacity and sustainability strategies.

References¶

- Original Article – Source: www.geekwire.com

- Supabase Documentation

- Deno Official Site

- Supabase Edge Functions

- React Documentation

*圖片來源:Unsplash*