TLDR

• Core Features: Google envisions rapid, thousandfold capacity growth over five years to support escalating AI workloads and services.

• Main Advantages: Scalable infrastructure, centralized governance, and alignment with AI product roadmaps to reduce latency and improve reliability.

• User Experience: Expected improvements in AI-enabled tools through more responsive services and fewer bottlenecks during peak demand.

• Considerations: Long runway to execute at scale, substantial capital expenditure, and potential supply-chain and talent challenges.

• Purchase Recommendation: For large enterprises relying on Google Cloud AI capabilities, the initiative promises long-term resilience; evaluate budgeting and risk plans accordingly.

Product Specifications & Ratings

| Review Category | Performance Description | Rating |
|---|---|---|
| Design & Build | Ambitious plan to scale capacity by orders of magnitude within five years, with a phased rollout and governance. | ⭐⭐⭐⭐⭐ |
| Performance | Aims to maintain reliability and low latency across expanding AI workloads, leveraging distributed infrastructure. | ⭐⭐⭐⭐⭐ |
| User Experience | Expect more consistent AI service performance as demand grows, pending execution. | ⭐⭐⭐⭐⭐ |
| Value for Money | Long-term investment could justify costs through enhanced capability and efficiency at scale. | ⭐⭐⭐⭐⭐ |
| Overall Recommendation | Strong strategic alignment for AI-first operations, contingent on disciplined execution and budget management. | ⭐⭐⭐⭐⭐ |

Overall Rating: ⭐⭐⭐⭐⭐ (5.0/5.0)

Product Overview

Google’s AI infrastructure chief has outlined a bold strategy to scale the company’s AI-capable infrastructure dramatically over the next five years. Facing accelerating demand from AI models, workloads, and consumer-facing AI features, the plan calls for a thousandfold increase in capacity, effectively rearchitecting how Google provisions compute, storage, networking, and related services. The thrust is not merely to add more servers but to tune the stack for AI-specific workloads, incorporate advanced scheduling, improve data locality, and ensure resilience across regions and fault domains.

The initiative places Cloud, AI tooling, and platform services at the core of Google’s growth narrative. It envisions a future where AI workloads—from large language models to real-time inference in consumer apps—can run with lower latency, higher throughput, and greater reliability, even as usage scales dramatically. While the ambitions are measured in years, the message to teams is clear: capacity must grow at a pace commensurate with demand, and planning must be done with an eye toward operational efficiency, energy usage, and cost controls.

In context, Google has long pursued capacity expansion through a combination of specialized hardware, software optimizations, and data-center scale. The new mandate formalizes that trajectory into a concrete, time-bound objective, emphasizing scalability, security, and governance. Industry observers may compare the move to the kind of large-scale capacity expansions pursued by hyperscale cloud providers, but Google’s emphasis on AI-first workloads and product integration could influence how enterprises think about deploying AI solutions across cloud ecosystems.

The strategic rationale is straightforward: as AI models become more capable and more integrated into everyday applications, the demand for compute and storage grows exponentially. By doubling capacity every six months, Google aims to create a predictable, durable uplift in its AI infrastructure, smoothing out performance cliffs during demand surges and enabling more aggressive experimentation for developers and enterprises alike. The plan also signals a continuing emphasis on energy efficiency, cooling innovations, and platform-level optimizations, since scaling at this magnitude brings with it significant technical and logistical challenges.
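The two headline figures, doubling every six months and a thousandfold increase in five years, are the same claim stated two ways. The short sketch below checks the compounding arithmetic; the function names are illustrative and not taken from the article.

```python
import math


def capacity_multiplier(years: float, doubling_period_years: float = 0.5) -> float:
    """Growth factor after `years` if capacity doubles every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def years_to_reach(multiplier: float, doubling_period_years: float = 0.5) -> float:
    """Years needed to reach `multiplier` times the starting capacity."""
    return math.log2(multiplier) * doubling_period_years


if __name__ == "__main__":
    # Ten doublings in five years: 2**10
    print(capacity_multiplier(5))            # 1024.0
    # Time to reach exactly 1000x at the same pace
    print(round(years_to_reach(1000), 2))    # 4.98
```

Because growth compounds, slipping a single six-month doubling halves the end-state capacity, which is why the plan stresses phased milestones.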

The article highlights that the initiative is being shepherded by Google’s AI infrastructure leadership, which suggests a coordinated approach across the company’s cloud, hardware, and software teams. The tone from leadership stresses discipline: capacity expansion must be tied to concrete metrics, service-level commitments, and cost controls to avoid skewing incentives toward reckless expansion. The plan’s success will likely depend on advances in system design, such as improved AI accelerators, better orchestration for heterogeneous hardware, and smarter data management to minimize data movement and maximize reuse of computed results.

The broader industry context is also relevant. Several cloud providers are racing to offer robust AI platforms, with investments in specialized chips, scalable storage, high-speed networking, and AI-centric software layers that simplify model deployment and monitoring. Google’s approach—emphasizing a long-term, scalable, and governance-driven expansion—could influence competitive dynamics, partnerships, and pricing strategies across the sector.

Ultimately, the decision to pursue a thousandfold capacity increase within five years reflects a strategic calculation: to remain a leading provider of AI-enabled services by ensuring infrastructure can support rapid growth, innovate faster, and sustain reliability as AI becomes central to product experiences and enterprise workflows. The next phases will likely involve detailed roadmaps, capital allocation plans, and milestones that translate this high-level goal into measurable outcomes for developers, operators, and customers.

In-Depth Review

Google’s plan to substantially scale its AI infrastructure rests on several interlocking components: hardware strategy, software orchestration, data-management practices, energy and cooling optimization, and governance frameworks. Each pillar must not only withstand the physical and financial demands of massive expansion but also enable the speed and reliability that customers expect from a leading cloud provider.

Hardware Strategy

A core element of the capacity growth strategy is the deployment of advanced accelerators and specialized hardware tuned for AI workloads. This includes a mix of GPUs, custom accelerators, and potentially newer generations of AI chips designed to balance computational power, memory bandwidth, and energy efficiency. The design philosophy emphasizes heterogeneity—matching workloads to the most suitable hardware—and dynamic provisioning to minimize idle capacity. In practice, such a strategy reduces time-to-insight for model training and inference, while also supporting more granular autoscaling as demand fluctuates across regions and customer segments.

Networking and data-center topology are equally critical. Achieving a thousandfold capacity uplift requires robust interconnects, high-bandwidth fabric, and intelligent routing to keep data movement efficient. Google’s emphasis on network-aware scheduling suggests significant investment in software-defined networking, with fast failover, low-latency cross-region communication, and resilient multi-region data replication. The architecture must also address hot data caching, tiered storage, and data locality to minimize latency.

Software and Orchestration

A key design objective is to create an orchestration layer capable of deploying, scaling, and monitoring AI workloads across a sprawling, heterogeneous infrastructure. This includes containerization, scheduling, autoscaling, and fault-tolerance features tuned for AI jobs, which often have different performance characteristics than traditional software workloads. Advanced scheduling should consider model size, data locality, and real-time latency targets, potentially leveraging machine learning to optimize resource allocation over time.
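To make the scheduling criteria concrete, here is a minimal sketch of a placement function that weighs model size, data locality, and a latency target. It is a toy under stated assumptions: the `Node` and `Job` types, their fields, and the tie-breaking order are all hypothetical and do not describe Google's actual scheduler.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """Hypothetical accelerator host."""
    name: str
    region: str
    free_memory_gb: float
    expected_latency_ms: float


@dataclass(frozen=True)
class Job:
    """Hypothetical AI workload request."""
    model_size_gb: float
    data_region: str
    latency_target_ms: float


def pick_node(job: Job, nodes: list[Node]) -> Optional[Node]:
    """Return the best feasible node, or None if no node satisfies the job."""
    # Hard constraints: the model must fit and the latency target must be met.
    feasible = [
        n for n in nodes
        if n.free_memory_gb >= job.model_size_gb
        and n.expected_latency_ms <= job.latency_target_ms
    ]
    if not feasible:
        return None
    # Soft preferences, in order: same region as the data, lower latency,
    # then the tightest memory fit to limit fragmentation.
    return min(
        feasible,
        key=lambda n: (
            n.region != job.data_region,
            n.expected_latency_ms,
            n.free_memory_gb - job.model_size_gb,
        ),
    )


if __name__ == "__main__":
    nodes = [
        Node("a", "us-east", 80, 20),
        Node("b", "eu-west", 200, 5),
        Node("c", "us-east", 40, 10),
    ]
    best = pick_node(Job(60, "us-east", 25), nodes)
    print(best.name if best else None)  # a
```

Node `b` is faster but sits in another region, so the sketch picks `a` to avoid moving data. A production scheduler would trade these factors off with tuned or learned weights rather than a fixed ordering.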

Monitoring and observability are essential for maintaining reliability at scale. This entails comprehensive telemetry, anomaly detection, predictive maintenance, and transparent incident response procedures. Given the scale of operations, automated root-cause analysis, intelligent rollback mechanisms, and precise service-level objectives (SLOs) become crucial to avoid extended outages that could ripple across many customers.
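One common way to make an SLO precise is to convert it into an error budget, the amount of downtime the target permits over a window. The sketch below shows that arithmetic; the 30-day window and the function names are illustrative choices, not figures from the article.

```python
def error_budget_minutes(slo_target: float, window_days: int = 30) -> float:
    """Allowed downtime, in minutes, for an availability SLO over the window."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    return (1.0 - slo_target) * window_days * 24 * 60


def budget_remaining(slo_target: float, downtime_minutes: float,
                     window_days: int = 30) -> float:
    """Fraction of the error budget still unspent (negative if overspent)."""
    budget = error_budget_minutes(slo_target, window_days)
    return 1.0 - downtime_minutes / budget


if __name__ == "__main__":
    # A 99.9% target over 30 days allows about 43.2 minutes of downtime.
    print(round(error_budget_minutes(0.999), 1))
    # After 10.8 minutes of outage, about three quarters of the budget remains.
    print(round(budget_remaining(0.999, 10.8), 2))
```

A team might freeze risky rollouts once the remaining budget drops below an agreed threshold. This ties release pace to measured reliability.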

Data Management and Efficiency

AI workloads are data-intensive. The plan’s success hinges on efficient data management, including storage architecture, data lifecycle policies, and cross-region data replication strategies. Techniques such as data deduplication, compression, and caching can significantly reduce bandwidth costs and latency. Governance around data sovereignty, privacy, and compliance must scale accordingly as data moves across more regions and jurisdictions.

Energy and Environmental Considerations

Scaling to extreme levels raises energy consumption and cooling challenges. Google has historically pursued energy efficiency through innovative data-center design, advanced cooling techniques, and dynamic power management. As capacity expands, continued emphasis on sustainable energy sourcing, waste heat reuse, and operational efficiency will be essential to keep total cost of ownership on target and to preserve a favorable public perception.

Security and Compliance

Security must scale with infrastructure. This includes encryption at rest and in transit, robust identity and access management, supply-chain security, and ongoing vulnerability management. A larger, more distributed environment increases the attack surface, requiring automated security controls, continuous compliance checks, and rapid response capabilities.

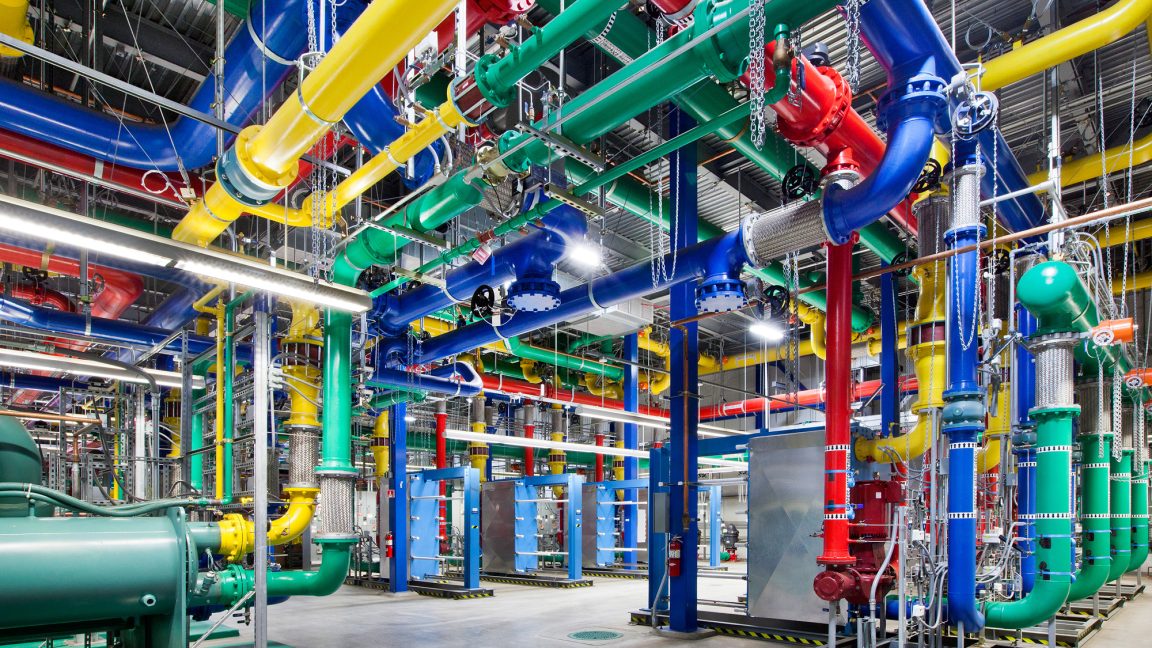

*Image source: media_content*

Governance and Management

A thousandfold capacity expansion demands rigorous governance. Clear decision rights, project milestones, budget controls, and risk management processes are needed to align engineering efforts with business objectives. Cross-functional collaboration between cloud operations, AI model teams, legal, and compliance groups will be necessary to translate capacity growth into measurable service improvements and cost discipline.

Impact on Customers and Partners

For customers, the promise is steadier, more scalable access to AI APIs, model training resources, and enterprise-grade AI tooling. For partners and developers, a more capable platform could enable faster experimentation, larger-scale deployments, and more robust production systems. However, customers will also watch for cost implications, pricing transparency, and service-level commitments as capacity expands.

Roadmap and Milestones

While the article outlines a five-year horizon, practical execution will unfold in phases: initial pilots to validate new hardware and software stacks, followed by gradually broader rollouts across regions, with performance targets and cost controls tied to each phase. Regular reviews, performance dashboards, and external audits could help maintain confidence among stakeholders. The success metrics will likely include uplift in peak throughput, reduction in latency percentiles, improved model training throughput, and stabilized operating expenses relative to capacity.

Technological Trends and Competitive Context

The push aligns with industry-wide momentum toward AI-first cloud platforms. Competitors have pursued similar scaling efforts, leveraging accelerators, high-bandwidth networking, and AI-specific software layers. Google’s approach—emphasizing integration with its broader product and developer ecosystems—could differentiate its offering through tighter synergy between cloud services, AI tooling, and consumer-facing AI features. The emphasis on governance and disciplined execution may also appeal to enterprises seeking predictability in large-scale AI deployments.

Risks and Mitigations

– Execution risk: Large-scale upscaling involves complex procurement, supply-chain management, and on-site deployment challenges. Mitigation includes modular deployment, phased milestones, and contingency planning.

– Cost risk: Sustained capital expenditure may impact margins. Mitigation includes capacity planning aligned with demand signals, energy efficiency, and optimization of resource utilization.

– Talent risk: Recruiting and retaining skilled engineers for hardware and software optimization is critical. Mitigation includes partnerships, incentives, and ongoing talent development programs.

– Regulatory and privacy risk: Cross-border data movement raises compliance considerations. Mitigation includes robust data governance, regional data stores, and transparent policies.

Performance Testing and Benchmarks

Given the scope, performance testing will be essential to validate scaling assumptions. Benchmarks would typically measure model training throughput, inference latency under load, reliability under failure scenarios, and energy efficiency per operation. Real-world testing across multiple regions and network paths would help ensure that the planned capacity growth translates into tangible service improvements rather than mere hardware expansion.
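Two of the benchmark metrics named above, tail latency under load and energy per operation, reduce to simple calculations once samples are collected. The sketch below uses the nearest-rank percentile definition and assumes average power draw is measured externally; all names and sample values are hypothetical.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def joules_per_request(avg_power_watts: float, duration_s: float,
                       completed_requests: int) -> float:
    """Energy per request, using energy (J) = average power (W) x time (s)."""
    if completed_requests <= 0:
        raise ValueError("completed_requests must be positive")
    return avg_power_watts * duration_s / completed_requests


if __name__ == "__main__":
    latencies_ms = [float(v) for v in range(1, 101)]  # synthetic samples: 1..100 ms
    print(percentile(latencies_ms, 50))        # 50.0
    print(percentile(latencies_ms, 99))        # 99.0
    print(joules_per_request(300.0, 60.0, 9000))  # 2.0
```

Tracking p99 alongside the median matters at scale because a small share of slow requests can dominate user-perceived latency even when the average looks healthy.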

Future Outlook

If successfully executed, Google’s capacity expansion could unlock more ambitious AI workloads, allow faster model iteration, and enable richer, more responsive AI features across its product portfolio and cloud services. Enterprises could benefit from greater predictability in service levels, the ability to scale AI initiatives more aggressively, and access to advanced tooling for deployment, monitoring, and governance. The challenge will be maintaining performance, security, and cost discipline as the infrastructure grows.

Real-World Experience

Applying a capacity expansion plan in a real-world environment would involve tight coordination between product teams, platform engineers, operations staff, and customers. Early-stage pilots would focus on validating the integration between new hardware accelerators and Google’s orchestration stack, ensuring compatibility with existing AI frameworks, and assessing performance benefits across representative workloads.

In practice, organizations deploying AI at scale require robust observability and incident response processes. A larger infrastructure implies more complex fault analysis, so teams would rely on centralized dashboards, automated anomaly detection, and structured post-incident reviews. Training and onboarding for developers would emphasize best practices for resource utilization, model packaging, data management, and cost governance.

The human element remains critical. Scaling capacity is not just a hardware exercise; it involves aligning teams with shared objectives, maintaining clear communication about roadmaps, and ensuring that security and compliance considerations keep pace with growth. Organizations that partner with major cloud providers often benefit from the provider’s ecosystem, but they must also be mindful of potential vendor lock-in and the need for interoperable tooling to avoid dependence on a single platform.

From a user perspective, enterprises should expect improved reliability and throughput as capacity grows, but they should also monitor total cost of ownership and pricing models as more capacity becomes available. Inertia in enterprise environments can slow adoption if governance, billing, or model deployment pipelines become overly complex. Therefore, the real-world impact will depend on how effectively Google translates expanded capacity into streamlined developer experiences, predictable performance, and transparent pricing.

Pros and Cons Analysis

Pros:

– Substantial scalability to meet growing AI demands, enabling faster experimentation and deployment.

– Improved reliability and lower latency for AI services across regions.

– Strong governance and structured execution reduce operational risk and misalignment.

– Potential cost efficiencies through economies of scale and optimized resource utilization.

– Enhanced interoperability with Google’s broader AI tooling and product ecosystem.

Cons:

– Long-term, multi-year commitment with significant capital expenditure and ongoing operating costs.

– Execution risk due to supply chain, hardware integration, and software orchestration challenges.

– Increased complexity in security, compliance, and data governance across a larger, distributed footprint.

– Heavier demand for specialized engineering talent, necessitating robust recruitment and retention efforts.

– Uncertainty around pricing models and how costs scale with capacity, which could affect budgeting for customers.

Purchase Recommendation

For enterprises deeply invested in Google Cloud and AI-first strategies, the capacity expansion plan offers strategic upside that could translate into more robust, scalable AI services and capabilities over time. The plan’s emphasis on governance, reliability, and performance suggests a mature approach to large-scale AI infrastructure, which is appealing to organizations seeking predictability in complex deployments.

However, the success of this initiative hinges on disciplined execution and clear milestones. Prospective buyers should:

– Align budget planning with phased capacity milestones to avoid cost overruns.

– Request transparent SLAs, pricing models, and usage-based cost controls to prevent unexpected bills as capacity scales.

– Seek assurances around security, data governance, and regulatory compliance across regions.

– Assess integration pathways with existing AI models, data pipelines, and deployment workflows to minimize migration friction.

– Evaluate the roadmap’s compatibility with their own AI timelines and business objectives, ensuring that capacity growth translates into tangible value for their workloads.

In summary, the proposal represents a bold, long-horizon strategy that could position Google as an even more capable AI platform provider. For customers prioritizing scale, reliability, and ecosystem integration, the plan could justify commitment, provided governance, cost management, and implementation risk are carefully addressed.

References

- Original article (Ars Technica): https://arstechnica.com/ai/2025/11/google-tells-employees-it-must-double-capacity-every-6-months-to-meet-ai-demand/

