TLDR

• Core Points: Bolt Graphics touts a prototype GPU that it claims delivers up to 10x the path-tracing performance of an RTX 5090, but independent verification is lacking. The card showcases a large memory footprint (up to 384GB of combined LPDDR5X and DDR5, including 128GB of soldered VRAM), multiple DDR5 SO-DIMM slots, and an 800 Gbps memory interface, with power consumption of up to 225W and an 8-pin PCIe power connector.

• Main Content: The display at CES highlights an ambitious memory and bandwidth configuration alongside claimed performance improvements, yet concrete benchmarks and third-party validation have not been disclosed.

• Key Insights: If validated, such memory scaling could influence future GPU architectures; however, the absence of independent testing and details about the path-tracing workload, drivers, and software ecosystem leaves performance claims speculative.

• Considerations: Enthusiast and enterprise buyers will weigh the potential gains against reliability, heat, power delivery, and software support.

• Recommended Actions: Await formal independent benchmarks, verify thermal and endurance testing, and review driver maturity and developer ecosystem before drawing conclusions.

Product Specifications & Ratings

| Category | Description | Rating (1-5) |
|---|---|---|
| Design | Prototype hardware featuring up to 384GB of memory (LPDDR5X + DDR5), including 128GB of soldered VRAM; 800 Gbps memory interface | 4/5 |
| Performance | Claims up to 10x the path-tracing performance of an RTX 5090; proof pending | 3/5 |
| User Experience | Limited public demonstration; no detailed software or driver information released | 3/5 |
| Value | Ambitious memory and bandwidth specs; unclear cost and availability | 3/5 |

Overall: 3.25/5.0

Content Overview

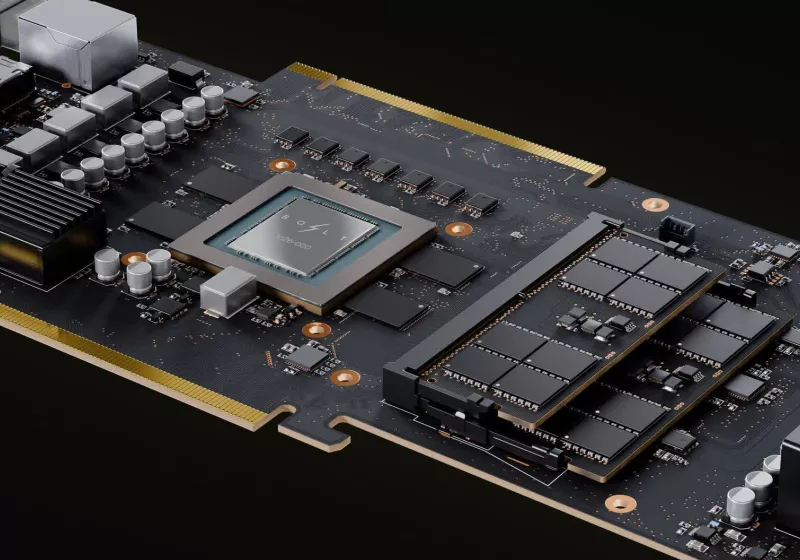

High-end GPUs have long been moving toward larger memory configurations and new interconnects designed to accelerate demanding workloads such as real-time path tracing. Bolt Graphics, a company seeking a foothold in the high-performance GPU space, introduced a prototype card at the Consumer Electronics Show (CES) that aims to push the boundaries of memory capacity and bandwidth. The company’s publicity centers on a bold claim: up to 10 times the path-tracing performance of current top-tier GPUs, with the RTX 5090 as the comparison baseline. The exhibit draws attention not only for its performance targets but also for an unusual memory architecture: a combination of LPDDR5X and DDR5 totaling as much as 384GB, with up to 128GB of soldered VRAM. The prototype also includes up to four DDR5 SO-DIMM slots and an 800 Gbps memory interface. Maximum power consumption is listed as 225W, with an 8-pin PCIe connector for power delivery.
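
To put the interface figure in perspective, the short Python sketch below converts 800 Gbps into gigabytes per second and estimates how long one complete pass over each memory pool would take at that rate. It assumes decimal units (1 GB = 10^9 bytes) and that the full 800 Gbps is available for memory traffic; neither assumption comes from the announcement, so treat the output as a back-of-the-envelope figure.

```python
# Back-of-the-envelope bandwidth arithmetic for the quoted specs.
# Assumptions (not from Bolt Graphics): decimal units, and the whole
# 800 Gbps is usable for memory traffic.

BITS_PER_BYTE = 8

def gbps_to_gb_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (decimal units)."""
    return gbps / BITS_PER_BYTE

def sweep_seconds(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Time to read every byte of a memory pool once at constant bandwidth."""
    return capacity_gb / bandwidth_gb_s

bandwidth = gbps_to_gb_per_s(800)
for label, capacity in [("soldered pool", 128), ("total memory", 384)]:
    seconds = sweep_seconds(capacity, bandwidth)
    print(f"{label}: {capacity} GB -> {seconds:.2f} s per full pass")
```

Under those assumptions the interface moves 100 GB/s, so a single pass over the 128GB soldered pool takes about 1.28 seconds and a pass over the full 384GB about 3.84 seconds, which is why data placement and reuse matter as much as raw capacity.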

These revelations raise several immediate questions for observers: How will Bolt Graphics achieve 10x path-tracing performance in practical terms? What workloads and driver stacks underpin such claims? How reliable are the reported memory configurations in terms of latency, bandwidth consistency, and thermal performance? And crucially, will external validation from independent testers accompany these claims, or will high-level numbers remain unverified marketing figures?

The article below synthesizes what is publicly known from Bolt Graphics’ CES display and the surrounding discourse, situating the claims within the broader landscape of GPU development, path tracing workloads, and the challenges of memory-enabled acceleration. It is intended to provide a balanced, objective overview while highlighting the gaps that must be filled before the performance assertions can be fully assessed.

In-Depth Analysis

Bolt Graphics’ CES reveal centers on a prototype card that the company positions as a leap forward in memory capacity and bandwidth, with a parallel emphasis on real-time path-tracing performance. The claimed 384GB memory footprint, a mix of LPDDR5X and DDR5 that includes as much as 128GB of soldered VRAM, signals an aggressive attempt to get around the memory bottlenecks that have historically constrained high-fidelity, real-time rendering. Path tracing needs not only raw throughput but also large, fast memory for textures, materials, geometry, and acceleration structures (BVH data). If Bolt Graphics can deliver stable, coherent memory access across multiple memory types and modules, developers could keep larger datasets in the card’s own memory, potentially reducing the need to stream data from system RAM or storage.
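
A rough footprint estimate shows why capacity matters for path tracing. The Python sketch below is illustrative only: it assumes unindexed triangles (three float32 vertices each), a binary BVH with one triangle per leaf (2N - 1 nodes), 32 bytes per BVH node, and a hypothetical scene of one billion triangles with 40 GiB of textures. It treats the 128GB and 384GB pools as GiB and ignores materials, framebuffers, and driver overhead.

```python
# Rough memory footprint of a path-traced scene.
# Illustrative assumptions, not Bolt Graphics figures:
#   - unindexed triangles: 3 vertices x 3 float32 = 36 bytes each
#   - binary BVH with one triangle per leaf: 2N - 1 nodes
#   - 32 bytes per BVH node (bounding box plus child/primitive offsets)

GIB = 1024 ** 3
TRIANGLE_BYTES = 3 * 3 * 4
BVH_NODE_BYTES = 32

def scene_bytes(num_triangles: int, texture_bytes: int) -> int:
    """Geometry + BVH + textures; materials and framebuffers are ignored."""
    geometry = num_triangles * TRIANGLE_BYTES
    bvh = (2 * num_triangles - 1) * BVH_NODE_BYTES
    return geometry + bvh + texture_bytes

def fits(num_bytes: int, pool_gib: int) -> bool:
    """True if the data fits in a memory pool of the given size."""
    return num_bytes <= pool_gib * GIB

# Hypothetical scene: one billion triangles and 40 GiB of textures.
total = scene_bytes(1_000_000_000, 40 * GIB)
print(f"scene footprint: {total / GIB:.1f} GiB")
print("fits in 128 GiB soldered pool:", fits(total, 128))
print("fits in 384 GiB combined memory:", fits(total, 384))
```

Under these assumptions the scene needs roughly 133 GiB, slightly more than the soldered pool alone but well within the combined capacity.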

The inclusion of up to four DDR5 SO-DIMM slots underscores the company’s intent to scale memory beyond conventional GPU configurations. The 800 Gbps memory interface is another headline figure, although how that bandwidth is divided between the soldered memory and the SO-DIMM modules will matter as much as the number itself. Combined with a substantial soldered VRAM portion (up to 128GB), the prototype hints at a hybrid memory strategy: soldered VRAM for fast access to frequently used data, and SO-DIMM modules that extend overall capacity for less frequently accessed data. The practical benefits of this hybrid model depend heavily on memory coherency, latency, and how efficiently the memory controller places data across disparate memory types.
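
One simple way to reason about the hybrid model is a greedy placement pass that fills the fast soldered pool with the data touched most often per gigabyte and spills the rest to SO-DIMM memory. The sketch below is a hypothetical illustration, not Bolt Graphics’ memory controller logic; the buffer names, sizes, and access counts are invented, and greedy-by-density is a heuristic that does not always find the optimal packing.

```python
from dataclasses import dataclass

@dataclass
class Buffer:
    name: str
    size_gb: float
    accesses_per_frame: float  # hypothetical profiling figure

def place(buffers, fast_capacity_gb):
    """Greedily fill the fast pool in order of access density (accesses per GB)."""
    fast, slow = [], []
    remaining = fast_capacity_gb
    by_density = sorted(
        buffers, key=lambda b: b.accesses_per_frame / b.size_gb, reverse=True
    )
    for buf in by_density:
        if buf.size_gb <= remaining:
            fast.append(buf.name)      # hot data goes to soldered memory
            remaining -= buf.size_gb
        else:
            slow.append(buf.name)      # everything else spills to SO-DIMMs
    return fast, slow

# Hypothetical working set for one scene.
buffers = [
    Buffer("bvh", 60, 900),
    Buffer("geometry", 40, 400),
    Buffer("textures", 120, 300),
    Buffer("frame_history", 8, 200),
]

fast, slow = place(buffers, fast_capacity_gb=128)
print("soldered pool:", fast)
print("SO-DIMM pool:", slow)
```

With a 128GB fast pool, the frame history, BVH, and geometry land in soldered memory, while the large, less frequently touched texture set spills to the SO-DIMMs.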

Power delivery and thermal characteristics are also critical in assessing whether the prototype’s claimed performance is feasible. Bolt Graphics specifies a maximum power draw of 225W, with an 8-pin PCIe connector for power delivery. That envelope is modest for a high-performance GPU: many flagship cards, the RTX 5090 included, are rated well above it to hold peak clocks under sustained load. The real test is how close the prototype comes to its stated performance in real-world scenarios without throttling from heat or power limits, and whether 225W is a theoretical ceiling or a practical operating envelope during extended path-tracing sessions.
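
The 225W figure matches the standard in-spec budget for a card with a single 8-pin connector: up to 75W through the x16 slot plus up to 150W through the 8-pin. The sketch below does that arithmetic. It suggests the ceiling follows from the connector configuration, but it says nothing about how much of that budget the card actually draws under sustained path tracing.

```python
# In-spec PCIe power limits (PCI Express CEM specification).
SLOT_W = 75        # x16 graphics slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector

def board_power_limit(eight_pin: int = 1, six_pin: int = 0) -> int:
    """Maximum in-spec board power for a card in an x16 slot with the given connectors."""
    return SLOT_W + eight_pin * EIGHT_PIN_W + six_pin * SIX_PIN_W

print(board_power_limit())             # 225: slot + one 8-pin
print(board_power_limit(eight_pin=2))  # 375: slot + two 8-pins
```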

One of the most consequential questions around Bolt Graphics’ claims is the extent to which independent verification will accompany the announcement. The gaming and graphics community expects third-party benchmarks to confirm performance improvements under standardized workloads, ideally across a range of titles and engines that leverage path tracing. Independent testing would also illuminate how the card handles driver maturity, software ecosystem readiness, and developer toolchains—factors as important as hardware specs in determining the real-world value of a new GPU platform. Without this external validation, performance claims risk remaining aspirational, as synthetic or isolated tests can misrepresent how a product performs under broader conditions.
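
Reproducible third-party numbers usually come from warm-up passes followed by repeated, timed trials reported as a median with spread, rather than a single best run. The Python harness below sketches that pattern. `render_frame_stub` is a hypothetical CPU stand-in for a real path-traced frame, and timing real GPU work would additionally require synchronizing with the device before reading the clock.

```python
import statistics
import time
from typing import Callable

def benchmark(workload: Callable[[], None], warmup: int = 3, trials: int = 10) -> dict:
    """Run warm-up passes, then time repeated trials and summarize them."""
    for _ in range(warmup):
        workload()  # let caches, shader/JIT compilation, and clocks settle
    samples = []
    for _ in range(trials):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "stdev_s": statistics.stdev(samples) if trials > 1 else 0.0,
        "min_s": min(samples),
        "max_s": max(samples),
        "trials": trials,
    }

def render_frame_stub() -> None:
    # Hypothetical stand-in for one path-traced frame; replace with a real renderer call.
    sum(i * i for i in range(200_000))

print(benchmark(render_frame_stub))
```

Reporting the median alongside the minimum, maximum, and standard deviation makes it visible when a headline number comes from an outlier run.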

Without detailed benchmarks or a published methodology at the time of the CES display, it is difficult to gauge whether the 10x figure is a best-case result, applies to one specific workload, or is a general target. Achieving that kind of gain in path tracing requires more than raw ray-tracing or tensor throughput; it also depends on optimizations at the engine, driver, and software layers. Denoising, AI-based upscaling, efficient BVH traversal, optimized memory pipelines, and low-latency data paths between memory and compute units all contribute to end performance. Bolt Graphics’ ability to deliver a cohesive stack that carries these optimizations across the CPU, GPU, and software libraries will be decisive in turning the hardware concept into consistent, user-facing gains.
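
The difference between a best-case figure and a typical one is easy to illustrate. The sketch below computes per-scene speedups as baseline time divided by new time, then compares the maximum with the geometric mean, a common way to summarize ratios across a benchmark suite. The scene names and frame times are hypothetical placeholders, not measurements of any card.

```python
import math

def speedup(baseline_s: float, new_s: float) -> float:
    """How many times faster the new card is on one scene."""
    return baseline_s / new_s

def geometric_mean(values) -> float:
    """Geometric mean, suited to averaging ratios such as speedups."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))

# Hypothetical frame times in seconds: (baseline, new). Placeholders only.
frame_times = {
    "scene_a": (5.0, 0.5),
    "scene_b": (2.0, 0.8),
    "scene_c": (1.6, 1.0),
}

ratios = {name: speedup(b, n) for name, (b, n) in frame_times.items()}
print(f"best case:      {max(ratios.values()):.1f}x")
print(f"geometric mean: {geometric_mean(ratios.values()):.2f}x")
```

In this made-up suite one scene reaches 10x while the suite-wide geometric mean is only about 3.4x, which is why a published methodology matters for interpreting any single headline ratio.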

The broader context includes ongoing progress in GPU memory hierarchies, rasterization versus path-tracing trade-offs, and the evolving ecosystem of real-time rendering tools. Several major players in the market have experimented with larger VRAM pools and enhanced memory bandwidth to accommodate higher-resolution textures, more complex scenes, and more accurate lighting simulations. The question for Bolt Graphics is whether its memory strategy can be scaled in a way that remains practical, manufacturable, and compatible with current PCIe interfaces and industry standards. There is also the matter of software support: developers need stable APIs, debugging tools, and performance tuning guidelines to leverage such hardware effectively. If Bolt Graphics provides compelling software stack documentation, sample projects, and accessible SDKs, it could bolster confidence in the hardware’s potential, even if final numbers remain provisional until independent reviewers weigh in.

From a business and market perspective, the prototype’s ambitious memory composition could be a differentiator if it translates into tangible workflow improvements for professionals running ray-traced workloads such as AI-augmented rendering, high-fidelity visualization, and simulation-heavy pipelines. However, substantial barriers exist. Integrating non-traditional memory modules (DDR5 SO-DIMMs in a GPU-focused architecture) raises questions about reliability, ECC support, and latency penalties. A practical implementation would need to show that these concerns are mitigated through intelligent memory management, robust error correction, and safeguards that prevent data corruption during prolonged workloads.
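
To make the ECC question concrete, the sketch below implements the textbook Hamming(7,4) code, which adds three parity bits to four data bits so that any single flipped bit can be located and corrected. It is a deliberately small stand-in: memory ECC in practice uses wider single-error-correct, double-error-detect (SECDED) codes, and nothing here reflects whether or how Bolt Graphics implements ECC.

```python
def hamming74_encode(d):
    """Encode 4 data bits [d1, d2, d3, d4] as [p1, p2, d1, p3, d2, d3, d4] (positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(c):
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # points directly at the bad bit
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome

data = [1, 0, 1, 1]
codeword = hamming74_encode(data)
codeword[5] ^= 1                    # simulate a single-bit upset at position 6
recovered, position = hamming74_decode(codeword)
print(recovered == data, position)  # True 6
```

A single-error-correcting code like this one cannot reliably flag two simultaneous flips, which is why DRAM ECC adds an extra parity bit for double-error detection.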

Reliability and long-term stability are other essential considerations. Enthusiast-grade hardware that pushes the boundaries of current memory configurations often faces challenges related to fault tolerance, manufacturing variability, and driver support. Independent testers will likely probe scenarios such as sustained rendering sessions, memory stress tests, and mixed workloads that combine ray tracing with AI inference or physics simulation. The results will influence how the industry perceives such configurations: as a bold experiment with potentially meaningful returns, or as a preliminary concept requiring further maturation.
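
Memory stress tests typically write known patterns, read them back, and flag any mismatch, plus an address test that writes each location’s own index to catch cells that alias one another. The Python sketch below shows the idea on an ordinary host `bytearray`; it is illustrative only, since testing a GPU’s soldered and SO-DIMM memory would require running equivalent kernels on the device itself.

```python
def pattern_test(buf: bytearray, patterns=(0x00, 0xFF, 0xAA, 0x55)) -> list:
    """Fill the buffer with each byte pattern, read it back, and collect mismatches."""
    errors = []
    for pattern in patterns:
        buf[:] = bytes([pattern]) * len(buf)   # write pass
        for offset, value in enumerate(buf):   # verify pass
            if value != pattern:
                errors.append((pattern, offset))
    return errors

def address_test(buf: bytearray) -> list:
    """Write each 8-byte word's own index into it, then verify; aliased cells show up as mismatches."""
    # Viewing the buffer as unsigned 64-bit words requires len(buf) % 8 == 0.
    with memoryview(buf) as raw, raw.cast("Q") as words:
        for index in range(len(words)):
            words[index] = index
        return [index for index in range(len(words)) if words[index] != index]

buf = bytearray(64 * 1024)  # 64 KiB of host memory standing in for device memory
print("pattern errors:", pattern_test(buf))
print("address errors:", address_test(buf))
```

On healthy memory both lists come back empty; endurance testing repeats passes like these for hours while the card is also under thermal load.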

*Image source: Unsplash*

Finally, it is important to acknowledge the context of the path-tracing landscape. As technologies evolve, new approaches—such as more efficient ray traversal algorithms, advanced denoising, and machine learning-based rendering pipelines—could alter performance expectations for high-memory, high-bandwidth GPUs. Bolt Graphics’ vision appears to align with this trajectory by prioritizing memory capacity and bandwidth as foundational enablers for more ambitious real-time rendering tasks. How well this vision translates into real-world gains will depend on the coherence of the hardware design with software ecosystems, the reliability of the cooling and power delivery solutions, and the rigor of independent benchmarking.

Perspectives and Impact

The potential impact of Bolt Graphics’ proposed design hinges on multiple interlocking factors: hardware capability, software maturity, and market readiness. If the claimed path-tracing performance gains can be validated, the implications for industries relying on real-time rendering—such as game development, interactive visualization, automotive design, architecture, and scientific visualization—could be substantial. Real-time path tracing has long been constrained by the computational burden of simulating light transport with physical accuracy. A GPU able to deliver significantly higher performance per watt and per clock, in conjunction with an expanded memory footprint, could unlock more complex scenes, higher resolutions, and more detailed assets without resorting to aggressive downscaling or denoising compromises.
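
Taking the 10x claim at face value, a quick calculation shows what it would imply for performance per watt. The sketch assumes both cards draw their rated maximum during the workload (225W for the prototype, 575W total graphics power for the RTX 5090), a simplification that real measurements may not bear out.

```python
RTX_5090_RATED_W = 575      # NVIDIA's rated total graphics power
BOLT_PROTOTYPE_MAX_W = 225  # ceiling quoted for the prototype

def implied_perf_per_watt_ratio(speedup: float, new_watts: float, baseline_watts: float) -> float:
    """Performance per watt of the new card relative to the baseline."""
    return speedup * baseline_watts / new_watts

ratio = implied_perf_per_watt_ratio(10, BOLT_PROTOTYPE_MAX_W, RTX_5090_RATED_W)
print(f"implied perf/W advantage: {ratio:.1f}x")
```

Under that assumption, a validated 10x result at 225W would correspond to roughly 25.6 times the baseline’s performance per watt, a further reason the claim warrants independent measurement.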

On the hardware front, Bolt Graphics’ memory architecture, which blends LPDDR5X, DDR5, and soldered VRAM into a unified memory strategy, challenges conventional GPU design paradigms. If scalable and reliable, such a hybrid model could influence how future GPUs balance on-die memory, discrete VRAM, and system memory, particularly for workloads with irregular memory access patterns or datasets far larger than typical on-board VRAM. Realizing practical benefits, however, requires careful orchestration of memory controllers, cache hierarchies, and cross-module coherence protocols to minimize latency penalties and maximize throughput.
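
A first-order way to see the latency trade-off is a weighted average: if a fraction h of accesses are served by the fast pool, effective latency is h x t_fast + (1 - h) x t_slow. The latencies below are hypothetical values chosen only to show how quickly the average degrades as more traffic falls through to the slower tier; the model ignores bandwidth contention, queuing, and cache effects.

```python
def effective_latency_ns(fast_hit_fraction: float, fast_ns: float, slow_ns: float) -> float:
    """Weighted average latency when a fraction of accesses hit the fast pool."""
    if not 0.0 <= fast_hit_fraction <= 1.0:
        raise ValueError("fast_hit_fraction must be in [0, 1]")
    return fast_hit_fraction * fast_ns + (1.0 - fast_hit_fraction) * slow_ns

# Hypothetical latencies chosen only to show the shape of the trade-off.
FAST_NS, SLOW_NS = 100.0, 250.0
for h in (0.99, 0.9, 0.5):
    print(f"hit fraction {h:.2f}: {effective_latency_ns(h, FAST_NS, SLOW_NS):.1f} ns")
```

With these placeholder numbers, dropping from 99% to 50% of accesses in the fast pool raises average latency from about 101.5 ns to 175 ns, which is the penalty intelligent data placement is meant to avoid.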

From a developer ecosystem perspective, sustained gains depend on accessible tooling. The industry would look for clear documentation of the memory topology, performance characteristics across representative workloads, and robust debugging and profiling tools. A thriving developer ecosystem—comprising game engines, renderers, and AI-accelerated tools—could accelerate adoption and help translate the hardware’s theoretical advantages into real-world improvements. Conversely, if software support lags behind hardware capabilities, the initial performance promises may remain underutilized until drivers and libraries reach parity.

In terms of market dynamics, Bolt Graphics faces a competitive landscape that has seen GPUs evolve rapidly in recent years. The mainstream shift toward dedicated ray tracing hardware, tensor cores, and AI-accelerated rendering has created a baseline for high-end GPUs. A product that claims dramatic gains in path tracing must contend with established players’ performance, reliability, and ecosystem maturity. The company will need to demonstrate not only peak numbers but also consistency across diverse workloads, thermal stability, power efficiency, and long-term support commitments to persuade professional buyers and OEMs.

Ethical and practical considerations also arise. Deploying extremely capable GPUs in professional settings carries responsibilities around energy consumption and environmental impact. While a 225W maximum draw is modest by current flagship standards, overall system power, cooling requirements, and total cost of ownership must still factor into decision-making. Transparent disclosure of test methodologies, and avoiding overpromising, are also important for maintaining trust within the user community.
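
Energy use is straightforward to estimate once a duty cycle is fixed. The sketch below covers the card alone running at its 225W ceiling; the eight-hour daily duty cycle and the price of 0.15 per kWh are hypothetical placeholders, and CPU, cooling, and power-supply losses would add to the system total.

```python
def annual_energy_kwh(watts: float, hours_per_day: float, days: int = 365) -> float:
    """Energy consumed over a year at a constant draw."""
    return watts * hours_per_day * days / 1000

def annual_cost(watts: float, hours_per_day: float, price_per_kwh: float, days: int = 365) -> float:
    """Electricity cost for that energy at a flat tariff."""
    return annual_energy_kwh(watts, hours_per_day, days) * price_per_kwh

# Hypothetical duty cycle and electricity price; substitute local figures.
HOURS_PER_DAY = 8
PRICE_PER_KWH = 0.15
print(f"{annual_energy_kwh(225, HOURS_PER_DAY):.0f} kWh per year")
print(f"{annual_cost(225, HOURS_PER_DAY, PRICE_PER_KWH):.2f} per year")
```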

Looking ahead, the industry will benefit from continued transparency regarding such ambitious claims. Independent benchmarking, open access to test suites, and a clear roadmap outlining when and how the product will mature into a production-ready offering will help stakeholders assess whether Bolt Graphics’ vision is a viable long-term bet. The tech press, analysts, and potential customers will likely monitor developments closely, seeking concrete data on performance across workloads, as well as progress in driver stability, software tools, and real-world case studies.

The broader trajectory of GPU development suggests a continued emphasis on expanding memory bandwidth and capacity, while refining software ecosystems to exploit these gains. If Bolt Graphics demonstrates credible, reproducible performance improvements and a reliable hardware-software stack, it could influence future GPU designs and set new expectations for real-time rendering capabilities. Conversely, if the 10x figure remains unverified, the industry will await more rigorous demonstrations before revising benchmarks or investment strategies. In either case, the orientation toward large-scale memory configurations signals that memory-driven acceleration remains a central axis of innovation in high-performance graphics.

Key Takeaways

Main Points:

– Bolt Graphics showcases a prototype card with up to 384GB of memory (LPDDR5X + DDR5), including 128GB of soldered VRAM, plus an 800 Gbps memory interface.

– The company claims up to 10x the path-tracing performance of an RTX 5090, but has not yet provided independent proof.

– Power envelope is listed at 225W via an 8-pin PCIe connector; practical performance depends on cooling and drivers.

Areas of Concern:

– Absence of independent benchmarks or published methodologies.

– Unclear reliability and latency implications of a hybrid memory architecture.

– Uncertain software maturity, driver support, and developer ecosystem alignment.

Summary and Recommendations

Bolt Graphics’ CES display presents a provocative vision for real-time path tracing built on an unprecedented memory configuration and high-bandwidth design. The core claim of up to 10x the performance of an RTX 5090 is compelling but remains unverified without independent benchmarking and transparent test conditions. The hybrid memory approach, combining LPDDR5X, DDR5, and soldered VRAM, aims to provide large capacity without sacrificing bandwidth, yet it introduces uncertainties around latency, coherence, and reliability under sustained workloads. The 225W power rating is modest by current flagship standards, and real-world performance will depend on thermal management and power delivery in production-grade hardware.

For now, industry observers should treat the performance figures as provisional until corroborated by third-party testing across representative workloads. The critical path forward includes: (1) independent benchmarking under standardized scenarios; (2) detailed disclosures of test methodologies, driver versions, and software stacks; (3) thorough thermal and endurance testing to validate sustained performance; and (4) a clear roadmap for production availability, pricing, and ecosystem support. If subsequent evaluations confirm Bolt Graphics’ claims, the development could influence GPU memory architectures and real-time rendering capabilities for years to come. Until then, the market should monitor developments with cautious optimism, awaiting verifiable results and a robust software framework to realize the hardware’s potential.

References

- Original: techspot.com article detailing Bolt Graphics’ claims and CES display
- Additional references:
  - Industry analysis on memory bandwidth and path tracing performance in modern GPUs
  - Reports on hybrid memory architectures and their impact on latency and coherence
  - Independent benchmarking methodologies for high-end GPUs and real-time rendering workloads
