TLDR

• Core Points: At Davos, Microsoft CEO Satya Nadella reframed AI leadership as managing “infinite minds,” building on classic tech metaphors.

• Main Content: Nadella shared a forward-looking metaphor at the World Economic Forum, positioning humans as stewards of collaborative, expansive AI cognition.

• Key Insights: The shift emphasizes human-AI collaboration, governance, and ethical stewardship in a rapidly scaling digital landscape.

• Considerations: Implications for workforce upskilling, policy, and accountability structures; potential concerns over control and transparency.

• Recommended Actions: Companies should invest in responsible AI practices, continuous learning, and cross-sector dialogue to align innovation with societal values.

Content Overview

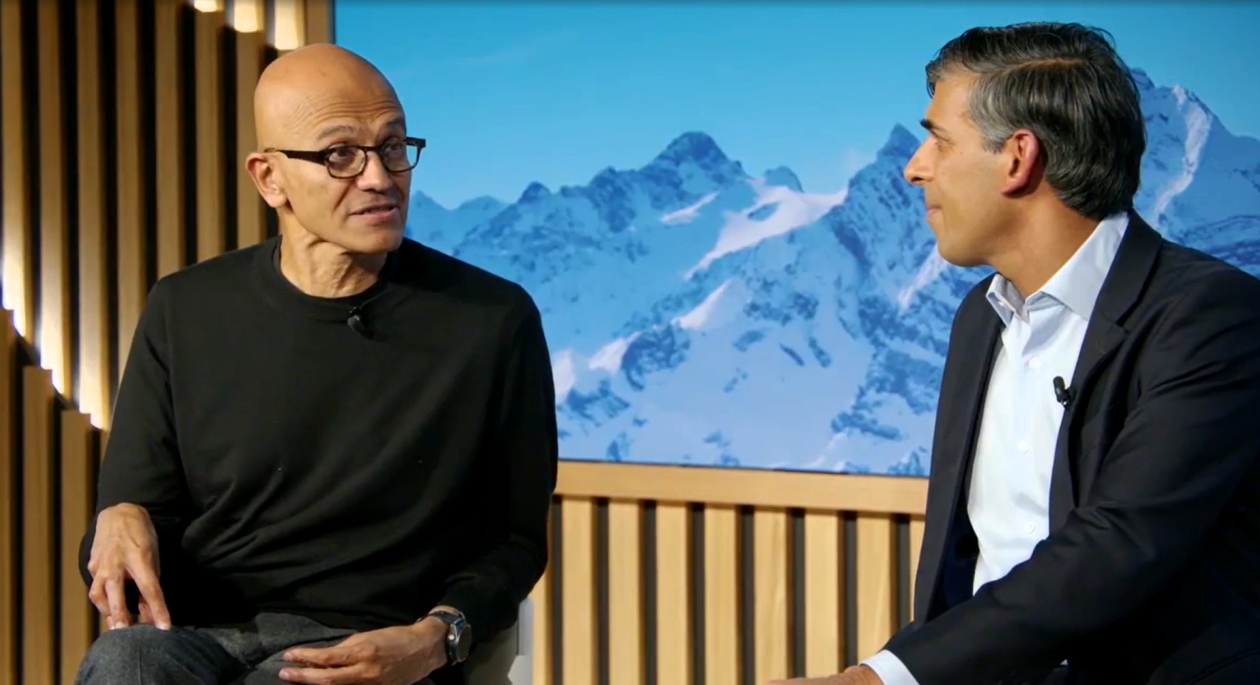

At the World Economic Forum in Davos, Switzerland, Microsoft CEO Satya Nadella delivered a keynote that continued his broad thematic arc about artificial intelligence transforming society. Nadella drew on familiar historical tech aphorisms while introducing his own provocative metaphor for the AI era: humanity as “managers of infinite minds.” The phrase suggests a future in which human operators, policymakers, developers, and end users collaborate with AI systems that can synthesize vast streams of information, reason through complex problems, and generate insights beyond any single human or conventional software. The metaphor resonates with Nadella’s recent emphasis on responsible AI, ethical governance, and the centrality of human judgment in an increasingly automated world. Here, we examine the context of his remarks, the logic behind his metaphor, and the potential implications for businesses, workers, and public policy.

Nadella’s Davos remarks come at a moment when AI is moving from theoretical potential to practical, pervasive deployment across industries. The Microsoft chief has consistently framed AI as a tool that augments human capabilities rather than replaces them, provided that governance, transparency, and accountability keep pace with technical advances. In Davos, he revisited well-known lines about computing progress—imagery that tech leaders have used for decades to describe milestones in software, cloud computing, and intelligent systems—and positioned his new metaphor as a natural extension of that lineage. The “managers of infinite minds” concept is not a single program or product; it is a mental model for navigating an era in which AI can coordinate large networks of data, agents, and decision-makers while requiring careful stewardship from humans.

The Davos audience, which includes business leaders, policymakers, and researchers, received Nadella’s framing as both aspirational and practical. It places responsibility on organizations to design AI with human values at the center and to build infrastructures that enable safe experimentation, continuous learning, and broad access to AI benefits. The broader aim is to balance innovation with governance, ensuring that AI augments human decision-making rather than diminishing accountability or shifting power too far from people to machines.

Within Nadella’s leadership arc, the “infinite minds” metaphor aligns with Microsoft’s broader AI strategy: a platform approach that integrates large-scale AI models with cloud infrastructure, developer tools, and enterprise-grade safeguards. Nadella has repeatedly called for responsible AI, emphasizing fairness, reliability, safety, privacy, and inclusiveness. Davos provided a stage to articulate how those values translate into a practical, forward-looking worldview for corporate strategy, policy engagement, and societal impact. The metaphor also invites consideration of how organizations train, supervise, and govern AI systems, and how workers can adapt to a future in which AI-enabled insights are a routine part of decision-making.

As the AI landscape evolves, Nadella’s metaphor points to several core themes: the importance of human oversight in AI-driven processes; the need for scalable governance frameworks that can address risk while supporting innovation; and the imperative to ensure that AI’s benefits are broadly shared. By presenting humans as “managers” rather than mere operators or consumers of AI, Nadella underscores that meaningful progress will come from deliberate design choices, collaborative experimentation, and ongoing dialogue among technologists, business leaders, and the public.

This reframing also underscores the practical steps that organizations can take in the near term. These include investing in responsible AI practices, enhancing transparency around AI systems, and building training programs that prepare the workforce for collaboration with intelligent technologies. It also calls for policymakers to develop regulatory and ethical standards that reflect the rapid capabilities of AI while protecting fundamental rights. Taken together, Nadella’s message at Davos signals a continuing shift toward a governance-centric view of AI development, one that seeks to harness exponential computational power without sacrificing human values or accountability.

In sum, Nadella’s new metaphor for the AI age—“managers of infinite minds”—is both aspirational and actionable. It frames AI as a catalyst for more powerful, collaborative decision-making while insisting that human judgment, governance, and ethics remain foundational. As AI systems scale and integrate more deeply into organizational and societal structures, this metaphor offers a lens for aligning innovation with responsibility. The coming years will reveal how accurately the metaphor maps onto real-world practice, but its emphasis on stewardship, collaboration, and inclusive progress presents a clear direction for leadership in an era defined by artificial intelligence’s expanding reach.

In-Depth Analysis

The centerpiece of Nadella’s Davos appearance was less a product pitch and more a conceptual roadmap. By invoking familiar milestones in computing history—such as the shift from mainframes to personal computers, and subsequently to cloud-based platforms—Nadella situates today’s AI developments within a continuum of human–machine collaboration. The new metaphor—humans as “managers of infinite minds”—adds a layer of responsibility to that continuum, suggesting that the most consequential work in AI will occur at the intersection of capability, governance, and ethics.

From a strategic perspective, Microsoft’s approach to AI centers on building a scalable platform that can support diverse workloads while embedding safeguards and governance mechanisms. Nadella’s language reflects a belief that AI will not be a single technology but a distributed system of models, tools, and services that work in concert with human decision-makers. In this framework, the human role is not diminished but redefined: humans supervise, curate, and direct AI processes, ensuring alignment with organizational objectives and societal norms.

This reframing also responds to persistent concerns about AI risk. Critics have warned about opacity in model behavior, biases embedded in data, and the potential for automation to displace workers. Nadella’s emphasis on “infinite minds” implicitly acknowledges these challenges and foregrounds the need for transparency and accountability. The “manager” role implies active governance: setting goals, monitoring outputs, understanding limitations, and intervening when models behave unexpectedly. It also signals the importance of explainability and auditability as the AI landscape becomes more complex and capable.

On the technical front, the metaphor hints at a future in which AI systems can connect disparate data sources, synthesize insights across domains, and assist with decision-making at speed and scale. This requires robust data governance, interoperable platforms, and ethical safeguards that can keep up with rapid experimentation. Microsoft’s investments in hybrid cloud, AI tooling, and enterprise-ready safeguards align with this vision. Nadella’s framing suggests that success will come not merely from technical prowess but from the ability to orchestrate a diverse ecosystem of AI agents, developers, and end users in a way that maintains trust and accountability.

The Davos setting—an annual gathering of global leaders—adds a policy dimension to the conversation. Nadella’s metaphor implicitly invites dialogue about regulatory design, workforce transition, and cross-border collaboration on AI standards. If AI is to serve society broadly, as he implies, then governance frameworks must accommodate innovation while guarding against misuse. The metaphor thus functions as a call to action for policymakers to work with industry to establish frameworks that support safe experimentation, responsible deployment, and equitable access to AI benefits.

In interpreting Nadella’s metaphor, it’s important to distinguish between aspirational language and practical implementation. The “managers of infinite minds” idea is intentionally expansive, designed to capture the imagination of leaders across sectors. Translating it into concrete practices requires a portfolio of initiatives: ethics-by-design processes, continuous monitoring and red-teaming of AI systems, diverse data inputs to mitigate bias, and workforce development programs that enable employees to work effectively with AI tools. It also necessitates investment in explainability, model governance, and standards-aligned procurement to ensure that AI solutions meet organizational and societal expectations.

From a human-centered perspective, Nadella’s framing honors the enduring value of human judgment. Even as AI systems scale, the need for domain expertise, contextual understanding, and ethical discernment remains critical. The metaphor suggests that AI will increasingly handle cognitive tasks at scale, while humans will focus on framing problems, interpreting results, and applying insights in ways that reflect shared values. This division of labor could help mitigate some risks associated with automation while elevating the strategic contribution of human workers.

Looking ahead, the metaphor raises questions about education and retraining. If leaders are to act as managers of multiple AI-informed streams of cognition, organizations must upskill a broad base of employees so they can participate meaningfully in AI-enabled workflows. Universities, corporations, and public institutions will need to collaborate to design curricula and training programs that emphasize data literacy, critical thinking, and ethical reasoning in tandem with technical fluency. The implication is a holistic model of capability-building rather than a narrow focus on software proficiency.

Nevertheless, several concerns warrant closer scrutiny. First, the phrase “infinite minds” risks overpromising or creating unrealistic expectations about AI capabilities. Real-world AI systems have limits, require substantial infrastructure, and may introduce new categories of risk. Second, governance- and accountability-related questions remain thorny. Who bears responsibility for an AI’s decisions? How do we audit and correct errors at scale? What standards govern transparency without compromising security and proprietary interests? Third, there is a potential risk that high-level metaphors could obscure practical governance challenges, such as data governance, model bias, and consent in data usage. It is essential that the metaphor be paired with concrete policy and organizational practices.

Microsoft’s broader AI strategy, including its emphasis on responsible AI, is compatible with Nadella’s Davos message. The company has advocated for fairness, reliability, safety, privacy, and inclusiveness, and it has invested in tools and frameworks intended to help developers build safer AI solutions. Nadella’s metaphor can be seen as a narrative device to rally internal efforts and external partnerships around these principles, while also signaling ambition for large-scale, interoperable AI platforms that enable diverse uses across industries.

In the public sphere, the metaphor invites civil society and regulatory bodies to engage in constructive conversations about the governance of AI-enabled decision-making. As AI systems become more capable of influencing outcomes in sectors like healthcare, finance, and public policy, the need for robust oversight grows more urgent. Nadella’s framing could help shift the discourse toward governance as a core element of AI strategy, rather than an afterthought.

Overall, Nadella’s new metaphor contributes to the ongoing conversation about how to live with AI at scale. It offers a coherent narrative that links technical capability with human stewardship, ethics, and governance. It also places emphasis on the human role in guiding AI, which can help relieve some public concern about machine autonomy by underscoring human oversight and accountability. If adopted widely, this mindset could shape organizational culture, policy development, and educational priorities for years to come.

Perspectives and Impact

- Business governance: As AI systems become more integrated into strategic decision-making, executives will need to codify governance practices that ensure accountability, explainability, and risk management. Nadella’s metaphor provides a language for discussing these issues at the highest levels of corporate leadership.

- Workforce implications: The idea of humans managing “infinite minds” underscores the importance of upskilling and reskilling. Employees across roles—from data scientists to frontline managers—will likely engage more deeply with AI-assisted processes, requiring training that emphasizes data literacy, critical thinking, and ethical judgment.

- Policy and regulation: Policymakers are increasingly called to design frameworks that balance innovation with protection. Nadella’s perspective invites dialogue about standards for transparency, safety, and accountability in AI systems, potentially influencing international norms and cross-border cooperation.

- Ethics and trust: The metaphor foregrounds ethical considerations as central to AI adoption. Trust in AI depends on transparent reasoning, auditable outputs, and consistent safeguards against harm. This trust-building is essential for broad societal acceptance of AI technologies.

- Innovation ecosystem: A shift toward human-managed AI networks could drive collaboration among tech firms, academia, and civil society. Shared governance standards and interoperable platforms may accelerate safe experimentation and help diffuse AI benefits more widely.

Future implications include a continued evolution of AI platforms that enable more complex collaboration between humans and machines. This could lead to new organizational roles, such as AI governance officers or AI ethics stewards, dedicated to overseeing model behavior, data quality, and alignment with human values. It may also catalyze more proactive risk assessment frameworks and scenario planning to address potential AI-driven disruptions.

Key Takeaways

Main Points:

– Nadella reframes AI leadership as humans managing “infinite minds,” emphasizing governance and ethics.

– The metaphor builds on a historical continuum of computing progress, situating AI as a collaborative tool.

– Practical implementation requires governance, explainability, workforce upskilling, and cross-sector collaboration.

Areas of Concern:

– Overpromising AI capabilities through metaphor without acknowledging limits.

– Ensuring accountability for AI-driven decisions and outputs.

– Addressing workforce displacement and equitable access to AI benefits.

Summary and Recommendations

Satya Nadella’s Davos remarks articulate a forward-looking, governance-centered vision for the AI era. By characterizing humanity as “managers of infinite minds,” he shifts the conversation from merely deploying powerful algorithms to designing systems that are auditable, controllable, and aligned with societal values. This metaphor serves as a strategic compass for organizations seeking to harness AI responsibly while pursuing scalable innovation. It foregrounds human oversight, ethical governance, and inclusive benefits as essential components of AI strategy, rather than optional add-ons.

For businesses, the actionable takeaway is clear: embed responsible AI practices into every stage of development and deployment, invest in people through upskilling, and create governance structures that enable transparent decision-making. Engage with policymakers and the public to shape standards that balance innovation with safeguards. Prepare for organizational changes that accompany AI-enabled workflows, and foster a culture of continuous learning and ethical reflection. If the metaphor translates into concrete practice, it could help maximize AI’s positive impact while mitigating risk and public concern.

As the AI landscape continues to evolve, Nadella’s framework provides a way to think about scaling intelligence without losing sight of human values and responsibility. The coming years will test how effectively leaders can translate this aspirational language into tangible governance models, technical safeguards, and inclusive outcomes. Success will depend on the willingness of organizations to adopt robust governance, invest in people, and take part in a global dialogue about deploying AI technologies responsibly and for broad benefit.

References

- Original: https://www.geekwire.com/2026/satya-nadellas-new-metaphor-for-the-ai-age-we-are-becoming-managers-of-infinite-minds/

- Additional context:

  - Microsoft on Responsible AI and governance practices

  - World Economic Forum discussions on AI policy and ethics
