TLDR

• Core Points: Lack of consensus on “fast” drives subjective performance judgments; benchmark testing provides objective metrics to validate speed claims.

• Main Content: A lesson from professional experience on how impressions of speed arise, the pitfalls of postponing performance tests, and how systematic benchmarking clarifies what “fast” means for an application.

• Key Insights: Objective benchmarks align stakeholders, reveal bottlenecks early, and guide cost-effective optimizations; performance should be defined, measured, and tracked.

• Considerations: Define target SLOs, select representative workloads, ensure consistent environments, and plan for ongoing benchmarking as features evolve.

• Recommended Actions: Establish performance goals, implement repeatable benchmark suites, monitor continuously, and use findings to guide releases and capacity planning.

Content Overview

In software development, the perception of an application’s speed can be highly subjective. A user’s impression of “too slow” may arise when there is no agreed-upon standard for what counts as fast performance. This is precisely where benchmark software testing becomes essential. The article traces a professional experience where a product’s performance issues were not rooted in crashes or errors but in vague feelings of slowness. It underscores the importance of establishing clear performance benchmarks early in the development lifecycle to prevent post-release ambiguity and misaligned expectations.

The narrative begins with a real-world incident: at 2 a.m., a notification arrived describing the application as feeling slower than acceptable. There were no crashes or visible failures, only a subjective sense of lag. The lesson is that without a shared definition of “fast,” teams cannot effectively diagnose or address performance concerns. Benchmarking offers a structured approach to quantify speed, diagnose bottlenecks, and verify improvements in subsequent iterations.

The article then reflects on a common pattern in software projects: a team ships a feature-rich product to production once unit tests pass, only to discover later that performance targets were never clearly defined. A marketing push can amplify user expectations, leading to complaints about latency or throughput that unit tests are not designed to capture. In such cases, benchmark testing helps separate performance hygiene from feature completeness, enabling teams to measure how the software behaves under realistic loads and during peak usage.

By demonstrating how subjective impressions can diverge from objective measurements, the piece highlights several key considerations for effective performance benchmarking:

– Define what “fast” means for the product in concrete terms (e.g., response time under specified load, latency percentiles, or saturation capacity).

– Create repeatable benchmarks that reflect real-world usage patterns, not just synthetic tests.

– Integrate benchmarking into the development and release process so performance is verified alongside functionality.

– Interpret results with a clear framework, distinguishing improvements from noise and understanding environmental factors that influence measurements.
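As a minimal sketch of what a concrete definition of “fast” can look like, the Python below checks a batch of latency samples against percentile targets. The SLO limits, the nearest-rank percentile method, and the sample data are illustrative assumptions, not figures from the original scenario.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical SLO for one user journey: p95 <= 200 ms and p99 <= 400 ms.
SLO_MS = {95: 200.0, 99: 400.0}


def check_slo(latencies_ms, slo=SLO_MS):
    """Return {pct: (observed_ms, limit_ms, passed)} for every target in the SLO."""
    report = {}
    for pct, limit in slo.items():
        observed = percentile(latencies_ms, pct)
        report[pct] = (observed, limit, observed <= limit)
    return report


if __name__ == "__main__":
    # Illustrative data only: 97 fast requests and 3 very slow ones.
    samples = [80.0] * 97 + [900.0] * 3
    print(f"mean = {mean(samples):.1f} ms")
    for pct, (observed, limit, ok) in check_slo(samples).items():
        print(f"p{pct}: {observed:.0f} ms (limit {limit:.0f}) {'PASS' if ok else 'FAIL'}")
```

With the illustrative data, the mean is 104.6 ms and p95 is 80 ms, both comfortably inside a 200 ms budget, yet p99 is 900 ms and fails its target: the kind of tail an average hides.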

The narrative ultimately suggests that robust benchmarking improves reliability, aligns internal teams and external stakeholders, and informs practical decisions about architecture, optimization, and capacity planning. While the exact figures and scenarios may vary across projects, the underlying principle remains constant: objective, repeatable benchmarking is essential for determining and proving that an application is genuinely fast.

In-Depth Analysis

Benchmarking software performance involves more than running a single test or collecting high-level timings. It requires a deliberate approach to define goals, design workloads, execute tests in controlled environments, and interpret results with an eye toward actionable improvements. The core components of a solid benchmarking strategy include:

Defining concrete performance targets

– Establish service-level objectives (SLOs) that specify acceptable latency, throughput, and error rates under realistic conditions.

– Translate vague perceptions of “fast” into measurable metrics such as p95/p99 response times, median latency, and tail latency under peak load.

– Consider different user journeys and critical paths, not just an average case.

Selecting representative workloads
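A representative workload is often expressed as a weighted mix of user journeys. The sketch below assumes hypothetical journey names and weights; in a real project the weights would be derived from production logs or analytics rather than guessed. A fixed random seed makes every run replay the same request schedule, which keeps runs comparable.

```python
import random
from collections import Counter

# Hypothetical journey mix; real weights should come from production traffic.
JOURNEY_WEIGHTS = {
    "browse_catalog": 0.60,
    "search": 0.25,
    "checkout": 0.10,
    "cold_start_login": 0.05,  # rare but expensive path, kept in the mix on purpose
}


def build_schedule(n_requests, weights=JOURNEY_WEIGHTS, seed=42):
    """Draw a reproducible sequence of journeys that follows the weighted mix."""
    rng = random.Random(seed)  # fixed seed: every run replays the same schedule
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=n_requests)


if __name__ == "__main__":
    schedule = build_schedule(10_000)
    counts = Counter(schedule)
    for name, share in JOURNEY_WEIGHTS.items():
        print(f"{name:18s} target {share:.0%}  actual {counts[name] / len(schedule):.1%}")
```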

– Model workloads after real user behavior, including peak usage times, concurrent users, and data distribution patterns.

– Include scenarios such as cold starts, cache misses, database contention, and external dependencies.

– Use synthetic benchmarks to isolate components, but complement them with production-like traces to capture end-to-end performance.

Ensuring repeatability and comparability
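A benchmark harness can enforce part of this discipline by itself. The sketch below, assuming a hypothetical `workload()` stand-in for the code under test, discards warm-up runs, repeats the measurement, reports spread as well as the median, and records basic environment details so results from different machines are not compared by accident.

```python
import platform
import statistics
import time


def benchmark(fn, *, warmup=3, iterations=20):
    """Time fn() repeatedly after discarding warm-up runs (cold caches, lazy init)."""
    for _ in range(warmup):
        fn()
    timings_ms = []
    for _ in range(iterations):
        start = time.perf_counter()
        fn()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "iterations": iterations,
        "median_ms": statistics.median(timings_ms),
        "stdev_ms": statistics.stdev(timings_ms) if iterations > 1 else 0.0,
        "min_ms": min(timings_ms),
        "max_ms": max(timings_ms),
        # Keep the environment next to the numbers so runs are compared like-for-like.
        "environment": {
            "python": platform.python_version(),
            "system": platform.system(),
            "machine": platform.machine(),
        },
    }


def workload():
    # Hypothetical stand-in for the code under test.
    sorted(range(100_000, 0, -1))


if __name__ == "__main__":
    result = benchmark(workload)
    for key, value in result.items():
        print(f"{key}: {round(value, 3) if isinstance(value, float) else value}")
```

Python's standard `timeit` module offers similar repeat-and-time functionality; the hand-rolled loop here simply makes each step visible.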

– Use consistent test environments, hardware, network conditions, and data sets across runs.

– Version benchmarks alongside code changes to attribute performance differences accurately.

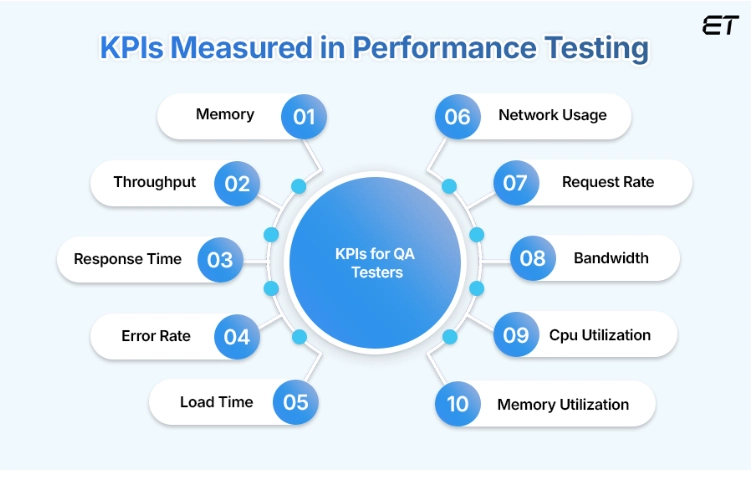

– Run multiple iterations to account for variability and stability.

Measuring meaningful metrics
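To see which layer dominates end-to-end latency, elapsed time can be attributed to named spans. The sketch below is a toy, in-process version of what tracing tools such as OpenTelemetry do across services; the layer names and the `time.sleep` calls are hypothetical stand-ins for real application, database, and cache work.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock time per layer, in milliseconds.
layer_totals_ms = defaultdict(float)


@contextmanager
def span(layer):
    """Attribute the wall-clock time of the enclosed block to `layer`, even on error."""
    start = time.perf_counter()
    try:
        yield
    finally:
        layer_totals_ms[layer] += (time.perf_counter() - start) * 1000.0


def handle_request():
    # Hypothetical request path; sleeps simulate work in each layer.
    with span("app"):
        time.sleep(0.002)
    with span("db"):
        time.sleep(0.010)
    with span("cache"):
        time.sleep(0.001)


if __name__ == "__main__":
    for _ in range(20):
        handle_request()
    total = sum(layer_totals_ms.values())
    for layer, ms in sorted(layer_totals_ms.items(), key=lambda kv: -kv[1]):
        print(f"{layer:6s} {ms:8.1f} ms  ({ms / total:.0%})")
```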

– Focus on end-to-end latency, throughput, resource utilization (CPU, memory, I/O), and error rates.

– Capture detailed traces to identify bottlenecks across layers (application code, database queries, cache layers, network).

– Monitor variance and tail behavior, not just averages.

Analyzing and acting on results
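One common way to separate a real regression from run-to-run noise is a bootstrap confidence interval on the change in median latency. This technique is chosen here for illustration (the original does not prescribe a specific statistical test), and the synthetic samples are not real measurements. If the whole interval lies above zero, the candidate build is slower with roughly the stated confidence; if it straddles zero, the difference is indistinguishable from noise.

```python
import random
import statistics


def bootstrap_median_diff(baseline, candidate, *, resamples=2000, confidence=0.95, seed=0):
    """Percentile-bootstrap interval for median(candidate) - median(baseline)."""
    rng = random.Random(seed)  # seeded so the verdict is reproducible
    diffs = []
    for _ in range(resamples):
        # Resample each run's latencies with replacement.
        b = rng.choices(baseline, k=len(baseline))
        c = rng.choices(candidate, k=len(candidate))
        diffs.append(statistics.median(c) - statistics.median(b))
    diffs.sort()
    tail = (1.0 - confidence) / 2.0
    lo = diffs[int(tail * resamples)]
    hi = diffs[int((1.0 - tail) * resamples) - 1]
    return lo, hi


def classify(baseline, candidate, **kwargs):
    """Label a change only when the whole interval sits on one side of zero."""
    lo, hi = bootstrap_median_diff(baseline, candidate, **kwargs)
    if lo > 0:
        return "regression", (lo, hi)
    if hi < 0:
        return "improvement", (lo, hi)
    return "no significant change", (lo, hi)


if __name__ == "__main__":
    rng = random.Random(7)
    # Synthetic latencies in ms, for illustration only.
    baseline = [rng.gauss(120, 15) for _ in range(300)]
    candidate = [rng.gauss(126, 15) for _ in range(300)]
    verdict, (lo, hi) = classify(baseline, candidate)
    print(f"{verdict}: median shift between {lo:+.1f} and {hi:+.1f} ms")
```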

– Differentiate between true regressions and normal fluctuations by applying statistical analysis.

– Prioritize bottlenecks that yield the greatest impact on user experience and cost.

– Develop a plan to address issues, such as code optimization, query tuning, caching strategies, or architectural changes.

Integrating benchmarks into the development lifecycle
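In a CI/CD pipeline, a benchmark step can act as a gate that fails the build when a metric regresses beyond an agreed tolerance. The sketch below, saved as a hypothetical `perf_gate.py`, assumes two JSON files mapping metric names to lower-is-better values (for example, p95 latency in milliseconds) and a 10% tolerance; the file layout and the threshold are placeholders for a team's own policy.

```python
import json
import sys

TOLERANCE = 0.10  # hypothetical policy: fail if a metric grows more than 10% over baseline


def compare(baseline, current, tolerance=TOLERANCE):
    """Return (metric, baseline_value, current_value) for each lower-is-better metric that regressed."""
    failures = []
    for metric, base_value in baseline.items():
        value = current.get(metric)
        if value is None:
            failures.append((metric, base_value, None))  # a missing metric also fails the gate
        elif value > base_value * (1.0 + tolerance):
            failures.append((metric, base_value, value))
    return failures


def main(baseline_path, current_path):
    with open(baseline_path) as f:
        baseline = json.load(f)
    with open(current_path) as f:
        current = json.load(f)
    failures = compare(baseline, current)
    for metric, base_value, value in failures:
        print(f"REGRESSION {metric}: baseline={base_value} current={value}")
    return 1 if failures else 0  # non-zero exit code stops the pipeline


if __name__ == "__main__":
    if len(sys.argv) == 3:
        sys.exit(main(sys.argv[1], sys.argv[2]))
    print("usage: perf_gate.py BASELINE.json CURRENT.json", file=sys.stderr)
```

A pipeline step might run `python perf_gate.py baseline.json current.json` and rely on the exit code to block a release; the baseline file itself would be versioned and refreshed deliberately as workloads evolve.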

– Treat performance as a first-class concern, not an afterthought.

– Include benchmarks in CI/CD pipelines for continuous verification.

– Schedule regular performance reviews, especially after major feature releases, data model changes, or deployments on new infrastructure.

Cultivating cross-functional alignment

– Share benchmark results with product, design, operations, and executive stakeholders to align expectations.

– Establish a common vocabulary for performance claims to avoid misinterpretation.

– Use benchmarks to justify investments in infrastructure, optimization efforts, or architectural changes.

The original scenario illustrates how performance perceptions can diverge from objective data when benchmarks are not in place. Without a shared definition of speed, teams risk misallocating time and resources or failing to meet user expectations. A systematic benchmarking program helps prevent such misalignment by providing tangible measures that can be tested, validated, and improved over time.

It’s also important to acknowledge the dynamic nature of software systems. As features accumulate, data volumes grow, and user access patterns shift, performance baselines shift as well. Benchmarking must be an ongoing discipline, not a one-off exercise. Regularly updated benchmarks reflect evolving usage and ensure that claims of speed remain accurate and credible.

*Image source: Unsplash*

Perspectives and Impact

The broader implications of implementing robust benchmark software testing extend beyond satisfying a single performance gripe. When an organization standardizes performance measurement, several positive outcomes emerge:

Predictable user experience: Customers and stakeholders gain confidence when performance targets are clearly defined and consistently met. This predictability reduces reactive firefighting and enables proactive optimization.

Better resource allocation: Benchmark results reveal which components consume the most time and resources. This clarity informs where to invest—whether in code optimization, database tuning, caching strategies, or scalable infrastructure.

Accelerated development cycles: By integrating benchmarking into CI/CD, teams receive rapid feedback on performance implications of changes. This accelerates iteration while maintaining quality.

Improved capacity planning: Observing how performance scales with users and data helps plan capacity and prevent outages during traffic surges. Scalable architectures can be designed with empirical data guiding decisions.

Enhanced cross-functional collaboration: When performance metrics are transparent and shared, teams from product, engineering, operations, and marketing can align on expectations and trade-offs. This alignment reduces conflicts and fosters a culture of measurable improvements.

Competitive differentiation: In markets where speed directly influences user satisfaction and retention, demonstrating consistent performance can become a competitive advantage. Clear benchmarks can support marketing claims with credible data.

However, challenges exist. Establishing realistic workloads requires careful analysis of user behavior and business goals. Benchmark results can be affected by environment, tooling, and randomness, necessitating rigorous controls and statistical interpretation. There is also a risk of gaming benchmarks if incentives encourage optimizing for tests rather than real user experiences. Therefore, benchmarks must reflect authentic scenarios and be continually refreshed to stay relevant.

Looking ahead, the trend toward increasingly interactive and data-intensive applications means that performance benchmarking will need to evolve. More emphasis will be placed on end-to-end latency across microservices, edge computing considerations, and the interplay between front-end rendering performance and back-end data processing. Observability tooling, tracing, and synthetic monitoring will complement traditional benchmarks, providing a fuller picture of how fast an application truly feels to users across geographic regions and devices.

The central insight remains: without a clear, shared definition of speed and a disciplined benchmarking program to verify it, performance worries will persist as vague sentiment rather than measurable truth. Benchmarking is not merely a testing activity; it is a governance mechanism that grounds performance discussions in data, guiding product decisions and engineering priorities.

Key Takeaways

Main Points:

– Vague perceptions of speed hinder effective performance improvement; benchmarks provide objective clarity.

– Define explicit performance targets and model realistic workloads to measure meaningful latency and throughput.

– Integrate repeatable benchmarks into the development lifecycle to track progress and guide decisions.

Areas of Concern:

– Over-reliance on averages can mask tail latency and user-experience issues.

– Environmental variability can skew results; strict controls and statistical analysis are essential.

– Benchmarks must reflect real-world usage and be updated as software and workloads evolve.

Summary and Recommendations

Performance benchmarking should be established as a core discipline within software development, not as an afterthought. Start by defining clear speed targets in concrete, measurable terms and identify representative workloads that mirror actual user behavior. Build repeatable benchmark suites that run in controlled environments and accompany code changes with performance data. Treat performance as a release criterion: verify it continuously, not just during a single test cycle.

Beyond technical measures, foster cross-functional alignment by sharing benchmarks and their implications with stakeholders across product, engineering, and operations. Use benchmark findings to guide architectural decisions, optimization priorities, and capacity planning. By embedding benchmarking into the lifecycle, teams can reduce subjective judgments about speed and deliver applications that consistently meet or exceed user expectations.

In the end, the goal is not merely to claim that an app is fast but to prove it with reliable, reproducible measurements that stakeholders trust and users appreciate.

References

- Original: https://dev.to/subham_jha_7b468f2de09618/benchmark-software-testing-how-to-know-your-app-is-actually-fast-pc3
