TLDR¶

• Core Points: Local lead generation can be automated via scalable data pipelines; n8n helps reliably collect high-value business data while navigating API limits and data quality concerns.

• Main Content: A practical approach to extracting thousands of verified local business contacts, focusing on high-value metadata and reliable workflow orchestration.

• Key Insights: Balancing data volume, accuracy, and compliance is essential; tool choice and rate-limit handling are critical for sustainable pipelines.

• Considerations: Compliance with terms of service, data freshness, and potential IP or access restrictions must be planned for; ongoing maintenance is necessary.

• Recommended Actions: Design modular, auditable workflows; implement robust error handling; validate data before downstream use; monitor rate limits and changes in data sources.

Content Overview¶

In the world of lead generation, the task of gathering precise, local business information at scale often presents as a tedious, error-prone endeavor. Yet when the objective is to compile thousands of verified business contacts within a defined geographic area—such as a set of ZIP codes—the challenge becomes a technical puzzle: how to orchestrate a reliable data collection pipeline that yields not just names, but high-value metadata. Phone numbers, website URLs, review counts, geographic coordinates, and other indicators of business quality are especially valuable for sales, marketing automation, and product-led growth strategies.

Historically, many developers attempted to scrape data from mapping services like Google Maps using Python scripts. However, these efforts frequently collide with API rate limits, anti-scraping defenses, and data accuracy issues. The result is brittle pipelines that require constant tinkering, with diminishing returns as access constraints tighten and platform policies evolve. This article examines a practical, maintainable approach to building a local lead-gen machine using a workflow automation tool—n8n—that can orchestrate complex data collection tasks, manage retries, and maintain a clean audit trail.

The central premise is to design a pipeline that targets local businesses in specific ZIP codes and captures a suite of high-value fields. Rather than relying on ad-hoc queries or fragile scraping scripts, the approach emphasizes modular components, transparent data flows, and robust error handling. The aim is to deliver dependable, scalable results while maintaining awareness of legal and policy considerations associated with data collection from third-party services.

In-Depth Analysis¶

The core mechanics of building a reliable local lead-gen pipeline begin with clearly defined data requirements. Beyond basic identifiers like business names, the pipeline seeks to capture:

- Contact information: primary phone numbers, email addresses if available, and direct lines.

- Online presence: official website URLs, social profiles, and listed hours of operation.

- Reputation indicators: review counts, rating values, and sentiment signals where feasible.

- Geolocation data: precise latitude and longitude coordinates to enable accurate mapping and geofencing.

- Metadata: business category, industry vertical, and any available business identifiers (e.g., Google My Business listing IDs).

To achieve scalable data collection, the workflow must address several practical constraints:

- Rate limits and access controls: Public APIs and mapping platforms often impose request limits and anti-scraping measures. A robust pipeline requires rate-limit awareness, backoff strategies, and possibly multiple data sources to corroborate information.

- Data quality and normalization: Data from disparate sources can vary in format and completeness. A standardized data model and normalization routines help ensure consistency across the dataset.

- Privacy, terms of service, and compliance: Scraping platforms may restrict automated data gathering. Legal and policy considerations should guide the design, with explicit attention paid to terms of service, data ownership, and permissible usage.

- Maintainability and observability: Long-running data pipelines benefit from clear logging, retries, alerting, and easy replacement of components if data sources change or fail.

- Reproducibility and auditability: When dealing with large volumes of business data, it’s essential to maintain an auditable trail of data provenance, transformation steps, and change histories.

An effective solution involves a workflow automation tool that simplifies orchestration without sacrificing control. In this context, n8n emerges as a practical option. Its visual workflow editor enables the construction of modular data pipelines with nodes representing discrete tasks, such as making API calls, transforming data, validating results, and writing outputs to a database or spreadsheet. The benefits of such an approach include:

- Modularity: Each data source, parsing rule, or validation step can be developed and tested independently.

- Reusability: Common tasks—like fetching contact information from a business profile, normalizing phone numbers, or geocoding addresses—can be reused across multiple pipelines.

- Observability: Real-time logging and error handling provide transparency into pipeline behavior and data quality.

- Extensibility: The workflow can be extended to incorporate additional data sources or downstream processes (e.g., enrichment services, CRM updates, or marketing automation triggers).

However, several caveats deserve attention. Google Maps data is subject to licensing and usage constraints. Automated scraping or bulk extraction may contravene terms of service or trigger IP bans. If the project requires scalable, legitimate data access, exploring official APIs, licensed data providers, or partner programs is advisable. In cases where API access is available, respecting rate limits and implementing polite backoffs help maintain access and reduce the risk of service disruption. When direct API access is not feasible, alternative strategies such as data partnerships or opt-in lead lists can be considered to avoid policy conflicts.

A practical implementation path includes the following steps:

- Define the geographic scope: Precisely enumerate ZIP codes or a geographical bounding box to ensure the data collection remains focused and cost-effective.

- Establish a data model: Create a structured schema to hold business name, address, phone, website, coordinates, reviews, categories, and provenance metadata (source, timestamp, and confidence score).

- Build modular data fetchers: Implement nodes that retrieve business data from trusted sources (for example, official business directories, local search aggregators, or partner APIs) rather than relying on a single sandbox of scraped data.

- Implement data normalization: Normalize phone numbers, standardize address formats, and unify field naming conventions to ensure downstream integration is straightforward.

- Enrich and verify: Where feasible, enrich data with additional signals such as social profiles or website health indicators, and implement verification steps to assess data plausibility (e.g., cross-checking phone numbers against multiple sources).

- Manage errors and retries: Incorporate exponential backoff, circuit breakers, and clear error messages so the pipeline remains resilient to transient issues.

- Store and index: Persist the curated data into a searchable store (a database or spreadsheet) with indices on critical fields like location, category, and contact information.

- Monitor and maintain: Set up dashboards to monitor pipeline health, data freshness, and rate-limit usage; plan for ongoing maintenance as sources evolve.

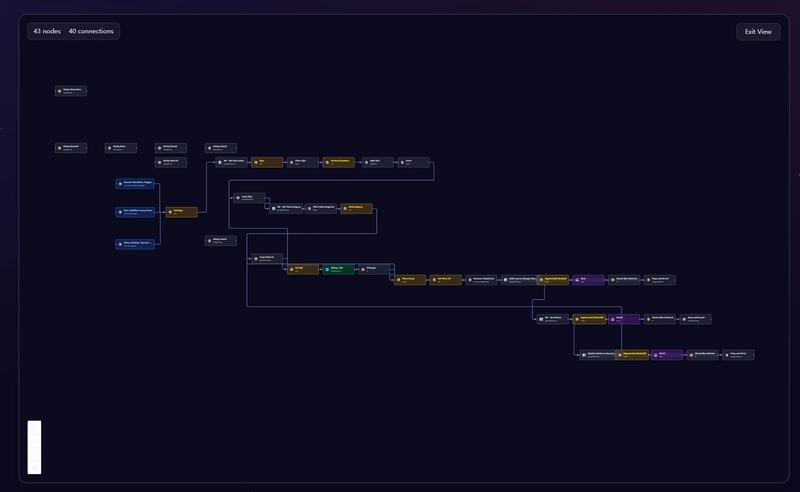

A representative workflow in n8n might feature:

*圖片來源:Unsplash*

- Triggers based on schedules or event-based triggers for new ZIP codes.

- HTTP request nodes to query data sources with properly configured parameters.

- Data transformation nodes to map raw responses into the canonical data model.

- Validation nodes to check for missing fields, duplicates, or low-confidence records.

- Conditional branches to handle retries, fallbacks, or escalation when data quality thresholds aren’t met.

- Output nodes to write to a centralized data store and optionally seed downstream systems (CRM, marketing automation, or analytics platforms).

Addressing data provenance and quality is essential. Each record should carry provenance metadata indicating sources, timestamps, and confidence levels. Regular audits can help identify drift or inaccuracies, enabling timely corrections or re-validation.

In practice, the reliability of such a pipeline improves as you decouple data acquisition from data verification. By treating data collection as an interchangeable orchestration task, you can swap in alternative data sources, switch to licensed datasets, or adjust for policy changes without overhauling the entire system. The combination of modular design, robust error handling, and transparent provenance enables scalable growth and reduces the risk of a single point of failure.

Additionally, it’s important to acknowledge the broader implications of collecting local business data. While the technical feasibility of building a lead-gen pipeline is compelling, responsible data practices and compliance considerations should guide implementation choices. This includes honoring consent and respecting data subject rights where applicable, as well as ensuring that outreach practices align with regulatory standards and platform terms.

Perspectives and Impact¶

The approach described above has implications beyond a single project. For teams building location-based marketing strategies, a well-architected lead-gen pipeline can unlock several value streams:

- Targeted outreach: With a verified pool of local businesses and rich metadata, teams can tailor outreach campaigns based on industry, size, location, and digital presence.

- Market intelligence: Aggregated and normalized data provides insights into regional business activity, competitive landscapes, and market gaps.

- Operational efficiency: Automated workflows reduce manual research time, freeing up teams to focus on higher-value activities such as personalized outreach and relationship-building.

- Data governance: A structured pipeline with provenance and validation fosters trust in data quality and supports compliance with internal data standards and external regulations.

The evolution of such pipelines is closely tied to the availability and reliability of data sources. As data ecosystems mature, teams can incorporate more comprehensive data enrichment, third-party verification services, and real-time updates to maintain data freshness. Conversely, shifts in platform policies or licensing models may necessitate architectural changes, such as migrating from scraped data toward licensed datasets or partner feeds.

From a technical perspective, embracing workflow automation tools like n8n can democratize data engineering tasks. Non-expert users can contribute to building and maintaining pipelines with a visual interface, while seasoned engineers retain control through programmable logic, versioning, and testable components. This balance fosters collaboration, faster iteration, and a more resilient data infrastructure.

Looking forward, the intersection of automation, data quality, and compliance will shape how teams approach local lead generation. As businesses place greater emphasis on privacy and ethical data usage, pipelines that prioritize responsible data practices will be better positioned to scale and sustain long-term value. Innovation in data governance, licensing models, and provider partnerships will further influence the feasibility and cost-effectiveness of location-based lead generation at scale.

Key Takeaways¶

Main Points:

– A modular, automated workflow improves reliability when collecting local business data at scale.

– Data quality, provenance, and compliance are as important as volume and speed.

– Workflow tools like n8n enable scalable orchestration with transparent auditing and easy maintenance.

Areas of Concern:

– Data licensing, terms of service, and compliance risks when scraping mapping services.

– Dependence on third-party data sources that may change APIs, rate limits, or availability.

– Ongoing maintenance requirements to adapt to policy shifts and data source updates.

Summary and Recommendations¶

To build a dependable local lead-gen system, design a modular, auditable workflow that emphasizes data quality and provenance. Use a workflow automation tool to orchestrat tasks such as data acquisition, normalization, enrichment, and storage, while implementing robust error handling and rate-limit management. Remain mindful of compliance and licensing considerations, and be prepared to pivot toward licensed data sources or partner feeds if policy constraints tighten. Regular monitoring, testing, and documentation will help ensure the pipeline remains resilient as data sources evolve and business needs expand. A balanced approach—combining automation with responsible data practices—will yield scalable, high-quality local lead data that supports effective outreach and strategic decision-making.

References¶

- Original: https://dev.to/iloven8n/build-a-local-lead-gen-machine-scraping-google-maps-with-n8n-reliably-2jhe

- [Add 2-3 relevant reference links based on article content]

*圖片來源:Unsplash*