TLDR

• Core Points: Elon Musk says X will open source its new algorithm, including all code that determines which organic and advertising posts are recommended to users, within seven days.

• Main Content: The move follows ongoing scrutiny of X’s recommendation system by France and the European Commission, which has extended a data retention order through 2026.

• Key Insights: Open-sourcing the algorithm aims to increase transparency but may raise questions about security, monetization, and platform governance.

• Considerations: Regulators, developers, and advertisers will watch for how open sourcing affects moderation, bias, and trust in recommendations.

• Recommended Actions: Stakeholders should prepare for the release by assessing governance, safety checks, and documentation; monitor implementation and external audits.

Content Overview

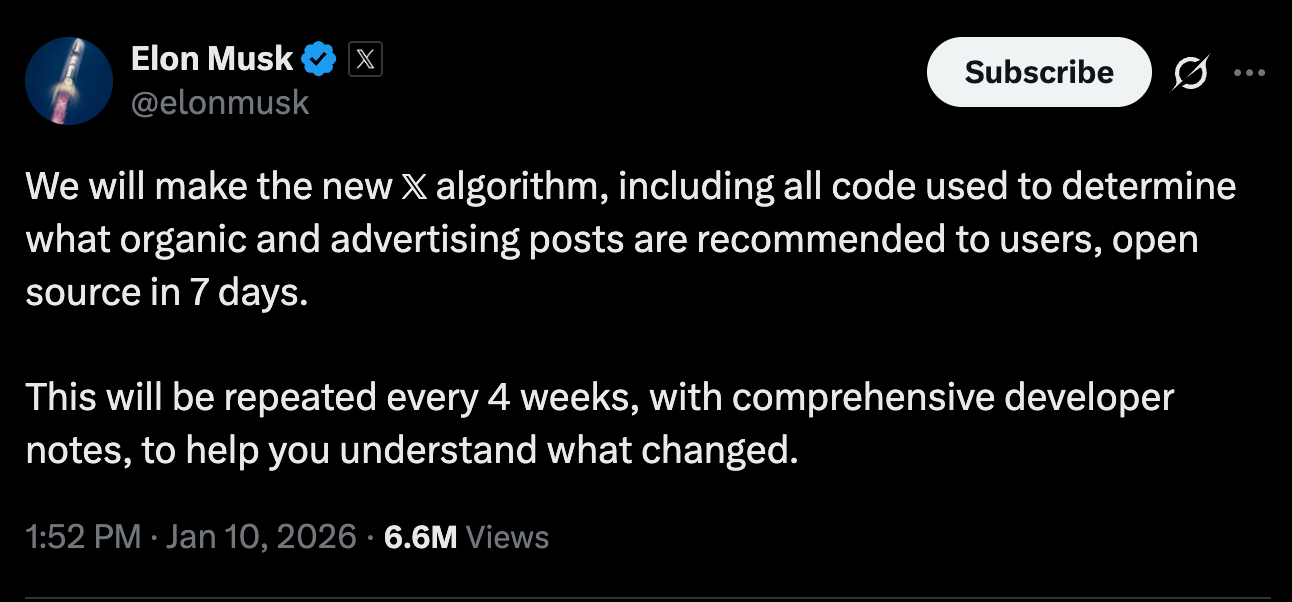

X, formerly known as Twitter, is preparing to make its recommendation algorithm open source. On a recent Saturday, Elon Musk announced on the platform that X would make “the new X algorithm, including all code used to determine what organic and advertising posts are recommended to users, open source in 7 days.” The announcement comes as X faces growing regulatory and public attention over how it prioritizes and promotes content.

The development comes amid regulatory scrutiny from international authorities, notably France and the European Commission (EC). The EC has been investigating X’s handling of user data and how its algorithm might influence content exposure. In a related move, the EC recently extended a retention order through 2026, requiring X to preserve certain data streams for regulatory review. The specifics of the order, including what datasets are retained and under which conditions, have implications for privacy, data governance, and the ability of regulators to audit platform practices.

Musk’s commitment to open sourcing the algorithm signals a shift toward greater transparency. However, it also raises questions about how the disclosure will be structured, what accompanying documentation will be provided, and how external developers will engage with the code. Critics and supporters alike will be looking for details on aspects such as data sources, feature engineering, fairness and bias mitigations, rate limits, and the interplay between organic and paid recommendations.

As X prepares for this release, industry observers note that open-sourcing a complex recommendation system is a multi-faceted undertaking. Beyond simply providing code, it requires robust governance processes, rigorous security assessments, and clear licensing terms to ensure the code can be inspected, reused, and improved by external researchers and developers without compromising user safety or platform integrity. Observers will also watch how the timing of the release aligns with X’s ongoing regulatory obligations and with its business model, including advertising revenue and content moderation.

This development also invites broader discussion about transparency in online platforms. Open-source availability could empower researchers to study how the algorithm propagates content, detect potential biases, and propose improvements. At the same time, it could surface new risks, such as adversarial use of the code to manipulate recommendations or attempt to exploit vulnerabilities in the system. Stakeholders will be keen to see whether X provides practical guidelines, code comments, test suites, and telemetry data that facilitate responsible examination by third parties.

In summary, X’s plan to open source its algorithm marks a notable step in the ongoing debate over transparency and accountability in social media platforms. The next few weeks will reveal how comprehensively the release is implemented and what safeguards accompany access to the code. Regulators, researchers, advertisers, and users will be watching closely to understand the impact on content discovery, user trust, and platform governance.

In-Depth Analysis

Elon Musk’s announcement places X at a crossroads between operational secrecy and public accountability. By pledging to open source the full algorithm that governs both organic and advertising recommendations, X is signaling that it intends to invite external scrutiny and collaborative improvement. This could speed up the identification and remediation of biases, echo chambers, and other unintended consequences of recommendation logic that researchers have highlighted in the past.

From a regulatory perspective, the European Commission’s ongoing inquiries into X’s data handling and algorithmic decision-making are central to understanding the potential impact of the open-source move. The EC’s decision to extend a data retention order through 2026 indicates that regulators are not stepping back from their examination of how user interactions and platform behaviors are recorded, stored, and potentially used for enforcement or research. The combined effect of an open-source approach and continued regulatory oversight may establish a framework in which platform operators balance transparency with compliance, security, and user privacy.

Transparency through open source could enable a broader community of researchers to audit the algorithm’s behavior, assess fairness across different user groups, and verify claims about how content is prioritized. Such engagement could foster trust among users who have concerns about algorithmic manipulation or opaque decision-making processes. It could also help advertisers understand the mechanics of ad exposure and how paid content competes with organic posts, potentially influencing how campaigns are designed and measured on the platform.
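As a rough illustration of what such an audit could look like, the sketch below compares how often different user groups are shown each category of content. Everything in it is hypothetical: the function names, the group labels, and the `(user_group, content_category)` log format are assumptions made for illustration, not anything X has published. Note that an audit like this needs logged or simulated impressions, which source code alone does not provide.

```python
from collections import Counter, defaultdict


def exposure_share(impressions):
    """Compute each group's share of impressions per content category.

    impressions: iterable of (user_group, content_category) pairs.
    Returns {group: {category: fraction of that group's impressions}}.
    """
    counts = defaultdict(Counter)
    for group, category in impressions:
        counts[group][category] += 1
    return {
        group: {cat: n / sum(c.values()) for cat, n in c.items()}
        for group, c in counts.items()
    }


def max_gap(shares, category):
    """Largest difference between any two groups' share for one category."""
    values = [s.get(category, 0.0) for s in shares.values()]
    return max(values) - min(values)


if __name__ == "__main__":
    # Made-up impression log: group A sees mostly news, group B mostly ads.
    log = [("A", "news")] * 3 + [("A", "ads")] + [("B", "news")] + [("B", "ads")] * 3
    shares = exposure_share(log)
    print(shares)
    print("news exposure gap:", max_gap(shares, "news"))
```

A large gap is only a prompt for further investigation; it does not by itself show that the ranking logic is unfair, since groups may simply follow different accounts.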

However, releasing the entire codebase of a proprietary recommendation system is not without risks. Open sourcing may expose internal design choices and security weaknesses that malicious actors could exploit if they are not mitigated first. To address these concerns, X would need to provide comprehensive documentation, clear licensing terms, and rigorous test suites that demonstrate the algorithm’s behavior under a range of scenarios. It would also be prudent to publish governance mechanisms that describe how external contributions will be reviewed, integrated, and validated before becoming part of the official codebase.

The interplay between organic and advertising content in a single recommendation framework is complex. The algorithm typically uses signals derived from user behavior, content metadata, advertiser constraints, and platform goals (e.g., engagement, retention, revenue). Open-sourcing the algorithm would make visible these signals and the weighting schemes applied to different factors. For researchers and practitioners, access to such information is valuable for understanding potential feedback loops that may amplify or dampen certain types of content, including political or socially relevant material.
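To make the idea of signals and weighting schemes concrete, here is a minimal sketch of a blended ranker that scores organic and paid posts on one scale. It is not X’s algorithm: the signal names, the weights, the `AD_BID_WEIGHT` constant, and the linear scoring rule are all assumptions chosen for illustration, and production systems typically use learned models rather than fixed weights.

```python
from dataclasses import dataclass

# Hypothetical signal weights; the article does not describe X's real ones.
WEIGHTS = {"predicted_engagement": 0.6, "recency": 0.3, "author_affinity": 0.1}
AD_BID_WEIGHT = 0.5  # hypothetical: how strongly an advertiser bid lifts a paid post


@dataclass
class Candidate:
    post_id: str
    is_ad: bool
    signals: dict      # signal name -> value in [0, 1]
    bid: float = 0.0   # normalized advertiser bid in [0, 1]; unused for organic posts


def score(c: Candidate) -> float:
    """Weighted sum of signals, plus a bid term for paid posts only."""
    base = sum(w * c.signals.get(name, 0.0) for name, w in WEIGHTS.items())
    return base + (AD_BID_WEIGHT * c.bid if c.is_ad else 0.0)


def rank(candidates):
    """Order candidates from highest to lowest score."""
    return sorted(candidates, key=score, reverse=True)


if __name__ == "__main__":
    feed = rank([
        Candidate("organic-1", False, {"predicted_engagement": 0.9, "recency": 0.5}),
        Candidate("ad-1", True, {"predicted_engagement": 0.3, "recency": 0.8}, bid=0.8),
    ])
    for c in feed:
        print(c.post_id, round(score(c), 3))
```

Even in a toy like this, the published weights would show an auditor exactly how much a bid can outweigh weak engagement signals, which is the kind of trade-off between organic and paid content that outside reviewers would want to inspect.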

One of the central questions will be how X plans to protect user privacy while making the algorithm transparent. Even with open source, many platform components, such as user data pipelines, data aggregation methods, and telemetry, could require redaction or restricted access to protect individual privacy. How cleanly data handling is separated from the algorithm’s logic will determine how effectively external observers can test for bias and performance without compromising user confidentiality.
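One common way to share telemetry for outside testing while limiting privacy exposure is to keep only an allow-list of fields and replace user identifiers with keyed hashes. The sketch below shows that general technique using Python’s standard `hmac` and `hashlib` modules. The field names, the `SAFE_FIELDS` allow-list, and the record layout are hypothetical, and nothing here reflects how X actually handles its data.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields survive redaction.
SAFE_FIELDS = ("post_id", "position", "is_ad")


def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a user ID with a keyed hash (HMAC-SHA256), truncated to 16 hex chars."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(record: dict, key: bytes) -> dict:
    """Keep allow-listed fields and swap the raw user ID for a pseudonym."""
    out = {field: record[field] for field in SAFE_FIELDS if field in record}
    out["user"] = pseudonymize(record["user_id"], key)
    return out


if __name__ == "__main__":
    secret = b"example-key-kept-private-by-the-platform"  # placeholder key
    raw = {"user_id": "u123", "email": "a@example.com",
           "post_id": "p9", "position": 2, "is_ad": False}
    print(redact(raw, secret))
```

Pseudonymization of this kind is not full anonymization: behavior patterns in the remaining fields can still re-identify people, so a real release would likely need aggregation or access controls on top.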

The practicalities of an open-source release also depend on the architecture of the codebase. Large-scale recommendation systems often involve microservices, distributed data processing, and machine learning pipelines that span multiple services and data environments. Providing a cohesive, usable, and secure open-source artifact requires careful packaging, clear interfaces, and robust documentation so external teams can build upon the code without risking instability in the production environment.

*Image source: Unsplash*

In the broader industry context, many technology firms have embraced open-source initiatives to varying degrees, with mixed outcomes. Some have found that releasing components of their technology stack can drive innovation, improve security through external scrutiny, and attract developers who contribute to the ecosystem. Others have faced challenges related to maintaining compatibility, licensing conflicts, and the potential erosion of competitive advantages. X’s decision will be observed for its ability to strike a balance between inviting external collaboration and preserving the core strategic advantages of its recommendation framework.

From a governance perspective, the open-source release could influence content moderation standards and platform policies. If researchers can validate biases or discriminatory tendencies within the algorithm, regulators and civil society groups may push for guideline refinements or new policy measures. Conversely, a transparent system could reduce the scope for contested claims about hidden biases, allowing for more precise, evidence-based discussions about platform effects on public discourse.

Looking ahead, the long-term implications of an open-source algorithm on X’s platform health and user experience remain to be seen. The timing of the release, the scope of the code included, and the quality of accompanying documentation will shape the trajectory of this initiative. If successful, open sourcing could become a blueprint for other platforms grappling with similar questions of transparency and accountability in algorithmic curation. If not managed carefully, it could lead to misinterpretations, security concerns, or disputes over code ownership and control.

In this evolving landscape, stakeholders should consider establishing formal channels for ongoing collaboration between X, researchers, regulators, and industry groups. Regular audits, third-party reviews, and standardized benchmarks could help maintain confidence in the platform while ensuring that improvements to the algorithm are both verifiable and socially responsible. The open-source release also provides an opportunity for education and public understanding of how complex recommendation systems operate, demystifying some of the processes behind what users see on their screens.

Perspectives and Impact

- Regulators and policymakers: The open-source move could facilitate more robust auditability of X’s recommendation system, helping authorities assess fairness, bias, and potential manipulation. It may prompt clearer guidelines on data handling, transparency, and algorithmic accountability across platforms.

- Researchers and developers: Open access to the code invites rigorous academic and practical scrutiny. Teams can replicate experiments, test for unintended consequences, and propose improvements that could benefit the broader ecosystem.

- Advertisers: Clearer visibility into how the algorithm weighs organic versus paid content may influence campaign design, performance metrics, and measurement approaches. Advertisers could advocate for more predictable exposure patterns or safeguards against undue amplification.

- Users: Greater transparency about why certain posts appear in feeds could improve trust and satisfaction. However, users may also face more technical explanations, or notice changes in their feeds as the algorithm is revised in response to outside findings.

- The platform’s business model: Open-sourcing the algorithm could enhance the platform’s credibility but may also affect competitive differentiation. It could drive innovation and collaboration while necessitating investments in governance, security, and compliance.

Future implications include whether other platforms follow suit to publish their recommendation systems, potentially leading to a new standard for transparency in social media curation. The balance between openness and competitive advantage will likely determine how this experiment influences platform strategy, user experience, and regulatory interactions in the coming years.

Key Takeaways

Main Points:

– Elon Musk announced that X will open source its new recommendation algorithm within seven days.

– The move occurs amid regulatory scrutiny from France and the European Commission, including a data retention order extended through 2026.

– Open sourcing could promote transparency and external validation but requires careful governance and security measures.

Areas of Concern:

– Potential security vulnerabilities revealed by publishing internal code.

– Risks to user privacy if data handling details are exposed alongside the open-source code.

– Possible impact on competitive advantage and monetization strategies.

Summary and Recommendations

X’s commitment to open sourcing its new algorithm marks a significant step in the broader push toward transparency in social media platforms. By providing the entire codebase used to determine what organic and advertising posts are recommended to users, X aims to invite external scrutiny, foster collaboration, and potentially accelerate improvements in fairness, bias mitigation, and overall user experience. The regulatory backdrop, particularly the European Commission’s extended data retention order, underscores the importance of robust governance and privacy protections as part of the disclosure.

To maximize the positive impact of this move, X should accompany the release with comprehensive documentation, clear licensing, and a well-defined process for external contributions, audits, and security testing. Access to the code should be balanced with privacy safeguards and a transparency roadmap that outlines how findings will be addressed. Regulators will likely assess whether the open-source approach strengthens accountability without compromising user protection or platform integrity.

For researchers, practitioners, advertisers, and users, the open-source release presents a valuable opportunity to understand and influence the mechanics behind content discovery. The success of this initiative will depend on the quality of the documentation, the robustness of governance around external participation, and the platform’s ability to implement improvements that reflect a broad range of stakeholder perspectives. Continuous monitoring, third-party audits, and ongoing dialogue with regulatory bodies will be essential to ensure that openness translates into tangible benefits for user trust, safety, and platform health.

In sum, the open-source release of X’s algorithm represents a bold experiment in algorithmic transparency for a high-stakes social platform. The next steps will reveal how well the initiative is executed, how it affects regulatory relationships, and whether it leads to lasting improvements in how content is curated and perceived by millions of users worldwide.

References

- Original: https://www.engadget.com/big-tech/elon-musk-says-xs-new-algorithm-will-be-made-open-source-next-week-225721656.html?src=rss

