TLDR

• Core Points: AI agents differ in how they decide and sequence actions; some follow rigid workflows, others generate autonomous steps toward a goal.

• Main Content: Understanding decision-making processes and action ordering is essential to grasp AI agents’ real behavior.

• Key Insights: The architecture behind an AI agent—whether deterministic or goal-driven—shapes reliability, adaptability, and risk.

• Considerations: Trade-offs exist between strict control and autonomous flexibility; governance and containment are critical.

• Recommended Actions: Assess systems by decision chains and contingencies, not only models or demonstrations; design with safeguards and evaluation criteria.

Content Overview

Discussion of AI agents has moved beyond flashy demos and polished toolkits toward the core mechanics that drive their behavior. This article, Part II of a three-part series, emphasizes a foundational distinction: the difference between agents that execute predefined sequences and those that derive their own steps to reach a given objective. The choice between strict workflows and autonomous planning fundamentally influences how an AI agent responds to tasks, handles uncertainty, and coexists with human operators. By focusing on where decisions are made and how actions are ordered, we gain a clearer understanding of the entire agent lifecycle, from intent to execution to evaluation.

In Part I, readers were introduced to the landscape of AI agents and the taxonomy of capabilities that underlie them. Part II builds on that foundation by dissecting the decision-making processes that determine how an agent behaves in practice. The emphasis is not on the latest model or the most impressive demo, but on the mechanisms that translate goals into concrete actions, why those mechanisms matter, and how they shape real-world outcomes. Part III will synthesize these ideas with practical guidance, benchmarks, and governance considerations for deploying AI agents responsibly at scale.

In-Depth Analysis

The core question driving AI agent design is how decisions are made and in what order actions occur. Broadly, there are two architectural philosophies:

1) Strict workflow-driven agents

2) Goal-driven, autonomous planning agents

Strict workflow-driven agents operate within a defined sequence. They are given a task and a rigid plan or a set of rule-based steps to execute. This approach emphasizes predictability, traceability, and control. Each action follows a predetermined path, with checkpoints that validate progress before moving forward. In environments where safety, compliance, and auditability are paramount, such deterministic behavior can be advantageous. The trade-off, however, is flexibility: when new information arises or when a plan fails mid-execution, the system may struggle to adapt without human intervention or a pre-programmed exception-handling script.
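To make the fixed-sequence idea concrete, here is a minimal Python sketch of a workflow runner with per-step checkpoints. Everything in it is a hypothetical illustration rather than an API from the original article: the `Step` and `CheckpointFailed` names, the `run_workflow` function, and the refund example are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable

State = dict  # hypothetical: shared task state passed from step to step


@dataclass
class Step:
    name: str
    run: Callable[[State], State]        # performs the action
    checkpoint: Callable[[State], bool]  # validates progress before moving on


class CheckpointFailed(Exception):
    """Raised when a step's result does not pass its checkpoint."""

    def __init__(self, step_name: str, trace: list):
        super().__init__(f"checkpoint failed at step {step_name!r}")
        self.step_name = step_name
        self.trace = trace  # steps that completed before the failure


def run_workflow(steps: list, state: State) -> tuple:
    """Run steps in their fixed order and return (final_state, trace)."""
    trace = []
    for step in steps:
        state = step.run(dict(state))  # pass a copy so the caller's dict is untouched
        if not step.checkpoint(state):
            # No replanning: a rigid workflow stops here and hands off.
            raise CheckpointFailed(step.name, trace)
        trace.append(f"{step.name}: ok")
    return state, trace


# Hypothetical refund workflow: look up the order, then approve the refund.
refund_steps = [
    Step("lookup_order",
         run=lambda s: {**s, "order_total": 40.0},
         checkpoint=lambda s: "order_total" in s),
    Step("approve_refund",
         run=lambda s: {**s, "approved": s["amount"] <= s["order_total"]},
         checkpoint=lambda s: s["approved"]),
]

final_state, trace = run_workflow(refund_steps, {"amount": 25.0})
```

The trace gives the audit trail the paragraph describes. The raised exception marks the point where a human or a pre-written exception handler would have to take over, which is the flexibility cost of this design.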

Autonomous planning agents, in contrast, are designed to generate and sequence their own steps toward a goal. They interpret a broad objective, assess available capabilities, constraints, and context, and then formulate a plan to achieve the objective. This planning process can dynamically incorporate new data, re-evaluate assumptions, and re-prioritize actions as needed. The strength of autonomous agents lies in adaptability, exploration, and the potential to optimize for long-horizon outcomes. The downside is increased complexity, reduced predictability, and a heightened need for safeguards to prevent undesired or unsafe behaviors. Without careful constraints, autonomous agents may pursue subgoals or trajectories that diverge from the intended use or violate ethical guidelines.
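One way to picture "formulating a plan from capabilities and constraints" is a small search-based planner. The sketch below uses breadth-first forward search over sets of facts. That technique is chosen here for illustration only, and the article does not say which planning method any real agent uses. The `Action` type, the `plan` function, and the support-reply actions are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    requires: frozenset  # facts that must hold before the action can run
    adds: frozenset      # facts that hold after the action has run


def plan(initial: set, goal: set, actions: list) -> Optional[list]:
    """Breadth-first search over fact sets.

    Returns a shortest list of action names that reaches the goal,
    or None when the goal cannot be reached with the given actions.
    """
    start = frozenset(initial)
    target = frozenset(goal)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        facts, steps = queue.popleft()
        if target <= facts:  # every goal fact is satisfied
            return steps
        for action in actions:
            if action.requires <= facts:
                next_facts = facts | action.adds
                if next_facts not in seen:
                    seen.add(next_facts)
                    queue.append((next_facts, steps + [action.name]))
    return None


# Hypothetical capabilities for answering a support question.
ACTIONS = [
    Action("search_docs", frozenset(), frozenset({"sources"})),
    Action("draft_answer", frozenset({"sources"}), frozenset({"draft"})),
    Action("fact_check", frozenset({"sources", "draft"}), frozenset({"verified"})),
    Action("send_reply", frozenset({"verified"}), frozenset({"sent"})),
]

steps = plan(initial=set(), goal={"sent"}, actions=ACTIONS)
```

Nobody wrote the order of steps in advance: the planner derives it from the goal and each action's preconditions. The same mechanism is why such agents are harder to predict, since adding one new action can change the plan.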

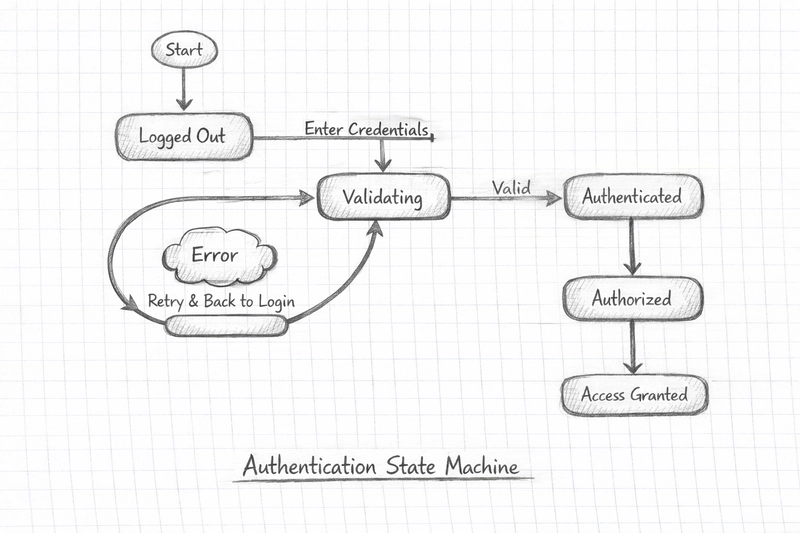

A practical way to think about these differences is to view the agent’s architecture as a decision-making backbone. In workflow-driven models, the backbone is a fixed choreography: actors (or subcomponents) perform predefined steps in a specified order. In autonomous agents, the backbone is a planner and a decision policy that continuously selects next actions based on current state, goals, and observed results. The interaction between perception, reasoning, and action becomes the essential loop: perceive the environment, reason about possible actions and their consequences, decide on a course of action, and execute. Feedback from the environment then informs future decisions, creating a closed loop that can adjust to changing circumstances.
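The perceive, reason, decide, and execute loop described above can be sketched in a few lines. This is a toy under stated assumptions: `ThermostatEnv`, `choose_action`, and `run_agent` are invented names, and the decision policy is a simple threshold rule standing in for whatever reasoning a real agent would do.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ThermostatEnv:
    """Hypothetical toy environment: a room whose temperature can be nudged."""
    temperature: int = 15

    def observe(self) -> int:
        return self.temperature

    def apply(self, action: str) -> None:
        if action == "heat":
            self.temperature += 2
        elif action == "cool":
            self.temperature -= 2


def choose_action(observed: int, goal: int, tolerance: int = 1) -> Optional[str]:
    """Decision policy: pick the next action from the current state and goal."""
    if abs(observed - goal) <= tolerance:
        return None  # close enough to the goal, nothing left to do
    return "heat" if observed < goal else "cool"


def run_agent(env: ThermostatEnv, goal: int, max_steps: int = 20) -> list:
    """Closed loop: each action changes what the next observation returns."""
    history = []
    for _ in range(max_steps):                  # step budget bounds the loop
        observed = env.observe()                # perceive
        action = choose_action(observed, goal)  # reason and decide
        if action is None:
            break
        env.apply(action)                       # act
        history.append((observed, action))
    return history


env = ThermostatEnv(temperature=15)
history = run_agent(env, goal=21)
```

The `max_steps` budget is a design choice worth keeping even in a toy: an agent that selects its own next action needs an explicit bound so that a bad policy cannot loop forever.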

Both approaches can be enhanced by modular design patterns. Common building blocks include:

- Perception modules: gather state information from the environment, sensor inputs, or user intent.

- Knowledge and reasoning modules: store world models, rules, and inferential capabilities; may employ probabilistic reasoning, planning algorithms, or decision trees.

- Action modules: interface with tools, systems, or actuators to carry out tasks.

- Monitoring and safety layers: oversee actions, enforce constraints, and detect anomalies or policy violations.

- Evaluation and learning components: assess performance, update models, and refine decision criteria over time.
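The building blocks listed above can be expressed as narrow interfaces that a single agent step wires together. The sketch below uses Python's `typing.Protocol` for the interfaces. The method names (`observe`, `propose`, `execute`, `permits`, `record`) and the stub classes are assumptions made for illustration, not an established agent API.

```python
from typing import Protocol


class Perception(Protocol):
    def observe(self) -> dict: ...


class Reasoner(Protocol):
    def propose(self, state: dict) -> str: ...


class Actuator(Protocol):
    def execute(self, action: str) -> str: ...


class SafetyLayer(Protocol):
    def permits(self, action: str) -> bool: ...


class Evaluator(Protocol):
    def record(self, action: str, outcome: str) -> None: ...


def agent_step(perception: Perception, reasoner: Reasoner, actuator: Actuator,
               safety: SafetyLayer, evaluator: Evaluator) -> str:
    """One pass through the modules: observe, propose, check, act, record."""
    state = perception.observe()
    action = reasoner.propose(state)
    # The safety layer sits between reasoning and action.
    outcome = actuator.execute(action) if safety.permits(action) else "blocked"
    evaluator.record(action, outcome)
    return outcome


# Hypothetical stub implementations for a support-ticket agent.
class TicketInbox:
    def observe(self) -> dict:
        return {"intent": "reset_password"}


class RuleReasoner:
    RULES = {"reset_password": "send_reset_link"}

    def propose(self, state: dict) -> str:
        return self.RULES.get(state["intent"], "escalate_to_human")


class ToolActuator:
    def execute(self, action: str) -> str:
        return f"done:{action}"


class AllowList:
    def __init__(self, allowed):
        self.allowed = set(allowed)

    def permits(self, action: str) -> bool:
        return action in self.allowed


class OutcomeLog:
    def __init__(self):
        self.entries = []

    def record(self, action: str, outcome: str) -> None:
        self.entries.append((action, outcome))


log = OutcomeLog()
outcome = agent_step(TicketInbox(), RuleReasoner(), ToolActuator(),
                     AllowList({"send_reset_link"}), log)
```

Because each module is an interface, the rule-based `RuleReasoner` could be swapped for a planner without touching the safety or evaluation code, which is the point of the modular pattern.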

A critical factor in real systems is how these components interact. The same agent may operate on a spectrum between deterministic workflows and autonomous planning. Some deployments adopt hybrid approaches, using strict workflows for routine tasks while enabling autonomous planning for exceptional or open-ended situations. Hybrid designs aim to balance reliability and flexibility, offering predictable behavior for core operations and adaptive problem-solving for complex, real-world challenges.
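A hybrid design can be as simple as a dispatcher that prefers a fixed workflow when one exists and only falls through to a planner otherwise. The sketch below is one possible arrangement. The task kinds, the `high_risk` flag, and the rule that unknown high-risk tasks go to a human are all assumptions, and `plan_autonomously` is a stand-in for a real planner.

```python
def reset_password_workflow(task: dict) -> str:
    return "workflow:reset_password"


def refund_workflow(task: dict) -> str:
    return "workflow:refund"


# Routine, well-defined task kinds each map to a fixed workflow.
WORKFLOWS = {
    "reset_password": reset_password_workflow,
    "refund": refund_workflow,
}


def plan_autonomously(task: dict) -> str:
    # Stand-in for an autonomous planner handling open-ended tasks.
    return f"planner:{task['kind']}"


def dispatch(task: dict) -> str:
    """Route a task: fixed workflow first, then a human, then the planner."""
    kind = task["kind"]
    if kind in WORKFLOWS:
        return WORKFLOWS[kind](task)   # predictable path for core operations
    if task.get("high_risk", False):
        return "escalated_to_human"    # assumed policy: no autonomy on risky unknowns
    return plan_autonomously(task)     # adaptive path for everything else


routine = dispatch({"kind": "reset_password"})
open_ended = dispatch({"kind": "investigate_outage"})
risky = dispatch({"kind": "delete_account", "high_risk": True})
```

The order of the checks encodes the trade-off: reliability is the default, and flexibility is granted only where no workflow applies and the risk is acceptable.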

The decision-making process is also influenced by constraints such as latency, resource limits, and user expectations. In time-sensitive settings, minimizing latency and maintaining transparency about the agent’s reasoning are common priorities. In safety-critical applications, explicit containment and governance mechanisms—like constraint checks, escalation paths, and audit logs—are essential to ensure safe and accountable operation.
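Constraint checks, escalation paths, and audit logs can live in one small guard that every proposed action passes through. The following is a sketch under assumptions: the `Guard` class, the three-way `Verdict`, and the spend limit as the escalation trigger are illustrative choices, not mechanisms named in the article.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # hand the decision to a human
    DENY = "deny"


@dataclass
class Guard:
    allowed_tools: frozenset
    spend_limit: float
    audit_log: list = field(default_factory=list)

    def check(self, tool: str, cost: float) -> Verdict:
        """Decide on a proposed action and record the decision."""
        if tool not in self.allowed_tools:
            verdict = Verdict.DENY       # hard constraint: tool is not permitted
        elif cost > self.spend_limit:
            verdict = Verdict.ESCALATE   # permitted tool, but above the limit
        else:
            verdict = Verdict.ALLOW
        # Every decision is logged, including the ones that were allowed.
        self.audit_log.append({"tool": tool, "cost": cost, "verdict": verdict.value})
        return verdict


guard = Guard(allowed_tools=frozenset({"send_email", "issue_refund"}), spend_limit=50.0)
small_refund = guard.check("issue_refund", 20.0)
large_refund = guard.check("issue_refund", 500.0)
forbidden = guard.check("drop_database", 0.0)
```

Logging allowed actions as well as blocked ones matters for accountability: an audit needs the full decision history, not just the refusals.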

A common pitfall in both paradigms is over-reliance on the perceived intelligence of the model without sufficient attention to governance, evaluation, and risk mitigation. Even the most advanced language models can generate plausible but incorrect outputs, hallucinate information, or fail to consider secondary consequences. Therefore, effective AI agents combine robust decision-making structures with continuous monitoring, testing, and human oversight where appropriate.

From a systems perspective, the composition of an agent’s decision loop determines its behavior under uncertainty. In a strict workflow, uncertainty is often mitigated by reliance on human-in-the-loop checks, fixed contingencies, and predefined exception handling. In autonomous planning, uncertainty is addressed through probabilistic reasoning, plan revision, and fallback strategies. The design choice affects not only performance metrics but also the level of interpretability and trust that users place in the system.
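Plan revision with a fallback can be sketched as a bounded retry loop around a planner. The code below covers only those two strategies and leaves probabilistic reasoning out. The function names, the `max_revisions` parameter, and the report-fetching example are hypothetical.

```python
def execute_with_replanning(goal, make_plan, run_step, max_revisions=2):
    """Try a plan; on a failed step, ask for a revised plan that avoids it.

    make_plan(goal, failed) returns a list of step names.
    run_step(step) returns True on success and False on failure.
    """
    failed = set()
    for revision in range(max_revisions + 1):
        plan = make_plan(goal, failed)
        for step in plan:
            if not run_step(step):
                failed.add(step)  # remember what did not work, then replan
                break
        else:
            # The inner loop finished without a break: every step succeeded.
            return {"status": "done", "plan": plan, "revisions": revision}
    # Revision budget exhausted: fall back to a human operator.
    return {"status": "fallback_to_human", "failed_steps": sorted(failed)}


def make_report_plan(goal, failed):
    """Hypothetical planner: prefer the live API, otherwise read the cache."""
    source = "read_cache" if "query_api" in failed else "query_api"
    return [source, "summarize"]


def api_is_down(step):
    """Hypothetical executor in which only the live API call fails."""
    return step != "query_api"


result = execute_with_replanning("fetch_report", make_report_plan, api_is_down)
```

The revision budget is what separates this from an unbounded retry: once it is spent, the agent stops improvising and escalates, which keeps its behavior under uncertainty predictable.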

*Image source: Unsplash*

Finally, deployment considerations matter. Agents must be built with transparent interfaces and clear expectations about what they can and cannot do. Documentation should articulate the decision logic, the sequence of actions for typical tasks, and the safeguards that govern unexpected situations. Continuous monitoring and evaluation—through metrics like task success rate, deviation from plan, and incident counts—are essential to maintain reliability as the system evolves.
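The three metrics named above can be computed from simple run records. The sketch below assumes one particular definition of "deviation from plan" (the fraction of step positions where execution differed from the plan); the article does not define the metric, so treat that definition, the `RunRecord` type, and the sample data as illustrative.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    succeeded: bool
    planned_steps: list
    executed_steps: list
    incidents: int = 0


def plan_deviation(record: RunRecord) -> float:
    """Fraction of step positions where execution differed from the plan.

    Assumed definition: positions are compared pairwise, and any extra
    or missing steps count as differences.
    """
    length = max(len(record.planned_steps), len(record.executed_steps))
    if length == 0:
        return 0.0
    matches = sum(p == e for p, e in zip(record.planned_steps, record.executed_steps))
    return 1 - matches / length


def summarize(records: list) -> dict:
    """Aggregate success rate, mean plan deviation, and total incidents."""
    if not records:
        raise ValueError("need at least one run record")
    n = len(records)
    return {
        "task_success_rate": sum(r.succeeded for r in records) / n,
        "mean_plan_deviation": sum(plan_deviation(r) for r in records) / n,
        "incident_count": sum(r.incidents for r in records),
    }


# Hypothetical sample: one clean run and one run that went off-plan.
records = [
    RunRecord(True, ["a", "b", "c", "d"], ["a", "b", "c", "d"]),
    RunRecord(False, ["a", "b", "c", "d"], ["a", "b", "x", "d"], incidents=1),
]
report = summarize(records)
```

Tracking deviation alongside success rate is useful because an agent can succeed while drifting far from its plan, and that drift is often the earlier warning sign.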

Perspectives and Impact

The distinction between workflow-driven and autonomous agents has broad implications for industry, research, and policy. For organizations, the choice affects deployment speed, maintainability, and risk exposure. Workflow-driven agents can be rolled out quickly in stable environments where tasks are well-defined and change is infrequent. They benefit from easier validation, traceability, and governance, which can simplify regulatory compliance and audits. However, rigid workflows may hinder adaptation to evolving needs or unforeseen scenarios, potentially limiting long-term value.

Autonomous planning agents promise greater adaptability and potential productivity gains in dynamic settings. They can optimize for efficiency, discover novel approaches, and learn from feedback. Yet their complexity demands robust safety frameworks, rigorous evaluation, and clear escalation paths. Without these safeguards, the same autonomy that yields benefits can also introduce risks such as goal drift, unintended side effects, or violations of constraints.

From a research perspective, the ongoing challenge is to design agent architectures that combine the strengths of both approaches. This includes hybrid systems that maintain strict oversight for critical functions while enabling autonomous reasoning where appropriate. Advances in explainability, verifiability, and controllable autonomy are particularly relevant, as they help bridge the gap between performance and accountability. In education and workforce development, there is a need to prepare practitioners to reason about decision chains, not just outcomes or toolkits. Understanding how actions are sequenced and why they are chosen is essential for responsible design, deployment, and governance of AI agents.

Policy and governance considerations are equally important. Regulators and organizations must define clear norms around safety, privacy, accountability, and transparency for AI agents. This includes establishing standards for auditability, risk assessment, and containment strategies that apply across different architectures. The goal is to enable innovation while maintaining trust and safeguarding stakeholders from harm or unintended consequences.

In practical applications across sectors—customer support, operations, research assistance, autonomous systems, and more—the choice between workflow rigidity and autonomous planning will continue to shape outcomes. The most effective deployments are likely to be those that explicitly address how decisions are made, how actions are ordered, and how safety and accountability are maintained throughout the agent’s lifecycle.

Looking ahead, a key trend is the emergence of more sophisticated governance tools that sit above or alongside agent planners. These tools help organizations specify acceptable goals, constrain permissible actions, and monitor real-time behavior. As agents become more integrated into complex workflows, the ability to reason about, audit, and adjust their decision-making processes will be as important as the agents’ raw capabilities.

Key Takeaways

Main Points:

– The core distinction between AI agents lies in how decisions are made and how actions are ordered.

– Workflow-driven agents emphasize predictability and control, while autonomous planning agents emphasize adaptability and goal-direction.

– Hybrid designs can harness the benefits of both modalities, balancing reliability with flexibility.

Areas of Concern:

– Autonomy introduces complexity, risk of goal drift, and potential safety concerns without proper safeguards.

– Ensuring transparency, auditability, and governance is essential for responsible deployment.

– Over-reliance on model capability without robust decision frameworks can lead to unsafe or suboptimal outcomes.

Summary and Recommendations

AI agents function as decision-making machines that translate goals into a sequence of actions. Understanding whether an agent is following a strict workflow or autonomously planning steps toward a goal is fundamental to predicting its behavior, assessing risk, and designing appropriate governance. Organizations should evaluate agent deployments not solely by model performance or impressive capabilities, but by examining the decision chains, the ordering of actions, and the safeguards that ensure safe, accountable operation.

For practical deployment, consider adopting a hybrid architecture that preserves necessary control for high-risk tasks while allowing autonomous reasoning in less critical areas. Implement robust safety layers, explicit escalation pathways, and continuous monitoring to detect deviations from desired behavior. Invest in explainability and auditability so operators can understand and challenge the agent’s decisions when needed. Finally, align agent design with organizational goals, regulatory requirements, and ethical considerations to maximize value while maintaining trust.

References

- Original: https://dev.to/jefreesujit/from-workflows-to-autonomous-agents-how-ai-agents-actually-work-4nlp

- Additional references:

- OpenAI safety and governance guidelines for autonomous systems

- Standards for AI explainability and auditing (NIST AI RMF or equivalent)

- Research on hybrid human-in-the-loop and hybrid autonomy architectures
