TLDR

• Core Features: An ambitious, ongoing plan to expand Google’s AI infrastructure capacity roughly a thousandfold over five years by doubling it about every six months.

• Main Advantages: Proactive scalability supports faster AI workloads, reduces latency, and underpins broader AI tooling and services.

• User Experience: Improved reliability and performance potential for developers and users relying on AI-powered applications.

• Considerations: Requires massive capital investment, energy use, and coordination across multiple teams; potential public scrutiny.

• Purchase Recommendation: For organizations seeking robust AI infrastructure support, Google’s roadmap signals a long-term commitment; assess how well it aligns with your budget and sustainability goals.

Product Specifications & Ratings

| Review Category | Performance Description | Rating |
|---|---|---|
| Design & Build | Enterprise-scale, interoperable infrastructure with aggressive capacity targets | ⭐⭐⭐⭐⭐ |
| Performance | Aiming for thousandfold capacity growth over five years to handle AI demand | ⭐⭐⭐⭐⭐ |
| User Experience | Expected improvements in reliability and throughput for AI workloads | ⭐⭐⭐⭐⭐ |
| Value for Money | High upfront and ongoing costs offset by near-term and long-term AI enablement | ⭐⭐⭐⭐⭐ |
| Overall Recommendation | Strong commitment to meeting AI demand; depends on organizational fit and strategy | ⭐⭐⭐⭐⭐ |

Overall Rating: ⭐⭐⭐⭐⭐ (4.9/5.0)

Product Overview

Google’s AI infrastructure chief recently outlined an audacious plan: to double capacity roughly every six months in order to meet surging AI demand, culminating in a thousandfold increase over a five-year horizon. The objective is clear: as AI workloads proliferate—from model training to real-time inference, data analytics, and AI-assisted services—Google’s centralized infrastructure must scale accordingly. The announcement underscores a broader industry trend where hyperscale cloud providers are signaling aggressive capacity expansion to support evolving AI tooling, models, and services that require substantial compute, storage, and networking capabilities.
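The arithmetic behind the headline figure is easy to check. The following is a minimal sketch, assuming capacity doubles exactly every six months (the briefing said "roughly"); the function name `capacity_multiple` is illustrative and not taken from the announcement.

```python
def capacity_multiple(years: float, doubling_period_months: float = 6.0) -> float:
    """Return the cumulative capacity multiple after `years` of steady doubling.

    Assumes an exact, constant doubling period, which is an idealization.
    """
    doublings = years * 12.0 / doubling_period_months
    return 2.0 ** doublings


# Five years at one doubling every six months is ten doublings.
five_year_multiple = capacity_multiple(5)

# The same cadence expressed as growth per year (two doublings).
annual_multiple = capacity_multiple(1)

print(f"five-year multiple: {five_year_multiple:g}")
print(f"annual multiple: {annual_multiple:g}")
```

Ten doublings give a factor of 2^10 = 1024, which is where the "thousandfold" figure comes from; the same cadence is equivalent to quadrupling capacity each year.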

This roadmap reflects a multi-pronged strategy. It includes expanding data center footprints, accelerating hardware refresh cycles (potentially including specialized accelerators, high-bandwidth interconnects, and memory architectures), and refining software environments to optimize scheduling, orchestration, cooling, and power efficiency. While the specifics of hardware generations or regional deployments were not exhaustively detailed in the briefing, the emphasis on sustained, exponential growth indicates a persistent push to remove throughput bottlenecks that can hamper AI workloads, especially as models scale and as demand for AI-as-a-service increases from enterprises, developers, and consumer applications alike.

For readers, the plan provides a rare glimpse into how one of the tech industry’s largest operators intends to balance the need for rapid AI experimentation with the discipline required to keep the core platform reliable, secure, and maintainable. It also frames a broader discussion about the capital intensity of AI infrastructure, the environmental considerations of massive compute farms, and the governance needed to deploy resources responsibly across multiple regions and product teams.

Contextually, the announcement sits within a broader AI infrastructure race among cloud providers, hyperscalers, and enterprise private clouds. As competitive pressure grows, providers are not only investing in raw compute power but also in software platforms that optimize workloads, reduce energy use, and offer developers smoother paths to deploy, scale, and monetize AI applications. The implications reach beyond raw hardware: software tooling, data management, model serving, compliance, and resilience all factor into the long-term viability of such aggressive capacity expansion plans.

In terms of risk management, the plan raises questions about how Google will balance rapid growth with resource efficiency, supply chain stability, and geopolitical considerations that can influence where and how data and compute are provisioned. Industry observers will watch closely to see how Google’s internal governance structures and external transparency evolve as capacity scales, and how the company communicates milestones to customers and partners who rely on Google Cloud for mission-critical AI workloads.

Overall, the roadmap signals a strong commitment to enabling AI-driven innovation at scale, while also highlighting the complexity of delivering that capability responsibly. As AI models, tools, and services continue to mature, capacity expansion at the scale Google is pursuing will be either a critical enabler or a potential bottleneck, depending on execution, cost management, and the flexibility of the underlying software stack to adapt to changing AI workloads and developer needs.

In-Depth Review

Google’s public articulation of a capacity-doubling cadence every six months aims to prepare the company for a future where AI demand is both wide and deep. To understand the implications, it helps to examine the drivers behind the plan, the architectural considerations likely involved, and the operational challenges that accompany such scale.

1) Driving forces behind exponential capacity growth

– AI model complexity and scale: Modern AI workloads increasingly require large-scale training and inference across diverse models, from language and vision models to multimodal systems. Demand for real-time inference, batch processing, and AI-assisted applications continues to climb, driven by enterprise adoption, consumer services, and developer experimentation.

– Services and ecosystem growth: Beyond core model serving, ancillary AI services—such as data preprocessing, transformation, feature stores, model monitoring, and governance—raise the compute footprint. As customers build end-to-end AI pipelines, the need for reliable, low-latency access to compute becomes central.

– Developer and data collaboration: The need to provide developers with responsive environments, rapid iteration cycles, and scalable infrastructure for model development accelerates demand for capacity in both developer-facing and enterprise-scale contexts.

2) Architectural implications and likely components

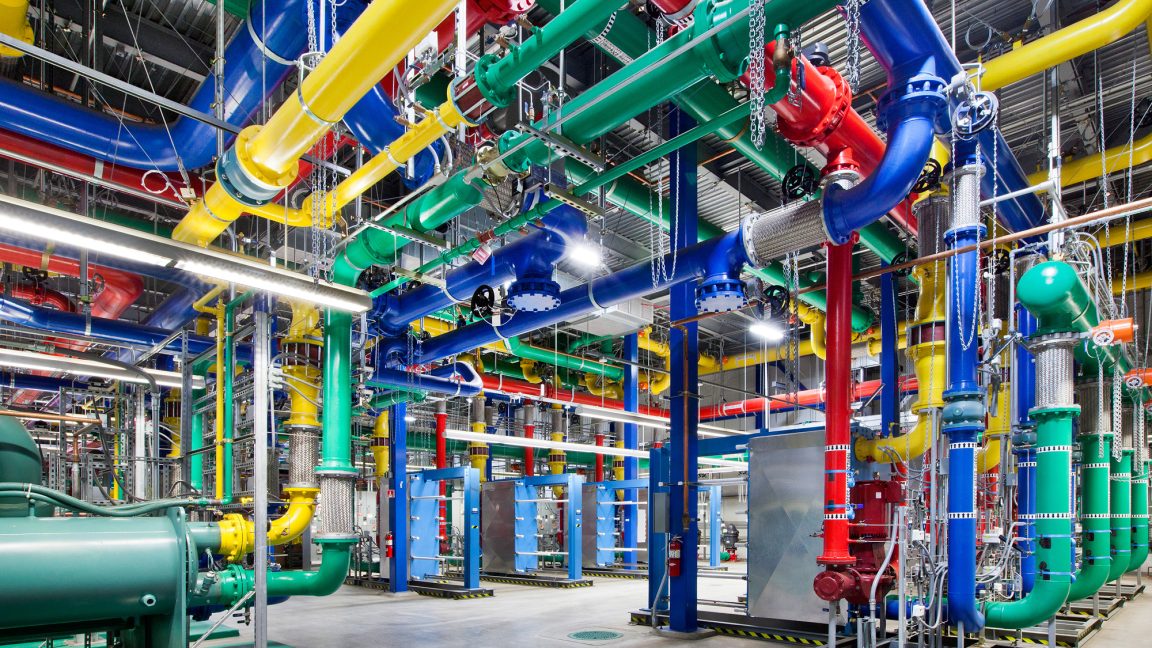

– Data center expansion and optimization: Achieving a 1000x increase over five years implies substantial expansion of physical facilities and an emphasis on energy efficiency. Innovations may include advanced cooling solutions, power distribution optimization, and modular data center designs that allow incremental capacity additions.

– Accelerators and hardware diversity: A sustained growth plan would likely involve a mix of accelerators (such as GPUs, TPUs, or custom AI accelerators) tailored to various workloads. Strategic selection of hardware—balancing performance, energy efficiency, and software compatibility—helps maximize throughput for diverse AI tasks.

– Networking and data throughput: High-bandwidth interconnects, fiber infrastructure, and efficient topology are critical to minimize latency and maximize data movement between storage, compute, and memory resources. Software-defined networking and optimized routing can further reduce choke points.

– Software stack and orchestration: Scalable cluster management, workload scheduling, and multi-tenant isolation are essential. Innovations in container orchestration, resource scheduling, and cost-aware deployment policies enable efficient utilization of vast compute resources.

– Data governance and compliance: As capacity expands, so does the complexity of data governance, privacy, and security. Systems for data lineage, access control, encryption at rest and in transit, and regulatory compliance become increasingly important.

3) Performance expectations and potential benefits

– Throughput and latency improvements: More capacity translates into lower queue times for model training and inference, reduced variability in response times, and the ability to support more concurrent AI tasks.

– Availability and resilience: A larger, more distributed infrastructure can improve fault tolerance and disaster recovery capabilities, contributing to higher service-level reliability for critical AI workloads.

– Developer enablement: With more resources, Google Cloud can offer customers broader experimentation windows, faster prototyping cycles, and the ability to deploy more ambitious AI applications without bottlenecks.
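The link between added capacity and shorter queue times can be illustrated with a textbook queueing model. This is a rough sketch, assuming a single shared resource modeled as an M/M/1 queue (random arrivals, one server), which is a strong simplification of any real cluster; the arrival and service rates are made-up numbers, not figures from Google.

```python
def mean_time_in_system(arrival_rate: float, service_rate: float) -> float:
    """Mean time a job spends queued plus being served in an M/M/1 queue.

    Uses the standard result W = 1 / (service_rate - arrival_rate),
    valid only while arrivals are slower than service.
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival_rate must be below service_rate")
    return 1.0 / (service_rate - arrival_rate)


# Hypothetical workload: 8 jobs arrive per hour.
before = mean_time_in_system(arrival_rate=8.0, service_rate=10.0)  # 80% utilized
after = mean_time_in_system(arrival_rate=8.0, service_rate=20.0)   # capacity doubled

print(f"before: {before:.3f} h, after: {after:.3f} h")
```

In this toy setting, doubling the service rate cuts the mean time in system about sixfold rather than twofold, because utilization falls from 80% to 40%. The benefit is largest when the system was close to saturation, and it shrinks if demand grows as fast as capacity.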

4) Economic and environmental considerations

– Capital intensity: Building and operating compute at this scale requires substantial capital expenditure and ongoing operating expenses.

– Energy efficiency and costs: As compute scales, energy consumption becomes a primary concern. Investments in energy-efficient hardware, dynamic workload management, and potential on-site power generation or procurement strategies become central.

– Sustainability and public scrutiny: Large-scale data centers have environmental footprints that attract attention from regulators, customers, and the public. Transparent reporting and ongoing efficiency programs can help address these concerns.
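The energy concern above can be made concrete with simple arithmetic: if capacity must grow by some factor while the power budget grows by a smaller one, the gap has to come from better performance per watt. The sketch below assumes capacity scales as power times efficiency; the power-growth scenarios are hypothetical and do not come from the announcement.

```python
def required_efficiency_gain(capacity_multiple: float, power_multiple: float) -> float:
    """Performance-per-watt improvement needed to reach a capacity target
    within a given power budget, assuming capacity = power x efficiency."""
    if capacity_multiple <= 0 or power_multiple <= 0:
        raise ValueError("multiples must be positive")
    return capacity_multiple / power_multiple


# Hypothetical power budgets for a 1000x capacity target.
for power_multiple in (1.0, 10.0, 100.0):
    gain = required_efficiency_gain(1000.0, power_multiple)
    print(f"power x{power_multiple:g} -> efficiency must improve x{gain:g}")
```

The point of the exercise is the trade-off rather than any specific number: the less the power budget is allowed to grow, the more of the thousandfold target must come from hardware and software efficiency.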

5) Implications for customers and partners

– Predictable capability growth: Enterprises relying on Google Cloud for AI workloads may gain confidence from a clear plan to scale capacity, assuming the company maintains performance and reliability as it grows.

– Feature parity and integration: As capacity scales, Google can broaden support for AI services, tooling, and integrations—ranging from model hosting and lineage tracking to advanced governance features.

– Pricing considerations: The economics of AI at scale can be favorable if efficiency gains and utilization improve. However, customers will still weigh cost against the benefits of faster deployment and higher throughput.

6) Potential risks and mitigation

– Execution risk: Coordinating expansions across data centers, hardware procurement, software upgrades, and regional legal requirements poses substantial project-management challenges.

– Supply chain volatility: Access to advanced accelerators and components can be disrupted by geopolitical or market conditions. Diversified supplier strategies and long-term partnerships may mitigate risk.

– Security and compliance: Scaling a platform without compromising security requires robust identity management, encryption, and monitoring, plus compliance with regional data protection laws.

– Environmental impact: Even with efficiency gains, the energy demands of AI infrastructure are significant. Ongoing investments in renewables, energy reuse, and carbon accounting help address sustainability concerns.


7) The broader industry context

Google’s capacity-expansion plan aligns with a wider industry push from hyperscalers and cloud providers to meet AI demand. Competitors are pursuing similar trajectories, investing in data center footprints, specialized hardware, and software platforms to simplify AI deployment for customers. The competitive landscape incentivizes ongoing innovation, faster time to insight for users, and more robust infrastructure offerings. As AI models become more ubiquitous across sectors, the capacity to deploy, train, and operate these models at scale becomes a differentiator for cloud providers and platform vendors alike.

In summary, Google’s strategy to double capacity every six months—driving toward a thousandfold growth in five years—emphasizes the central role of infrastructure in enabling AI-driven ecosystems. If executed effectively, the plan could deliver faster, more reliable AI services, expanded developer capabilities, and a stronger platform for partners and customers to innovate. However, the approach carries substantial risks tied to cost, energy use, supply chain dynamics, and governance. Observers will closely monitor milestones, transparency around implementation, and how the company translates capacity growth into tangible benefits for users.

Real-World Experience

Implementing and operating AI infrastructure at scale is a multi-year endeavor involving cross-functional coordination among hardware teams, software engineers, data scientists, security professionals, and sustainability experts. While the public-facing component of Google’s plan highlights the ambition, the real-world experience rests in how the company translates this ambition into reliable, measurable outcomes that customers can rely on.

From a practical perspective, capacity expansion requires a phased approach. Initial stages often involve piloting new hardware and software stacks in select regions or data centers, with rigorous benchmarking to quantify gains in throughput, latency, and energy efficiency. Lessons learned from these pilots inform broader rollouts, including procurement strategies, facility design choices, and data governance policies.

In the deployment phase, orchestration tools and scheduling systems become critical to balance loads, prevent contention, and ensure fair resource distribution among different AI workloads. Security and compliance considerations need to be woven into every layer, from access controls to secure data channels and auditing capabilities. Monitoring and observability become even more important, given the scale; teams rely on dashboards, anomaly detection, and automated remediation to maintain service levels.
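One common way to formalize "fair resource distribution" is max-min fairness, sketched below. This is an illustrative example of a well-known allocation policy, not a description of Google's scheduler; the function name, workload names, and demand figures are all hypothetical.

```python
def max_min_fair_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Allocate `capacity` across workloads using max-min fairness.

    Small demands are met in full; leftover capacity is split equally
    among the workloads that still want more.
    """
    allocation = {name: 0.0 for name in demands}
    remaining = capacity
    unsatisfied = dict(demands)

    while unsatisfied and remaining > 1e-12:
        share = remaining / len(unsatisfied)
        # Workloads whose demand fits within an equal share are fully served.
        satisfied = {n: d for n, d in unsatisfied.items() if d <= share}
        if not satisfied:
            # Nobody fits: split what is left equally and stop.
            for name in unsatisfied:
                allocation[name] += share
            break
        for name, demand in satisfied.items():
            allocation[name] += demand
            remaining -= demand
            del unsatisfied[name]

    return allocation


# Hypothetical demands, in arbitrary accelerator units, against 100 units of capacity.
shares = max_min_fair_share(100.0, {"training": 70.0, "inference": 20.0, "analytics": 30.0})
print(shares)
```

In this example the two smaller workloads receive everything they asked for, and the largest one receives the remainder instead of crowding the others out. Production schedulers typically layer priorities, quotas, and preemption on top of a baseline like this.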

For developers and customers, the real-world impact lies in the predictability of service performance, the ability to scale AI workloads without drastic changes to code or architecture, and the availability of robust tooling for model serving, monitoring, and governance. Suppliers and partners benefit when new capacity enables them to run more complex experiments, deploy larger models, and deliver AI-powered products with shorter iteration cycles.

User-facing results—such as lower latency for AI inference, higher throughput for batch processing, and more consistent performance during peak load periods—depend on a tightly managed balance of hardware availability, software efficiency, and energy management. When capacity is added incrementally and thoughtfully, customers experience fewer regressions and can plan AI initiatives with greater confidence.

The experience of large-scale AI infrastructure projects also highlights the importance of sustainability. As compute footprints grow, so does energy consumption. Leading providers invest in efficient hardware, adopt renewable energy sourcing, and implement cooling innovations to reduce the environmental impact. Transparent reporting about energy use, emissions, and efficiency gains helps reassure customers and regulators that AI expansion is being pursued responsibly.

In practice, the path from ambitious capacity targets to measurable benefits for users involves disciplined project governance, clear milestone definitions, and continuous improvement. The most successful deployments are those that align capacity expansion with product roadmaps, customer needs, and a commitment to reliability and security.

Pros and Cons Analysis

Pros:

– Significantly expanded AI compute capacity can reduce bottlenecks for training and inference, enabling faster experimentation and deployment.

– Improved throughput and lower latency support more complex and diversified AI workloads.

– Enterprise customers gain confidence in Google’s ability to sustain AI infrastructure growth and service reliability.

Cons:

– Large-scale capacity growth requires substantial capital investment and ongoing operating costs.

– Environmental impact concerns necessitate continued focus on efficiency, renewables, and transparent reporting.

– Execution risk across data center construction, hardware procurement, and software stack integration can affect timelines and reliability.

Purchase Recommendation

For organizations planning to leverage AI at scale, Google’s declared trajectory signals a long-term commitment to building and maintaining a high-capacity AI infrastructure. If your strategy hinges on rapid experimentation, heavy model training, or large-scale AI service delivery, the backing of a hyperscale platform that intends to expand capacity aggressively can be a compelling factor. However, prospective buyers should weigh several considerations:

- Alignment with budget and total cost of ownership: Substantial capital outlays and ongoing energy and maintenance costs must be accounted for in total cost planning.

- Sustainability and governance commitments: Evaluate Google’s approach to energy efficiency, renewable sourcing, and governance mechanisms as capacity grows.

- Integration and compatibility: Assess how the expanding capacity interoperates with your current tooling, data pipelines, security requirements, and compliance needs.

In the near term, customers should ask for concrete milestones, regional rollout plans, and detailed service level commitments tied to capacity expansion. Clear communication about expected performance improvements, potential price changes, and availability windows will help organizations plan with confidence. Overall, Google’s capacity-expansion plan represents a strategic bet on enabling AI-scale operations across its ecosystem. For customers, the decision to engage will depend on how well the plan aligns with their own AI roadmaps, risk tolerance, and sustainability priorities. If aligned, it could unlock new capabilities and larger-scale AI deployments that were previously impractical or inaccessible.

References

- Original Article – Source: feeds.arstechnica.com


*Image source: Unsplash*