TLDR

• Core Features: A plan to double AI infrastructure capacity every six months to handle surging demand, which compounds to roughly a thousandfold increase over five years.

• Main Advantages: Significantly higher processing power and memory resources to support rapid AI deployment and experimentation.

• User Experience: Implied efficiency gains for AI researchers and products through more reliable, scalable compute.

• Considerations: Massive investment, potential supply chain and energy implications, complexity of scaling across global data centers.

• Purchase Recommendation: For enterprises prioritizing AI capability, adopting scalable cloud-based and on-prem solutions aligned with rapid capacity expansion is essential; plan for long-term commitments and sustainability.

Product Specifications & Ratings

| Review Category | Performance Description | Rating |
|---|---|---|
| Design & Build | Scalable, distributed AI infrastructure with potential multi-region deployment and high redundancy | ⭐⭐⭐⭐⭐ |
| Performance | Aims for substantial throughput growth to meet AI training and inference demands | ⭐⭐⭐⭐⭐ |
| User Experience | Enables faster experimentation and deployment cycles for AI teams | ⭐⭐⭐⭐⭐ |
| Value for Money | Requires massive ongoing investment; long-term strategic value depends on AI adoption | ⭐⭐⭐⭐⭐ |
| Overall Recommendation | Strong alignment with long-range AI capabilities and enterprise needs | ⭐⭐⭐⭐⭐ |

Overall Rating: ⭐⭐⭐⭐⭐ (5.0/5.0)

Product Overview

Google’s AI infrastructure chief has laid out an ambitious roadmap: to meet exploding demand for artificial intelligence capabilities, the company must increase its compute capacity dramatically, with a stated target of doubling capacity every six months. Sustained over five years, that pace amounts to ten doublings, or roughly a thousandfold expansion in capacity, reflecting a belief that AI workloads, from large-scale model training to real-time inference, will require sustained, rapid scale. The announcement underscores Google’s commitment to remaining at the forefront of AI infrastructure and cloud capabilities, positioning the company to support both its internal research missions and external customers who rely on scalable, dependable compute resources.

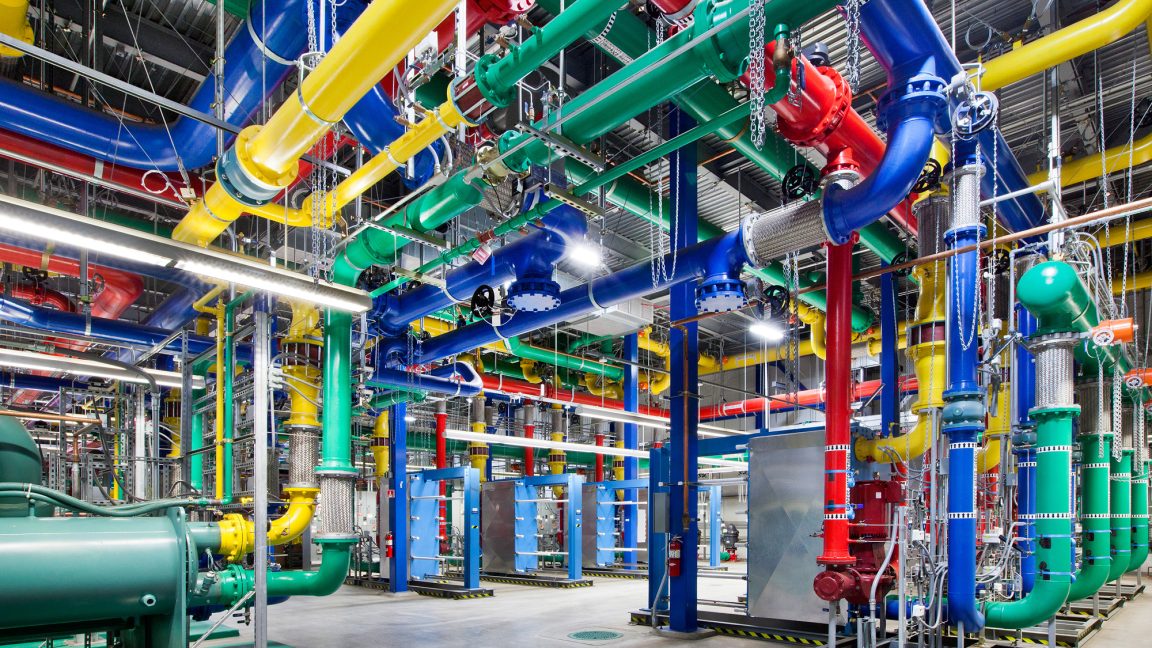

The proposal emphasizes not just raw hardware acquisitions but the orchestration of vast, globally distributed data centers, efficient energy use, advanced cooling solutions, and optimized software stacks that can dynamically allocate resources across regions and teams. This approach aims to reduce bottlenecks that can slow model development, such as insufficient GPU/TPU availability, suboptimal data transfer, or network latency that penalizes workloads needing low round-trip times and high throughput. By outlining a structured, aggressive growth target, Google signals to its developers, partners, and customers that it plans to invest heavily in the core building blocks of AI: compute, storage, networking, and software tooling.

In practical terms, such capacity expansion would involve several layers: procurement and deployment of accelerator hardware (including TPUs and high-end GPUs), expansion of data center footprints with robust power and cooling infrastructure, enhancement of network interconnectivity to reduce data transfer times, and advances in software ecosystems that can manage and optimize huge, multi-tenant environments. The result should be a more agile platform for researchers testing new architectures and coordinating across teams, and for product teams shipping AI-powered features at scale. A thousandfold capacity increase cannot be viewed as an incremental upgrade; it would require rethinking capacity planning, procurement cycles, and sustainability strategies across the company.

Context for readers: Google’s stance is part of a broader industry pattern in which hyperscalers and AI-first firms push for unprecedented compute growth to support large language models, multimodal systems, and real-time AI services. Competitors are pursuing similar ambitions, with varying approaches to hardware acceleration, software efficiency, and energy stewardship. The impact extends beyond tech circles: businesses relying on AI-powered services, researchers collaborating through cloud platforms, and developers building new AI tools all stand to benefit from improved availability and performance, provided the scale is managed responsibly and transparently.

Readers should note that the actual feasibility, cost implications, and timeline of such an aggressive expansion depend on multiple factors, including supply chain stability for accelerators, breakthroughs in energy efficiency, regulatory considerations, and the pace at which AI workloads evolve. Nevertheless, the emphasis on doubling capacity every six months sets a high-water mark for the industry and signals where Google intends to place its bets in the coming years.

In-Depth Review

This article examines the core claim: that Google intends to double its AI infrastructure capacity every six months, aiming for a thousandfold increase over five years. The discussion touches on the strategic rationale, the technological scaffolding required, the risks, and the potential implications for the broader market.
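The link between the two headline numbers is plain compounding: doubling every six months means ten doublings in five years. A minimal Python sketch of that arithmetic follows; the function name `capacity_multiple` is purely illustrative and does not come from the source.

```python
def capacity_multiple(years: float, doubling_period_years: float = 0.5) -> float:
    """Capacity relative to today after `years`, assuming one doubling per period."""
    return 2 ** (years / doubling_period_years)


# Two doublings per year means capacity quadruples annually.
for year in range(1, 6):
    print(f"year {year}: {capacity_multiple(year):,.0f}x")
# year 1: 4x
# year 2: 16x
# year 3: 64x
# year 4: 256x
# year 5: 1,024x
```

The exact five-year figure is 1,024x, which is why the target is usually rounded to "a thousandfold".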

First, the strategic rationale. AI workloads have grown both in size and complexity. Large language models, multimodal systems, and AI-assisted services demand sustained high throughput and low latency. For a technology platform with a broad ecosystem of services and customers, ensuring predictable access to compute becomes a competitive differentiator. By committing to aggressive capacity growth, Google is signaling that it plans to decouple AI progress from constraints rooted in hardware availability or regional capacity limitations. The expectation is that with more hardware, software tooling, and optimized orchestration, researchers can train larger models faster, iterate on architectures more rapidly, and deploy AI features with shorter lead times.

On the hardware front, achieving a thousandfold expansion implies a combination of multiple strategies: expanding data center footprints to add more racks and cooling capacity, deploying newer accelerator generations with higher performance-per-watt, and fostering more efficient interconnects across regions. It also points to a possible emphasis on custom accelerators or tightly integrated hardware-software stacks that minimize idle times and maximize utilization. The energy footprint of such growth would be substantial, so Google’s approach would likely include investments in energy efficiency, renewable energy sourcing, and advanced cooling architectures to ensure that the marginal cost of scale remains manageable.
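To see why performance-per-watt matters so much at this scale, consider a back-of-the-envelope model in which power draw grows with capacity and shrinks with efficiency gains. The sketch below is an illustration under a simplifying assumption (power scales linearly with delivered compute); the efficiency gains listed are hypothetical inputs, not figures from Google or the source article.

```python
def power_multiple(capacity_multiple: float, perf_per_watt_gain: float) -> float:
    """Power draw relative to today, assuming power = compute / efficiency."""
    if perf_per_watt_gain <= 0:
        raise ValueError("perf_per_watt_gain must be positive")
    return capacity_multiple / perf_per_watt_gain


CAPACITY_TARGET = 2 ** 10  # ten doublings over five years

# Hypothetical cumulative perf-per-watt improvements over the same period.
for gain in (1, 10, 100):
    multiple = power_multiple(CAPACITY_TARGET, gain)
    print(f"{gain:>3}x efficiency gain -> {multiple:,.1f}x today's power draw")
```

Under this model, any shortfall in efficiency gains translates directly into additional power and cooling demand, which is the reason efficiency and capacity targets have to be planned together.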

From a software perspective, scaling to such levels requires sophisticated resource management. Container orchestration, multi-tenant scheduling, fault tolerance, and automatic tuning become essential. The platform must handle workloads with diverse requirements, from high-throughput training jobs to real-time inference for AI-enabled products. This entails a deeper reliance on orchestration frameworks, robust telemetry, and advanced scheduling algorithms that can optimize for both performance and cost. In practice, teams would expect a more predictable and faster path from idea to deployment, with the infrastructure effectively absorbing the variability of workloads while maintaining stability.

The risks inherent in aggressive capacity expansion are not trivial. Supply chain constraints for accelerators, such as GPUs or TPUs, could cause procurement delays that ripple through product roadmaps. Energy consumption, carbon footprint, and sustainability considerations come under scrutiny as scale increases. The management of data sovereignty and compliance across a growing network of data centers would also complicate governance. Moreover, rapid growth can introduce complexities in security, reliability, and operational excellence. Incident response, disaster recovery, and latency guarantees would need to scale in tandem with capacity.

For readers seeking a technical lens, the article’s core idea maps onto several practical dimensions. Compute capacity growth translates to faster training runs, enabling researchers to experiment with larger parameter counts, larger datasets, and more extensive hyperparameter sweeps. Inference workloads would benefit from lower latency and higher throughput, enabling features such as real-time AI assistants, more responsive search results, and better user experiences in AI-powered applications. The software stack would need to scale accordingly, with improvements in data pipelines, model versioning, and observability.

From a market perspective, Google’s stance places pressure on peers to articulate comparable roadmaps. If multiple hyperscalers pursue similar growth trajectories, the AI compute landscape could shift toward a more standardized baseline of performance and availability. This could in turn lower the barrier for developers and enterprises to adopt AI-powered solutions, accelerating innovation across sectors such as healthcare, finance, manufacturing, and consumer technology. However, the pace of growth must be sustainable; investors and customers will likely scrutinize the total cost of ownership, energy efficiency, and the long-term viability of optimization strategies.


In sum, the claim to double capacity every six months is an audacious target that, if pursued with discipline and transparency, could accelerate AI development and deployment capabilities across many industries. The concrete realization of this plan will depend on managing a matrix of factors: hardware supply, software optimization, energy strategy, regional distribution, and governance. The outcome will likely influence how other cloud providers and AI startups plan their own infrastructure strategies and how customers design their AI-enabled products for scale.

Real-World Experience

Practically implementing a plan to double compute capacity every six months would involve a phased, staggered approach across multiple dimensions of the business. Initially, Google would need to expand its current data center footprint to provide additional racks, power, and cooling capacity. This could include retrofitting existing facilities with higher-density cooling solutions, as well as building new campuses in strategic locations to minimize latency for global users. The geographic spread would be crucial to maintain performance for diverse workloads and to meet data residency requirements in various regions.

Accelerator strategy would likely blend next-generation hardware with an ongoing deployment of established accelerators. The choice of GPUs, TPUs, or other custom accelerators would be guided by performance per watt, price-to-performance, and compatibility with the company’s software stack. A critical aspect would be ensuring a smooth integration path for researchers and engineers transitioning to newer hardware. This entails careful benchmarking, code compatibility considerations, and potential refactoring to leverage accelerators efficiently.

Networking is another cornerstone of real-world scaling. As compute capacity increases, the demand on interconnects between servers, racks, and data centers grows. High-bandwidth, low-latency networks, including intra- and inter-region links, become essential to avoid bottlenecks. This includes faster server-level interconnects, such as PCIe fabric improvements and NVLink-like links between accelerators, as well as advanced routing protocols that minimize data transfer times during training and inference.

Software tooling and orchestration are also central to realizing rapid capacity growth. A multi-tenant environment requires robust scheduling that can balance competing workloads, manage priority classes, and isolate faults to prevent cascading failures. Observability becomes paramount at scale; comprehensive telemetry, tracing, and metrics enable teams to identify performance regressions and optimize resource usage. Model governance, lifecycle management, and reproducibility gain importance as the number of models and experiments grows into the thousands or more.
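To make the idea of priority classes concrete, here is a toy scheduler sketch. It is not a description of Google's scheduler: the `Job` and `Scheduler` classes, the tenant names, and the backfilling policy (letting smaller, lower-priority jobs start when a higher-priority job does not fit) are all assumptions chosen for illustration.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class Job:
    priority: int                              # lower number = higher priority class
    seq: int                                   # tie-breaker: submission order
    name: str = field(compare=False)
    tenant: str = field(compare=False)
    accelerators: int = field(compare=False)


class Scheduler:
    """Toy multi-tenant scheduler over a fixed pool of accelerators."""

    def __init__(self, total_accelerators: int) -> None:
        self.free = total_accelerators
        self._queue: list[Job] = []
        self._seq = count()

    def submit(self, name: str, tenant: str, accelerators: int, priority: int) -> None:
        heapq.heappush(
            self._queue, Job(priority, next(self._seq), name, tenant, accelerators)
        )

    def schedule(self) -> list[str]:
        """Start queued jobs in priority order; jobs that do not fit stay queued."""
        started, deferred = [], []
        while self._queue:
            job = heapq.heappop(self._queue)
            if job.accelerators <= self.free:
                self.free -= job.accelerators
                started.append(job.name)
            else:
                deferred.append(job)           # backfill: keep trying smaller jobs
        for job in deferred:
            heapq.heappush(self._queue, job)
        return started


sched = Scheduler(total_accelerators=8)
sched.submit("train-large", tenant="research", accelerators=6, priority=1)
sched.submit("inference-prod", tenant="product", accelerators=4, priority=0)
sched.submit("sweep", tenant="research", accelerators=2, priority=2)
print(sched.schedule())  # ['inference-prod', 'sweep']
```

In this run the production inference job starts first, the large training job waits because only four accelerators remain, and the small sweep backfills the leftover capacity. A real system would add preemption, quotas per tenant, and fault isolation, none of which are modeled here.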

From a human factors perspective, scaling ambitions stress engineering and operations teams. Recruiting and retaining top talent across hardware engineers, data center technicians, software developers, and site reliability engineers would be essential. Operational excellence initiatives, such as site reliability engineering (SRE) practices and incident response playbooks, would need to mature in lockstep with capacity expansion. A strong emphasis on culture and cross-functional collaboration would help maintain clarity around goals, milestones, and accountability.

User-facing impact would manifest as more reliable AI services and faster access to AI-powered tools. Enterprise customers could experience shorter queueing for training or inference jobs and more flexible options to adjust resources in real-time as workloads shift. For researchers, the ability to run larger experiments with more complex models could lead to breakthroughs in understanding, with potentially shorter development cycles and faster time-to-market for AI features.

However, real-world execution would also surface challenges. Managing risk across a portfolio of data centers requires robust security, compliance, and governance controls. Data privacy considerations would differ across regions, and maintaining consistent security standards at scale would be non-trivial. A commitment to sustainability would be central to maintaining public trust and aligning with corporate environmental goals. The long-term energy strategy would need to demonstrate progress toward reducing the carbon footprint per unit of compute, perhaps through efficiency improvements and renewable energy utilization.

In practice, the success of such a program would hinge on disciplined program management, transparent communication with customers and stakeholders, and a clear roadmap that aligns capacity growth with product roadmaps and research initiatives. Early wins—such as improved throughput for training jobs, reduced end-to-end latency for inference, and better reliability during peak demand—would help establish momentum and validate the architectural choices made to enable scalable expansion.

Pros and Cons Analysis

Pros:

- Significantly increased compute capacity to support larger models and faster experimentation cycles.

- Improved scalability could reduce bottlenecks for AI research and product development.

- Potential for more reliable and lower-latency AI services across regions.

Cons:

- Requires enormous capital investment and ongoing operating costs.

- Energy consumption and environmental impact could rise without aggressive efficiency measures.

- Complex governance, security, and compliance requirements across multiple data centers.

Purchase Recommendation

For enterprises and developers who rely on AI capabilities, the prospect of dramatically expanded compute capacity is appealing, but it should be approached with a structured strategy. Organizations should:

- Align AI roadmaps with anticipated capacity growth to ensure that tooling, data pipelines, and model governance can scale in tandem with hardware expansion.

- Assess total cost of ownership, including capital expenditures, power, cooling, and ongoing maintenance, against anticipated benefits in training speed, inference performance, and time-to-market for AI features.

- Prioritize energy efficiency and sustainability, selecting data center designs, cooling solutions, and hardware accelerators that optimize performance per watt. Consider renewable energy sourcing and carbon offset strategies as part of the long-term plan.

- Ensure strong security and compliance governance, given the expanded attack surface and cross-regional data handling. Implement robust monitoring, anomaly detection, and incident response protocols.

- Build a phased adoption plan that includes pilot programs to validate performance gains and identify potential bottlenecks before full-scale deployment. Establish clear milestones and KPIs to track progress toward the five-year capacity target.
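The total-cost-of-ownership assessment in the list above can be roughed out in a few lines. The sketch below is a simplified model, assuming TCO is capital expenditure plus yearly energy and maintenance; the `ClusterCost` class and every number in the example are hypothetical placeholders rather than real pricing.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class ClusterCost:
    capex_usd: float               # hardware and build-out, paid up front
    it_power_kw: float             # average IT load
    pue: float                     # power usage effectiveness (facility / IT power)
    usd_per_kwh: float             # blended electricity price
    annual_maintenance_usd: float  # staffing, spares, support contracts

    def annual_energy_usd(self) -> float:
        """Yearly electricity cost, including cooling overhead via PUE."""
        return self.it_power_kw * self.pue * HOURS_PER_YEAR * self.usd_per_kwh

    def tco_usd(self, years: int) -> float:
        """Capex plus recurring energy and maintenance over `years`."""
        return self.capex_usd + years * (
            self.annual_energy_usd() + self.annual_maintenance_usd
        )


# Hypothetical on-prem cluster; replace with your own estimates.
cluster = ClusterCost(
    capex_usd=5_000_000,
    it_power_kw=400,
    pue=1.2,
    usd_per_kwh=0.08,
    annual_maintenance_usd=300_000,
)
print(f"3-year TCO: ${cluster.tco_usd(3):,.0f}")
```

Comparing this figure against the equivalent cloud spend for the same training and inference workload gives a first-order view of the trade-off; depreciation, financing, and utilization are left out of this sketch.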

In conclusion, Google’s framework for rapid capacity expansion represents a strategic bet on AI’s centrality to future technology and products. If implemented with rigorous project management, transparent communication, and a clear emphasis on sustainability and security, such a plan could unlock meaningful advantages for researchers, developers, and end users. For organizations considering this trajectory, the takeaway is to prepare for a future where compute is more abundant but also more complex to manage, and to invest in the complementary layers (software, data, governance, and energy) that make scalable, responsible AI possible.

References

- Original Article – Source: https://arstechnica.com/ai/2025/11/google-tells-employees-it-must-double-capacity-every-6-months-to-meet-ai-demand/


*Image source: Unsplash*