TLDR

• Core Points: Photonic Ising machines demonstrate large-scale optimization without cryogenic cooling, building on the Ising model from early 20th-century magnetism.

• Main Content: A photonic system from McGill University shows scalable, room-temperature optimization inspired by Ising models, offering an alternative to quantum annealers for specific problems.

• Key Insights: Light-based hardware can leverage continuous-variable dynamics to tackle complex combinatorial tasks more accessibly than superconducting quantum devices.

• Considerations: Performance, scalability, and error handling must be benchmarked against quantum and classical approaches; practical deployment is still at an early stage.

• Recommended Actions: Encourage cross-disciplinary research combining photonics, optimization theory, and machine learning; pursue benchmarking against established solvers.

Content Overview

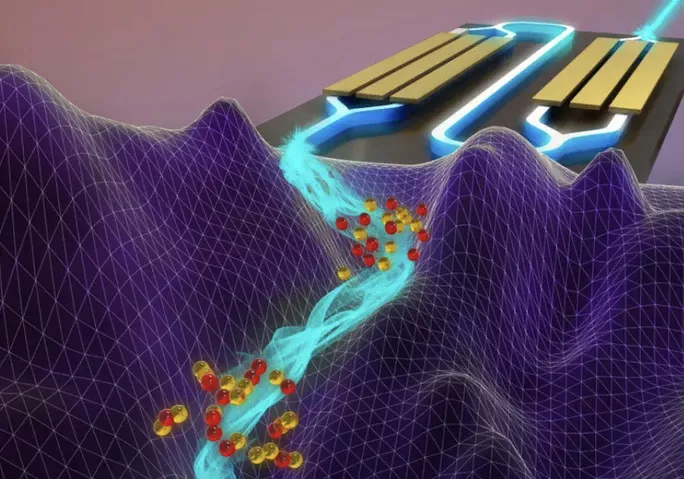

A recent study published in Nature marks a significant milestone in unconventional computing: the first large-scale demonstration of a photonic Ising machine that operates without the cryogenic cooling that typically accompanies quantum computing systems. The device, developed by Bhavin J. Shastri and collaborators at McGill University, takes a concept rooted in early 20th-century magnetism and turns it into a scalable, room-temperature hardware platform. While quantum computing continues to attract attention for certain classes of optimization problems, this photonic approach offers a potentially practical path to solving hard optimization tasks, with different hardware trade-offs and a lower barrier to access. The following synthesis provides context, a deeper analysis of the technology, and reflections on its implications for the broader computational landscape.

The Ising model, a mathematical framework originally formulated to understand magnetic phase transitions, serves as the theoretical backbone for this hardware approach. Many hard optimization problems can be mapped to finding the lowest-energy configuration of a system of interacting spins: the problem is encoded in the strengths of the pairwise interactions between spins (plus any external fields), and the solution is the spin configuration that minimizes the resulting global energy. Traditional electronic and quantum implementations face limits in speed, energy efficiency, or cooling requirements. Photonic systems, by contrast, exploit the speed and bandwidth of light, and its relative insensitivity to thermal noise at room temperature, to encode and process information in a way that emulates the spin interactions of the Ising model, enabling rapid exploration of candidate solutions.
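
For reference, the Ising energy function (Hamiltonian) over $N$ spins is commonly written as below; sign conventions vary between papers, and this one is assumed here. $J_{ij}$ are the pairwise couplings and $h_i$ the external (bias) fields:

$$
H(\mathbf{s}) = -\sum_{i<j} J_{ij}\, s_i s_j \;-\; \sum_{i} h_i\, s_i, \qquad s_i \in \{-1, +1\}
$$

Encoding a problem means choosing $J$ and $h$ so that the configuration with the smallest $H$, the ground state, is the best solution. With $N$ spins there are $2^N$ configurations, which is why exhaustive search becomes infeasible as $N$ grows.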

The McGill work demonstrates a large-scale photonic network capable of solving such optimization problems without the need for ultra-cold environments. In this context, the term “photonic Ising machine” refers to a hardware platform where the dynamics of light within optical components are engineered to mimic the energy landscape of an Ising model. The researchers’ emphasis on scalability and operability at room temperature signals a meaningful shift from some quantum computing paradigms, which require cryogenic cooling to protect fragile quantum states.

Beyond the technical novelty, the study contributes to a growing ecosystem of alternative computing paradigms aimed at optimization tasks that traditional digital approaches, or current quantum devices, cannot solve within reasonable resource budgets. The demonstration aligns with a broader movement toward physics-inspired computation, in which the physical processes themselves perform the computation, to pursue speedups, energy efficiency, and new practical capabilities in optimization, machine learning, and related fields.

To place this work in context, it helps to understand the landscape of optimization computing. Many NP-hard and combinatorial optimization problems arise in logistics, scheduling, circuit design, materials discovery, network routing, and portfolio optimization, among other domains. These problems often require searching an enormous space of possible configurations, which is computationally expensive for conventional processors. Ising-model-inspired approaches, whether implemented in digital annealers, analog networks, quantum annealers, or photonic systems, map the problem onto an energy landscape in which the lowest-energy configurations correspond to the best candidate solutions. The key questions researchers examine include: How effectively can a given platform navigate the energy landscape without getting trapped in local minima? How robust is solution quality in the presence of noise and imperfections? How does the approach scale as problem size grows, and how does its computational cost compare with classical and quantum methods?
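
As a concrete instance of such a mapping, consider max-cut: split the vertices of a weighted graph into two groups so that the total weight of edges crossing between groups is as large as possible. Each vertex gets a spin whose sign marks its group. Choosing couplings J_ij = -w_ij (and no external field) gives cut(s) = (W - H(s)) / 2, where W is the total edge weight, so the lowest-energy spin configuration is the maximum cut. The Python sketch below checks this by exhaustive enumeration on a hypothetical four-node graph. It illustrates the standard textbook mapping, not the encoding used in the McGill hardware.

```python
from itertools import product

# Hypothetical weighted graph: (i, j) -> edge weight w_ij, with i < j.
EDGES = {(0, 1): 1.0, (0, 2): 2.0, (1, 2): 1.0, (1, 3): 3.0, (2, 3): 1.0}
N = 4


def cut_value(spins, edges):
    """Total weight of edges whose endpoints carry opposite spins."""
    return sum(w for (i, j), w in edges.items() if spins[i] != spins[j])


def ising_energy(spins, J):
    """H(s) = -sum_{i<j} J_ij s_i s_j, with couplings stored per edge (i < j)."""
    return -sum(Jij * spins[i] * spins[j] for (i, j), Jij in J.items())


# Max-cut -> Ising: antiferromagnetic couplings J_ij = -w_ij, no external field.
J = {edge: -w for edge, w in EDGES.items()}
W = sum(EDGES.values())

for spins in product((-1, 1), repeat=N):
    # The identity cut(s) = (W - H(s)) / 2 holds for every configuration.
    assert abs(cut_value(spins, EDGES) - (W - ising_energy(spins, J)) / 2) < 1e-12

# The ground state of H is therefore a maximum cut.
ground = min(product((-1, 1), repeat=N), key=lambda s: ising_energy(s, J))
print(ground, cut_value(ground, EDGES))  # (-1, 1, 1, -1) 7.0
```

Because H(s) = H(-s) here, flipping every spin gives the same cut; the search simply returns the first of the two equivalent ground states.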

The McGill photonic Ising machine’s ability to operate without cryogenic constraints also touches on practical considerations for deployment. Room-temperature operation reduces system complexity and maintenance requirements, potentially broadening accessibility for research groups and industry players who may not have extensive cryogenic infrastructure. The use of photonics, with its advantages in parallelism and high bandwidth, may enable large networks of interacting components that encode complex objective functions. At the same time, photonic systems must contend with fabrication tolerances, optical losses, and control precision, all of which influence solution quality and reliability.
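
The effect of limited control precision can be illustrated in software. If fabrication or calibration errors perturb the couplings the hardware actually implements, the device minimizes a slightly different energy function, and that function's ground state may not be the intended one. The Python sketch below assumes a deliberately simple error model (independent Gaussian noise with a hypothetical standard deviation `sigma` added to each coupling). It compares the exact ground states of the intended and perturbed problems by brute force on a small random instance. Real photonic error sources such as losses and phase drift are more structured than this.

```python
import random
from itertools import product


def energy(spins, J):
    """Ising energy H(s) = -sum_{i<j} J[i][j] s_i s_j (no external field)."""
    n = len(spins)
    return -sum(J[i][j] * spins[i] * spins[j] for i in range(n) for j in range(i + 1, n))


def ground_state(J):
    """Exhaustive search over all 2^N configurations (small N only)."""
    return min(product((-1, 1), repeat=len(J)), key=lambda s: energy(s, J))


def random_couplings(n, rng):
    """Hypothetical test instance: symmetric couplings drawn uniformly from [-1, 1]."""
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            J[i][j] = J[j][i] = rng.uniform(-1.0, 1.0)
    return J


def perturb(J, sigma, rng):
    """Assumed error model: additive Gaussian noise on every coupling."""
    n = len(J)
    Jp = [row[:] for row in J]
    for i in range(n):
        for j in range(i + 1, n):
            Jp[i][j] = Jp[j][i] = J[i][j] + rng.gauss(0.0, sigma)
    return Jp


def same_up_to_flip(a, b):
    # Without external fields H(s) = H(-s), so a global flip is the same solution.
    return list(a) == list(b) or list(a) == [-x for x in b]


rng = random.Random(0)
J = random_couplings(8, rng)
ideal = ground_state(J)
for sigma in (0.0, 0.05, 0.2, 0.5):
    found = ground_state(perturb(J, sigma, rng))
    # Score what the imperfect "hardware" found against the intended problem.
    gap = energy(found, J) - energy(ideal, J)
    print(f"sigma={sigma}: same ground state={same_up_to_flip(found, ideal)}, energy gap={gap:.3f}")
```

A nonzero energy gap means the device solved the wrong problem exactly. This is why calibration and coupling accuracy matter as much as the search dynamics themselves.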

In sum, the Nature-published study represents a meaningful addition to the portfolio of technologies pursuing efficient optimization through physical means. It does not claim universal supremacy over quantum computing or classical methods for all problems, but it demonstrates a compelling alternative for certain classes of optimization tasks, particularly those that can be naturally expressed in an Ising-like framework and benefit from the speed and room-temperature practicality of photonic hardware.

In-Depth Analysis

The core concept at the heart of this work is the Ising model, a mathematical abstraction used to study magnetic systems in which spins interact with each other and with external fields. In optimization, the same mathematics can model a wide range of problems by assigning a binary spin variable (+1 or -1) to each decision and defining interaction terms that encode the problem’s constraints and objectives. The global minimum (ground state) of the Ising energy function corresponds to an optimal solution of the original problem, and low-energy states correspond to near-optimal ones.
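
Decision variables are usually 0/1 rather than ±1. A common intermediate step is therefore a QUBO (quadratic unconstrained binary optimization) objective, converted to Ising form by the substitution x_i = (1 + s_i) / 2. The Python sketch below implements that standard conversion, assuming an upper-triangular QUBO matrix as the input convention. It checks by enumeration that both objectives agree on every assignment. The example matrix is hypothetical.

```python
from itertools import product


def qubo_value(x, Q):
    """f(x) = sum_{i<=j} Q[i][j] x_i x_j for binary x (upper-triangular Q)."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


def qubo_to_ising(Q):
    """Return (J, h, offset) such that, with x_i = (1 + s_i) / 2,
    f(x) = -sum_{i<j} J_ij s_i s_j - sum_i h_i s_i + offset."""
    n = len(Q)
    J = [[0.0] * n for _ in range(n)]
    h = [0.0] * n
    offset = 0.0
    for i in range(n):
        # Diagonal: Q_ii x_i = Q_ii (1 + s_i) / 2, since x_i^2 = x_i for binary x.
        h[i] -= Q[i][i] / 2
        offset += Q[i][i] / 2
        for j in range(i + 1, n):
            # Off-diagonal: Q_ij x_i x_j = Q_ij (1 + s_i + s_j + s_i s_j) / 4.
            J[i][j] = J[j][i] = -Q[i][j] / 4
            h[i] -= Q[i][j] / 4
            h[j] -= Q[i][j] / 4
            offset += Q[i][j] / 4
    return J, h, offset


def ising_value(s, J, h, offset):
    """Evaluate -sum_{i<j} J_ij s_i s_j - sum_i h_i s_i + offset."""
    n = len(s)
    pairs = sum(J[i][j] * s[i] * s[j] for i in range(n) for j in range(i + 1, n))
    fields = sum(h[i] * s[i] for i in range(n))
    return -pairs - fields + offset


# Hypothetical 3-variable QUBO (upper triangular).
Q = [[-1.0, 2.0, 0.0],
     [0.0, -1.0, 2.0],
     [0.0, 0.0, -1.0]]
J, h, offset = qubo_to_ising(Q)
for x in product((0, 1), repeat=3):
    s = [2 * xi - 1 for xi in x]  # x = 0 -> s = -1, x = 1 -> s = +1
    assert abs(qubo_value(x, Q) - ising_value(s, J, h, offset)) < 1e-12
```

The constant offset does not change which configuration is optimal. It only keeps the two objective values numerically identical.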

Photonic Ising machines translate this energy-minimization task into a physical system in which properties of light play the role of spins and their couplings. The McGill team’s design builds on a lineage of approaches that use nonlinear optical processes, competition between gain and loss, and network dynamics to create stable states that correspond to low-energy configurations. The key advance reported here is large-scale operation at room temperature, without the stringent cooling that much quantum hardware requires.

From a hardware perspective, several attributes distinguish this photonic platform:

– Photonic Interconnectivity: Light naturally supports high-bandwidth, low-latency interconnections, enabling dense networks of coupled nodes that can simulate complex Ising couplings. The architecture must ensure that the induced couplings and the global energy landscape accurately reflect the problem’s objective function.

– Analog Dynamics: Rather than stepping through discrete computational states with digital logic, the system relies on continuous-variable dynamics of optical fields. The analog nature allows rapid transitions and the potential for parallel exploration of many configurations, which can accelerate convergence to high-quality solutions.

– Noise and Robustness: Real-world hardware inevitably introduces imperfections. An attractive feature of many Ising-inspired photonic systems is their potential to operate in regimes where certain levels of noise do not derail, and may even aid, the search for global minima by enabling escape from local minima. The balance between deterministic dynamics and stochastic perturbations is a crucial design consideration.

– Scalability: Demonstrating that the network can be scaled to solve larger instances is essential for practical relevance. This entails not only increasing the number of interacting components but also maintaining manageable resource consumption (power, control complexity, fabrication yield) and preserving solution accuracy as the system grows.
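
The analog, noise-assisted dynamics described above can be sketched in software with a simplified model of the kind often used to describe gain-dissipative (for example, coherent) Ising machines. Each spin becomes a continuous amplitude x_i that evolves as dx_i/dt = (pump - 1) x_i - x_i^3 + beta * sum_j J_ij x_j + noise, and it is read out as s_i = sign(x_i). This is an assumed model chosen for illustration, not the equations of the McGill device. The pump, coupling strength `beta`, noise level `sigma`, step size, and step count are hypothetical parameters, and the Euler integration is deliberately simple.

```python
import math
import random


def analog_ising_solve(J, pump=0.9, beta=0.5, sigma=0.01, dt=0.01, steps=3000, seed=None):
    """Euler-integrate dx_i/dt = (pump - 1) x_i - x_i^3 + beta * sum_j J_ij x_j + noise,
    then read out spins as the signs of the amplitudes.

    J is a symmetric coupling matrix (list of lists, zero diagonal) in the convention
    H(s) = -sum_{i<j} J_ij s_i s_j, so positive J_ij favors aligned spins."""
    rng = random.Random(seed)
    n = len(J)
    # Start near zero: small random amplitudes stand in for physical fluctuations.
    x = [rng.gauss(0.0, 1e-3) for _ in range(n)]
    noise_scale = sigma * math.sqrt(dt)
    for _ in range(steps):
        # Coupling field felt by each amplitude, computed from the current state.
        field = [sum(J[i][j] * x[j] for j in range(n)) for i in range(n)]
        x = [
            x[i]
            + dt * ((pump - 1.0) * x[i] - x[i] ** 3 + beta * field[i])
            + noise_scale * rng.gauss(0.0, 1.0)  # stochastic kicks; can help escape local minima
            for i in range(n)
        ]
    return [1 if xi >= 0 else -1 for xi in x]


def ising_energy(spins, J):
    """H(s) = -sum_{i<j} J_ij s_i s_j."""
    n = len(spins)
    return -sum(J[i][j] * spins[i] * spins[j] for i in range(n) for j in range(i + 1, n))


# Hypothetical instance: a 4-spin ring with antiferromagnetic couplings (J = -1 per edge).
ring = [[0, -1, 0, -1],
        [-1, 0, -1, 0],
        [0, -1, 0, -1],
        [-1, 0, -1, 0]]
spins = analog_ising_solve(ring, seed=1)
print(spins, ising_energy(spins, ring))  # expected: alternating signs, energy -4
```

With pump - 1 < 0, no amplitude pattern grows on its own. Only the pattern that the coupling term lifts above threshold, the one best aligned with J, grows, which is why the signs tend to settle into a low-energy state. On hard, frustrated instances this is still a heuristic and can end in a local minimum.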

In analyzing performance, researchers typically benchmark against established solvers and other unconventional computing platforms. Metrics include solution quality (how close the found solution is to the global optimum or best-known benchmarks), time to solution, energy efficiency, and resilience to noise and variability. Photonic Ising machines may show advantages in specific regimes, particularly for problems that can be naturally encoded into the network architecture and when extremely fast convergence to good solutions is valuable. However, there may be trade-offs in exactness, generality, and ease of programming compared with digital optimization algorithms on conventional CPUs/GPUs or specialized classical solvers.
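
Two of these metrics are easy to make precise. For stochastic solvers, time to solution is commonly reported as the expected time to reach a target solution with a chosen confidence (often 99%), given the single-run time t_run and the per-run success probability p: TTS = t_run * ln(1 - 0.99) / ln(1 - p). Solution quality for a maximization objective is often summarized as the ratio of the found value to the best-known value. The Python sketch below computes both under these conventions. The usage numbers are hypothetical, and individual studies may define the metrics differently.

```python
import math


def time_to_solution(t_run, p_success, confidence=0.99):
    """Expected time to see at least one success with the given confidence,
    assuming independent runs that each succeed with probability p_success."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    if p_success >= confidence:
        return t_run  # a single run already meets the confidence target
    runs = math.log(1.0 - confidence) / math.log(1.0 - p_success)
    return t_run * runs


def approximation_ratio(found, best_known):
    """Solution quality for a maximization objective (e.g. cut weight); 1.0 is optimal."""
    return found / best_known


# Hypothetical numbers: 1 ms per run, half of all runs reach the best-known solution.
print(time_to_solution(1e-3, 0.5))       # about 6.64e-3 s, i.e. roughly 6.6 runs
print(approximation_ratio(95.0, 100.0))  # 0.95
```

Because TTS folds speed and reliability into one number, a fast but unreliable solver and a slow but reliable one can be compared directly.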

Contextual comparisons are important. Quantum annealers, such as those employing superconducting qubits, also target Ising-model-like problems. They exploit quantum fluctuations to escape local minima and explore the solution space, but they require cryogenic operation and face challenges related to qubit coherence, calibration, and scaling. Classical heuristic methods—such as simulated annealing, parallel tempering, and advanced combinatorial optimization algorithms—have decades of development and remain extremely effective for many practical instances. The photonic Ising machine enters this landscape as a complementary approach: it can blend some benefits of analog parallelism and room-temperature operation with the problem-mapping flexibility of an Ising formulation.
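
For comparison, simulated annealing, one of the classical heuristics named above, attacks the same Ising energy with single-spin Metropolis updates while a temperature parameter is slowly lowered. The minimal Python sketch below assumes a geometric cooling schedule and hypothetical default parameters (`sweeps`, `t_start`, `t_end`). Production solvers use carefully tuned schedules and faster incremental bookkeeping.

```python
import math
import random


def ising_energy(spins, J):
    """H(s) = -sum_{i<j} J_ij s_i s_j for a symmetric J with zero diagonal."""
    n = len(spins)
    return -sum(J[i][j] * spins[i] * spins[j] for i in range(n) for j in range(i + 1, n))


def simulated_annealing(J, sweeps=500, t_start=5.0, t_end=0.05, seed=None):
    """Metropolis single-spin-flip annealing; returns (spins, energy)."""
    rng = random.Random(seed)
    n = len(J)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    cooling = (t_end / t_start) ** (1.0 / max(sweeps - 1, 1))  # geometric schedule
    temperature = t_start
    for _ in range(sweeps):
        for k in range(n):
            # Energy change from flipping spin k: dE = 2 s_k sum_j J_kj s_j.
            local_field = sum(J[k][j] * spins[j] for j in range(n))
            delta = 2 * spins[k] * local_field
            # Metropolis rule: accept downhill moves always, uphill with prob exp(-dE/T).
            if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                spins[k] = -spins[k]
        temperature *= cooling
    return spins, ising_energy(spins, J)


# Hypothetical sanity check: a fully connected 6-spin ferromagnet (all J_ij = 1).
ferro = [[0 if i == j else 1 for j in range(6)] for i in range(6)]
spins, e = simulated_annealing(ferro, seed=0)
print(spins, e)  # all spins aligned, energy -15
```

Here thermal fluctuations, controlled in software by the temperature, play the role that quantum fluctuations play in an annealer and that physical noise can play in an analog machine.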

Nevertheless, the long-term success of photonic Ising machines will hinge on several technical milestones. First, researchers must demonstrate robust accuracy across a broad spectrum of problem types, not just carefully curated benchmarks. Second, the platform must show scalable performance as problem size increases, including the ability to manage longer-range couplings and more complex constraint structures. Third, the engineering community will scrutinize energy efficiency, control overhead, and reliability in real-world settings. Finally, widespread adoption will depend on accessible programming models and interfaces that enable practitioners to translate real-world optimization problems into Ising formulations without prohibitive overhead.
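
One common way to bring constraints into an Ising or QUBO formulation is a quadratic penalty. For example, a one-hot constraint (exactly one of the binary variables x_1, ..., x_n equals 1, as when a task must be assigned to exactly one time slot) can be enforced by adding A * (sum_i x_i - 1)^2 to the objective. This term is zero for feasible assignments and at least A otherwise. The Python sketch below expands the penalty into QUBO coefficients. The penalty weight A is a hypothetical tuning parameter: it must be large enough to make violations unattractive but not so large that it swamps the actual objective. This is a standard textbook encoding, not one taken from the study.

```python
from itertools import product


def one_hot_penalty_qubo(n, A):
    """Upper-triangular QUBO Q and constant offset such that
    sum_{i<=j} Q[i][j] x_i x_j + offset == A * (sum_i x_i - 1) ** 2 for binary x."""
    Q = [[0.0] * n for _ in range(n)]
    for i in range(n):
        Q[i][i] = -A  # from A*x_i^2 - 2A*x_i, using x_i^2 = x_i
        for j in range(i + 1, n):
            Q[i][j] = 2 * A  # cross terms of the square
    return Q, A  # the constant term of the expansion is A


def qubo_value(x, Q, offset=0.0):
    """offset + sum_{i<=j} Q[i][j] x_i x_j."""
    n = len(x)
    return offset + sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


Q, offset = one_hot_penalty_qubo(3, A=4.0)
for x in product((0, 1), repeat=3):
    # Zero exactly when one bit is set; grows quadratically with the violation.
    assert qubo_value(x, Q, offset) == 4.0 * (sum(x) - 1) ** 2
```

Each such constraint adds dense pairwise couplings among the variables it involves, which is one reason constraint-heavy problems stress the connectivity of Ising hardware.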

The work by Shastri and colleagues is not a claim of quantum supremacy or a direct substitution for quantum computing. Rather, it presents a compelling demonstration of how photonics can be leveraged to realize a hardware approach for a class of optimization problems with potentially practical advantages. In the broader scientific and engineering community, this aligns with ongoing efforts to explore “physical computing” paradigms where physics-first implementations of computation can outperform traditional digital designs for specific tasks.

As this field evolves, several dimensions merit close attention:

– Benchmark diversity: A thorough comparison across multiple problem families—such as graph partitioning, max-cut, scheduling, and facility location—will help clarify the strengths and limitations of photonic Ising systems.

– Hybrid strategies: There is growing interest in hybrid architectures that combine photonic optimization engines with digital processors or machine learning components to improve input encoding, post-processing, and solution refinement.

– Error mitigation and calibration: Advances in precise fabrication, stable control of optical couplings, and compensation for losses will be essential for maintaining performance as the network scales.

– Cost and practicality: Beyond laboratory demonstrations, practical deployment requires evaluating capital costs, maintenance, and operational complexity relative to existing optimization toolchains.
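
As a small example of the digital post-processing mentioned under hybrid strategies, a greedy single-spin-flip local search can polish a candidate configuration returned by an analog optimizer. It repeatedly flips any spin whose flip lowers the Ising energy until no such spin remains, which guarantees a single-flip local minimum. The Python sketch below assumes the energy convention H(s) = -sum_{i<j} J_ij s_i s_j - sum_i h_i s_i and a hypothetical noisy hardware readout. It is an illustration, not a component described in the study.

```python
def flip_delta(spins, J, h, k):
    """Energy change from flipping spin k: dE = 2 s_k (sum_j J_kj s_j + h_k)."""
    local = sum(J[k][j] * spins[j] for j in range(len(spins))) + h[k]
    return 2 * spins[k] * local


def greedy_refine(spins, J, h):
    """Flip energy-lowering spins until none remain (a single-flip local minimum)."""
    spins = list(spins)
    improved = True
    while improved:  # terminates: every accepted flip strictly lowers the energy
        improved = False
        for k in range(len(spins)):
            if flip_delta(spins, J, h, k) < 0:
                spins[k] = -spins[k]
                improved = True
    return spins


# Hypothetical readout from a 4-spin ferromagnet in which one spin landed on the wrong side.
J = [[0 if i == j else 1 for j in range(4)] for i in range(4)]
h = [0.0] * 4
print(greedy_refine([1, 1, 1, -1], J, h))  # [1, 1, 1, 1]
```

Local search is cheap and cannot make a solution worse, but it only removes defects a single flip can fix. That division of labor, global exploration in the analog engine and local cleanup in digital logic, is what hybrid pipelines aim for.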

*Image source: Unsplash*

Overall, the publication signals a progressive step toward harnessing light for solving challenging optimization tasks. It showcases how photonic technologies can be engineered to emulate the physics of the Ising model and suggests a potential avenue for robust, scalable optimization hardware that operates without the heavy infrastructure demanded by quantum systems. While this approach does not replace quantum computing or classical optimization in all contexts, it enriches the field by expanding the spectrum of viable physical substrates for problem-solving and by encouraging a diversifying ecosystem of alternative computing paradigms.

Perspectives and Impact

The implications of a room-temperature photonic Ising machine extend beyond the confines of a single research group or journal milestone. If further development confirms competitive performance across larger problem classes and with practical energy advantages, such systems could become valuable tools for industries where rapid, high-volume optimization is crucial. Examples include logistics optimization for supply chains, real-time traffic routing, dynamic resource allocation in data centers, and complex design optimization in engineering and materials science.

From a scientific perspective, the demonstration underscores the versatility of Ising-model-inspired computation. It adds to the evidence that a broad family of physical systems, ranging from superconducting qubits to memristive networks to photonic lattices, can be mapped to the same foundational optimization problem. This diversity may accelerate progress by letting researchers choose hardware platforms that match specific problem characteristics, development timelines, and deployment constraints.

The room-temperature operation is particularly noteworthy. Cryogenic systems impose significant cost, complexity, and accessibility barriers. By removing that constraint, the photonic approach broadens the pool of potential users—academic labs, startups, and established industry players—who can experiment with and implement optimization hardware without specialized infrastructure. This democratization could spur more rapid iteration, benchmarking, and cross-pollination with fields such as optical communications, neuromorphic engineering, and machine learning.

However, several questions will guide future research. First, what are the ultimate limits of problem size and connectivity that a photonic Ising machine can practically handle? As networks grow, how do optical losses, phase stability, and fabrication variations affect performance? Second, how do total cost of ownership and maintenance at scale compare with those of quantum accelerators and of high-performance computing clusters running classical optimization methods? Third, can photonic Ising machines integrate with existing software ecosystems, so that practitioners can model problems in familiar languages and workflows?

The broader optics and photonics community will also consider the implications for standards, interoperability, and reproducibility. Developing common interfaces, benchmark suites, and open-source toolchains will help ensure that results are comparable across laboratories and that improvements can be rapidly disseminated. Collaboration with theorists will be essential to refine mappings from real-world problems to Ising formulations that maximize the likelihood of obtaining high-quality solutions with photonic hardware.

In terms of policy and industry strategy, photonic optimization hardware could influence how organizations approach operational research and decision support. If capital and operating costs are favorable and the systems prove robust, there could be growing interest in deploying dedicated optimization accelerators as co-processors in data centers or as standalone optimization workhorses for specific workflows. This would complement, rather than replace, existing digital optimization software, potentially enabling more responsive planning and scheduling in dynamic environments.

From an educational vantage point, the emergence of photonic Ising machines offers an opportunity to enrich curricula in physics, electrical engineering, and computer science. Students can explore how continuous-variable photonics can realize complex combinatorial optimization tasks, bridging theory with hands-on hardware experiments. This interdisciplinary space can cultivate a workforce adept at translating mathematical optimization problems into physically implementable architectures.

The work’s broader significance lies in its demonstration that light, as a computational substrate, holds promise for tackling a class of optimization problems with the practicality of room-temperature operation. It feeds ongoing conversations about the role of physics-inspired computing in the future of computation, where different modalities (quantum, classical, and photonic) can be deployed in complementary ways to meet the diverse demands of real-world problems.

Key Takeaways

Main Points:

– A large-scale photonic Ising machine from McGill University operates at room temperature, avoiding cryogenic requirements.

– The device leverages photonics to emulate Ising-model interactions, enabling rapid optimization for certain problems.

– This work represents a complementary approach to quantum computing and traditional classical solvers, expanding the landscape of optimization hardware.

Areas of Concern:

– Generalizability across diverse problem types and scales remains to be thoroughly demonstrated.

– Comparative benchmarks against mature classical methods and quantum approaches are essential to assess true practical advantage.

– Long-term reliability, cost, and integration with existing workflows require further elucidation.

Summary and Recommendations

The Nature publication describing a large-scale photonic Ising machine marks an important milestone in the broader quest to diversify computational substrates for optimization. By harnessing light to implement Ising-model dynamics at room temperature, the McGill team provides a compelling demonstration of how physics-inspired computation can address complex optimization tasks with potential advantages in speed and practicality. While it does not claim supremacy over quantum devices or classical solvers for all problem classes, it adds a valuable pathway to explore for problems naturally cast in an Ising framework and for applications where rapid, scalable optimization is paramount.

For researchers and practitioners, several strategic directions emerge:

– Expand benchmarking across a wider array of problem families to map strengths and limitations clearly.

– Investigate hybrid architectures that combine photonic Ising machines with digital processors or machine learning components for end-to-end optimization pipelines.

– Focus on engineering advances to improve scalability, control precision, and resilience to losses and fabrication variations.

– Pursue collaborative efforts to develop standard tools, benchmarks, and open interfaces that ease translation of real-world problems into Ising formulations.

If these avenues are pursued, photonic Ising machines could become a meaningful component in the spectrum of optimization technologies, offering a practical alternative or complement to quantum hardware such as quantum annealers in specific contexts. The ongoing exploration of light-based computation reinforces the broader insight that hardware design, informed by the physics of the medium, can unlock new performance envelopes for tackling some of computing’s most challenging optimization problems.

References

- Original: https://www.techspot.com/news/111262-light-may-outshine-quantum-computing-toughest-optimization-problems.html

