TLDR

• Core Points: UCSD redesigns RRAM operation to accelerate neural networks, potentially enabling local AI applications amid remaining challenges.

• Main Content: Stacked memory approaches are being explored as a way to close the performance gaps that have held traditional RRAM back on neural network tasks.

• Key Insights: Advances focus on scalability and integration, but reliability, endurance, and fabrication complexity remain hurdles.

• Considerations: Practical deployment will require overcoming material and process challenges, plus robust software-hardware co-design.

• Recommended Actions: Track research progress, evaluate hybrid memory solutions, and prioritize applications with strong local processing needs.

Content Overview

Emerging memory technologies have long promised to accelerate artificial intelligence workloads by moving computation closer to memory storage, thus reducing data movement and energy use. Among these technologies, resistive random-access memory (RRAM) has drawn attention for its potential to implement in-memory computing for neural networks. However, despite years of research, RRAM has not yet delivered a robust, widely deployable solution for neural-network acceleration. In response, researchers at the University of California, San Diego (UCSD) are rethinking how RRAM operates to speed up neural network execution. By redesigning the material properties, device architecture, and circuit approaches, the UCSD team aims to close the performance gap and open up a path toward a new class of local AI applications. The central question driving this work is whether a reimagined RRAM paradigm—potentially paired with stacked memory architectures—can provide practical benefits for inference and training workloads in neural networks, while addressing the remaining technological and manufacturing challenges.

This article explores the motivations behind reshaping RRAM, the technical strategies being investigated, and the broader context in which stacked and non-volatile memory approaches are positioned within the AI hardware landscape. It also considers the implications for future AI deployment scenarios, potential timelines, and the kinds of applications that could benefit from memory-centric processing. The discussion remains grounded in the objective assessment of current capabilities, expected progress, and the critical obstacles that must be overcome before such technologies can be widely adopted in real-world systems.

In-Depth Analysis

Neural networks are computation-heavy, and a significant portion of energy and time in modern AI systems is spent moving data between memory and processing units. Traditional von Neumann architectures separate memory and compute, leading to bottlenecks as model sizes grow and real-time inference becomes more demanding. In-memory computing has been proposed as a solution, enabling computation to occur where data resides, thereby reducing latency and energy consumption. RRAM—an emerging non-volatile memory technology—has been studied extensively in this context due to its potential for dense storage, non-volatility, and compatibility with crossbar circuit structures that can perform matrix-vector multiplications efficiently.
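To make the crossbar idea concrete, here is a minimal sketch of an idealized crossbar read, not a model of the UCSD design. It assumes each cell stores a conductance, input voltages drive the rows, and each column wire sums the resulting currents, so the column currents equal a matrix-vector product. The function name `crossbar_mvm` and the example values are hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Ideal crossbar read: column current I_j = sum_i G[i][j] * V[i].

    conductances: rows x cols list of cell conductances, in siemens.
    voltages: one input voltage per row, in volts.
    Returns one summed current per column, in amperes.
    """
    rows, cols = len(conductances), len(conductances[0])
    if len(voltages) != rows:
        raise ValueError("need one voltage per crossbar row")
    currents = [0.0] * cols
    for i in range(rows):
        for j in range(cols):
            # Ohm's law gives the per-cell current; Kirchhoff's current law
            # sums those currents along the shared column wire.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 3x2 array; values are for illustration only.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]
I = crossbar_mvm(G, V)  # approximately [2.2e-6, 2.8e-6] amperes
```

In hardware the sum happens in the analog domain in a single read step, which is where the efficiency argument comes from; the nested loop here only reproduces the arithmetic.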

Yet, despite its theoretical advantages, RRAM faces practical challenges. Device-to-device variability, limited endurance, resistance drift, and fabrication complexity have slowed the path to reliable, large-scale deployment. Moreover, achieving high-precision, stable weight updates for neural networks—especially for training tasks—has proven difficult with certain RRAM material systems. As a result, researchers have sought alternative approaches and design optimizations to salvage RRAM’s promise or to integrate it with stacked memory configurations that can bolster capacity and performance.
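One way to see why variability matters is to perturb the stored conductances and compare the result with the ideal product. The sketch below assumes a simple multiplicative Gaussian noise model, which is an illustrative choice and not a characterization of any real RRAM device; the function names, the noise levels, and the array values are all hypothetical.

```python
import random


def mvm(G, V):
    """Ideal matrix-vector product: one summed current per column."""
    return [sum(G[i][j] * V[i] for i in range(len(V)))
            for j in range(len(G[0]))]


def perturb(G, sigma, rng):
    """Scale each conductance by an independent factor drawn from N(1, sigma)."""
    return [[max(0.0, g * rng.gauss(1.0, sigma)) for g in row] for row in G]


def mean_relative_error(G, V, sigma, trials=200, seed=0):
    """Average relative error of the noisy column currents over many trials."""
    rng = random.Random(seed)
    ideal = mvm(G, V)
    total = 0.0
    for _ in range(trials):
        noisy = mvm(perturb(G, sigma, rng), V)
        total += sum(abs(n - i) / abs(i)
                     for n, i in zip(noisy, ideal)) / len(ideal)
    return total / trials


# Hypothetical array and inputs, for illustration only.
G = [[1e-6, 2e-6], [3e-6, 4e-6], [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]
err_small = mean_relative_error(G, V, sigma=0.05)
err_large = mean_relative_error(G, V, sigma=0.20)
```

Under this assumed model the output error grows with the spread of the conductances, which is the intuition behind treating device-to-device variability as an accuracy problem and not only a yield problem.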

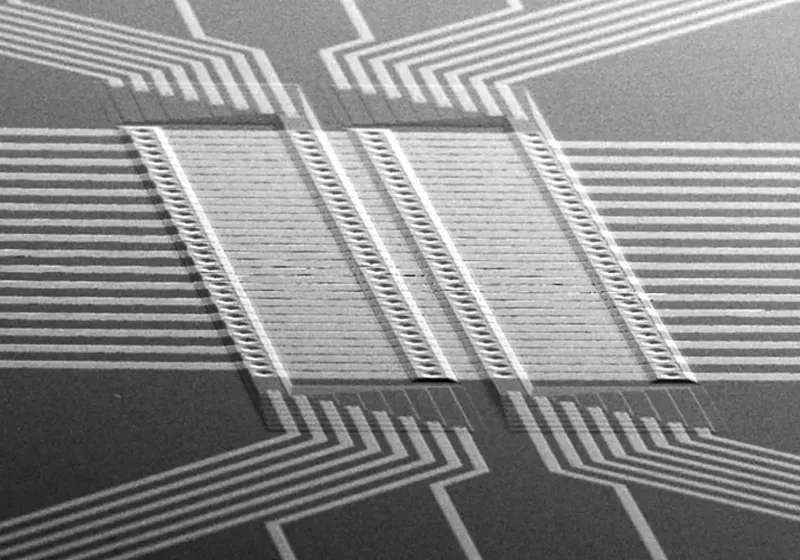

UCSD’s approach centers on redesigning how RRAM operates to accelerate neural network execution. While the original concept of RRAM as a direct replacement for conventional memory in neural accelerators faced hurdles, the team is exploring architectural and material strategies that could yield practical benefits. These efforts may involve rethinking the switching mechanisms, leveraging specific oxide or material stacks to reduce variability, and implementing circuit-level innovations that improve reliability and speed. In addition, the idea of stacking memory—assembling multiple layers of memory cells into three-dimensional configurations—could enhance density and throughput, potentially enabling more compact and capable AI accelerators.

A key consideration in this line of work is balancing performance with manufacturability. Stacked memory can offer high density and parallelism, which are attractive for neural network workloads. However, stacking introduces its own set of fabrication challenges, such as interlayer connectivity, thermal management, and process compatibility with existing semiconductor manufacturing lines. The UCSD researchers are likely exploring ways to mitigate these issues through materials science breakthroughs, novel device structures, and advanced packaging techniques. The overarching objective is to deliver a memory-centric platform that can support low-power, high-throughput neural network inference—and perhaps some limited training—without requiring a shift to entirely new hardware ecosystems.

The research must also contend with the broader ecosystem of AI hardware. Other memory technologies, such as conventional NAND flash, phase-change memory (PCM), and resistive switching devices beyond RRAM, are competing for the same role in memory-centric accelerators. In some cases, researchers are exploring hybrids that pair memory with dedicated accelerators, or neuromorphic approaches that implement brain-inspired computation in memory-centric architectures. The UCSD work contributes to this broader context by re-evaluating the viability of RRAM within stacks and by identifying architectural patterns that could make it more practical for certain neural network tasks.

One of the central questions remains: what class of local AI applications could emerge if stacked memory or redesigned RRAM approaches prove viable? Local AI involves devices or edge nodes that perform inference or lightweight training without frequent cloud communication. Potential applications include real-time anomaly detection on industrial equipment, smart sensors and wearables with on-device personalization, autonomous robotics with on-board perception and decision-making, and other edge devices that require fast access to trained weights and rapid inference. If memory-centric processing can deliver the necessary throughput and energy efficiency in a compact form factor, it could enable a new wave of locally deployed AI capabilities that reduce latency, privacy risks, and reliance on remote data centers.

Looking ahead, the timeline for wide-scale deployment remains uncertain. The path to commercialization depends on solving material reliability issues, ensuring manufacturability at scale, achieving robust software-hardware integration, and building an ecosystem of design tools and validation methodologies. Researchers are typically pursuing incremental milestones: improving write endurance, reducing resistance variability, achieving predictable programming of weights, and demonstrating end-to-end improvements in specific neural network workloads. Even modest gains in latency or energy efficiency at the device or chip level can aggregate into meaningful benefits for data centers and edge devices, but the road to replacement of established memory and compute architectures is long and non-linear.

The UCSD effort represents one thread in a broader exploration of how memory technologies can be optimized for neural networks. It highlights the importance of rethinking both device physics and system-level integration to unlock potential gains from non-volatile memories. While the exact outcomes of this research remain to be seen, the direction underscores a growing interest in memory-centric computing as a pathway to more energy-efficient AI systems, particularly for workloads that can tolerate or benefit from near-storage computing paradigms. The results, if successful, could influence how future AI accelerators are designed, potentially favoring stacked memory architectures or hybrid configurations that blend non-volatile memory with traditional processing units.

In summary, UCSD’s redesigned approach to RRAM and its exploration of stacked memory architectures aim to accelerate neural network execution and enable local AI applications. The project reflects a pragmatic stance: pursue performance improvements while acknowledging and addressing the material, fabrication, and systems challenges that have limited RRAM’s impact to date. As researchers continue to test and validate these ideas, the AI hardware community will watch closely for demonstrations that can translate into tangible benefits for real-world neural network workloads.

Perspectives and Impact

The pursuit of in-memory computing for neural networks is driven by a simple premise: if computation can occur where data is stored, the energy and latency penalties associated with data movement can be dramatically reduced. RRAM, with its non-volatile properties and potential for dense crossbar arrays, has long been a promising candidate for this approach. UCSD’s recent focus on reimagining RRAM operation, coupled with the concept of stacked memory, represents a strategic evolution in this field. By addressing material-level challenges and system-level integration hurdles, the researchers aim to push RRAM-based accelerators from theoretical potential toward practical, deployable solutions.

*Image source: Unsplash*

One of the core implications of this line of work is the potential transformation of edge AI. Local AI applications, which require on-device inference with minimal latency and privacy-preserving data handling, stand to benefit from memory-centric designs that reduce the amount of data shuttled to and from centralized data centers. If stacked memory architectures can provide sufficient capacity and throughput while maintaining reliability, edge devices, from industrial sensors to consumer devices, could perform increasingly sophisticated AI tasks without relying heavily on cloud infrastructure. This would shift the balance of compute toward distributed intelligence, with implications for performance, privacy, and energy usage.

From a technology development perspective, the UCSD approach emphasizes the importance of co-design across device physics, circuit architecture, and software frameworks. Achieving practical gains requires an end-to-end pipeline where memory devices are not only capable of storing weights with low drift and high endurance but are also programmable in ways that suit neural-network algorithms and training regimes. This often calls for novel programming models, error mitigation strategies, and software tools that can map neural nets efficiently onto memory-centric hardware. The success of such efforts hinges on successful collaboration across disciplines, including materials science, electrical engineering, computer architecture, and machine learning.
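As an illustration of what mapping a network onto memory hardware can involve, the sketch below uses one commonly described scheme: a signed weight is stored as the difference between two cell conductances, each limited to a small number of programmable levels. This is an assumed scheme chosen for clarity, not the method used by the UCSD team, and every name and parameter (`w_max`, `g_min`, `g_max`, `levels`) is hypothetical.

```python
def weight_to_pair(w, w_max, g_min, g_max, levels):
    """Map a signed weight to a (g_plus, g_minus) conductance pair.

    Each cell can be programmed to `levels` evenly spaced conductances
    between g_min and g_max. The weight's sign picks which cell is raised.
    """
    if levels < 2:
        raise ValueError("need at least two conductance levels")
    w = max(-w_max, min(w_max, w))            # clip to the representable range
    step = (g_max - g_min) / (levels - 1)     # spacing between levels
    k = round(abs(w) / w_max * (levels - 1))  # nearest programmable level
    g_high = g_min + k * step
    return (g_high, g_min) if w >= 0 else (g_min, g_high)


def pair_to_weight(g_plus, g_minus, w_max, g_min, g_max):
    """Recover the effective weight from the conductance difference."""
    return (g_plus - g_minus) / (g_max - g_min) * w_max


# Hypothetical device range and a 5-level cell, for illustration only.
G_MIN, G_MAX, LEVELS = 1e-6, 1e-4, 5
pair = weight_to_pair(-0.3, 1.0, G_MIN, G_MAX, LEVELS)
stored = pair_to_weight(*pair, 1.0, G_MIN, G_MAX)  # close to -0.25
```

The gap between the requested weight (-0.3) and the stored one (about -0.25) is the quantization error that software-side techniques such as error mitigation would need to absorb.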

At the same time, there are significant challenges that could temper optimism. Material variability and drift can degrade model accuracy over time, particularly in inference tasks where long-term stability is crucial. Endurance limits—the number of write cycles a memory cell can endure before failure—pose a risk for training workloads that require frequent weight updates. Thermal management becomes more complex in stacked configurations, where dense 3D memory can accumulate heat and impact performance and reliability. Manufacturing complexity and yield are additional practical constraints that must be addressed to achieve cost-effective production at scale. These factors collectively shape the feasibility and timing of any widespread adoption.
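The endurance concern comes down to simple arithmetic: a cell's usable lifetime is roughly its rated write cycles divided by how often it is rewritten. The sketch below works through that with made-up numbers; the endurance figure and both write rates are assumptions for illustration, not measurements of any RRAM device.

```python
SECONDS_PER_DAY = 86_400


def days_until_wearout(endurance_cycles, writes_per_second):
    """Estimate cell lifetime in days from endurance and write rate."""
    if writes_per_second <= 0:
        raise ValueError("write rate must be positive")
    return endurance_cycles / writes_per_second / SECONDS_PER_DAY


# Assumed endurance of one million write cycles per cell (illustrative).
ENDURANCE = 1_000_000

# Training-like load: each cell rewritten 100 times per second (assumed).
training_days = days_until_wearout(ENDURANCE, 100)

# Inference-like load: weights reprogrammed once per day (assumed).
inference_days = days_until_wearout(ENDURANCE, 1 / SECONDS_PER_DAY)
```

With these assumed figures the training-style load exhausts the cell in under a day, while the inference-style load would last on the order of a million days, which is why endurance is framed as a training problem far more than an inference one.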

Despite these hurdles, the broader trend toward memory-centric AI hardware continues to gain momentum. Researchers are exploring diverse avenues: new material systems with improved stability, error-resilient network training techniques, adaptive precision schemes that reduce write stress, and hierarchical memory architectures that combine the strengths of different non-volatile memories. UCSD’s work on redesigning RRAM and leveraging stacked memory aligns with this trend by seeking to extract more performance from memory devices while maintaining a realistic view of the technological and manufacturing barriers. The potential payoff is a new tier of AI accelerators that can deliver competitive performance at lower energy costs for a subset of workloads, especially those that can tolerate architectural constraints or benefit from on-chip personalization.

Another dimension of impact concerns ecosystem readiness. For RRAM-based or stacked memory solutions to gain traction, there must be mature design tools, reliable manufacturing processes, standardized benchmarks, and a clear value proposition relative to competing technologies. The research community often measures progress not only in raw speed or energy metrics but also in reproducibility across labs, manufacturability at scale, and the ability to integrate with existing software stacks. As the UCSD project progresses, the emergence of demonstrators, reference designs, and industry partnerships will be crucial for translating laboratory breakthroughs into commercial opportunities.

In the longer term, if UCSD and allied researchers can deliver robust, scalable, and cost-effective memory-centric accelerators, several societal and economic impacts could follow. AI workloads could become more energy-efficient, enabling broader deployment of AI at the edge, potentially improving privacy and reducing dependence on data centers. This could catalyze new products and services, from smarter industrial automation to consumer devices with enhanced local intelligence. However, it could also influence strategic considerations around data sovereignty and the geographic distribution of compute resources. The balance between centralized cloud compute and distributed edge processing would continue to evolve as memory-centric solutions mature.

Ultimately, the success of redesigning RRAM and advancing stacked memory hinges on a confluence of breakthroughs across multiple domains and a clear demonstration of value in real workloads. As with many nascent technologies, early excitement gives way to careful validation as researchers grapple with practical constraints. The UCSD effort is a valuable contribution to the ongoing dialogue about how best to accelerate neural networks beyond the limitations of traditional architectures, and it sets the stage for future experiments, prototypes, and possibly new industry standards in AI hardware.

Key Takeaways

Main Points:

– UCSD is redesigning RRAM operation to accelerate neural network execution, aiming to enable local AI applications.

– Stacked memory architectures are being explored to improve density, throughput, and integration for memory-centric AI.

– Real-world deployment faces material variability, endurance, thermal, and manufacturing challenges that must be overcome.

Areas of Concern:

– Device-to-device variability and long-term reliability of RRAM remain critical hurdles.

– Endurance limits and resistance drift can affect accuracy and longevity of AI models.

– Manufacturing complexity and cost of stacked memory systems may impede scalable production.

Summary and Recommendations

The UCSD initiative reflects a pragmatic approach to advancing memory-centric AI hardware by rethinking how RRAM operates and by leveraging stacked memory concepts. While RRAM has not yet delivered a mature solution for neural-network acceleration, the redesigned operating paradigms and three-dimensional memory configurations under investigation could address several of the most persistent obstacles, namely scalability, energy efficiency, and data movement bottlenecks. The work remains contingent on resolving variability, endurance, thermal, and fabrication challenges that have historically slowed progress in RRAM-based accelerators.

For researchers and industry stakeholders, the prudent course is to pursue a diversified research portfolio that includes material innovations, device engineering, circuit-level optimizations, and software-hardware co-design strategies. Demonstrations should target concrete workloads that benefit most from memory-centric processing, with clear metrics for latency, energy, accuracy, and reliability. Collaboration across academia and industry, as well as the development of robust validation platforms and reference designs, will be essential to translate laboratory results into production-grade solutions.

If memory-centric solutions like redesigned RRAM and stacked memory prove viable, they could unlock a new class of local AI applications characterized by lower latency, reduced energy consumption, and enhanced privacy. Edge devices, industrial automation, and consumer electronics could increasingly perform sophisticated inference and lightweight on-device training without heavy dependence on cloud infrastructure. The broader impact would be the acceleration of on-device intelligence and a shift in how AI workloads are distributed across the data ecosystem. However, substantial engineering and manufacturing milestones lie ahead before such technologies can achieve widespread adoption.

References

- Original: https://www.techspot.com/news/111276-rram-hasnt-delivered-but-stacked-memory-pitched-run.html

