TLDR¶

• Core Points: Data preprocessing cleans, transforms, and readies data for ML models, improving accuracy and learning efficiency.

• Main Content: It is the essential step after collecting data, addressing quality issues, normalization, feature engineering, and preparation for modeling.

• Key Insights: Proper preprocessing reduces noise, handles missing values, scales features, and encodes information to align with model requirements.

• Considerations: The approach depends on data type, model choice, and specific project goals; over-processing can waste resources.

• Recommended Actions: Assess data quality, apply appropriate cleaning and transformation techniques, document decisions, and validate impact on model performance.

Content Overview¶

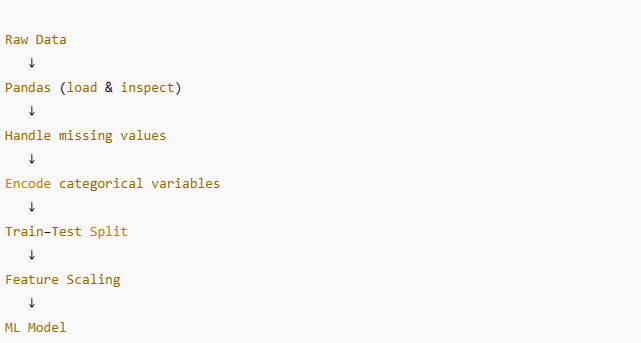

Data preprocessing serves as the critical stage that transforms raw data into a form suitable for machine learning models. While data collection and storage are foundational, models can only learn effectively when the input data is clean, consistent, and informative. This article outlines what data preprocessing entails, why it matters, and common techniques used to enhance data quality for various machine learning tasks. By understanding preprocessing, data scientists can improve model accuracy, training efficiency, and the reliability of predictions.

In practice, preprocessing encompasses a broad set of activities. It begins with data cleaning—identifying and addressing errors, inconsistencies, and invalid values. It continues with data transformation, where features are scaled, normalized, or encoded to align with the assumptions and requirements of specific algorithms. Feature engineering—creating new, more informative features from existing data—often plays a pivotal role in boosting model performance. Finally, preprocessing prepares the data for model ingestion, ensuring that the dataset is representative, balanced, and ready for training and evaluation. This stretch from raw data to ML-ready input is what makes preprocessing a vital bridge in the machine learning workflow.

In-Depth Analysis¶

Data preprocessing is not merely a preparatory ritual; it is a substantive process that directly influences model outcomes. The quality of input data determines the learning capacity of algorithms, and the absence of proper preprocessing can obscure patterns, amplify noise, or bias results. The preprocessing pipeline typically includes several interconnected steps:

Data Cleaning

– Handling missing values: Strategies include imputation (mean, median, mode, or model-based approaches), deletion, or flagging missingness as a separate feature.

– Correcting errors: Fixing typos, outliers, or inconsistent units and formats.

– Removing duplicates: Ensuring each observation provides unique information.Data Transformation

– Normalization and Standardization: Rescaling features so they contribute equally to distance-based algorithms and gradient-based optimization.

– Encoding categorical variables: Techniques such as one-hot encoding, label encoding, or target encoding convert non-numeric data into numeric representations suitable for models.

– Binning and discretization: Converting continuous features into categorical bins when beneficial for certain models or interpretability.Feature Engineering

– Interaction terms: Combining features to capture joint effects (e.g., product of features, ratios).

– Aggregation: Summarizing time-series or grouped data (mean, max, min, standard deviation).

– Domain-specific transformations: Creating features informed by subject-matter knowledge to reveal meaningful patterns.

– Dimensionality reduction: Reducing feature space while preserving information (PCA, t-SNE, UMAP) when high dimensionality poses challenges.Data Integration and Consistency

– Aligning schemas from disparate sources: Ensuring consistency in feature names, data types, and scales.

– Time alignment: Synchronizing data collected at different frequencies or timestamps.

– Handling imbalanced data: Techniques such as resampling, synthetic data generation (e.g., SMOTE), or adjusting class weights to address skewed targets.Data Partitioning and Validation Readiness

– Splitting data into training, validation, and test sets in a way that preserves distributional characteristics.

– Ensuring leakage prevention: Avoiding information from the test set leaking into the training process.

– Creating robust baselines: Establishing straightforward models to gauge the impact of preprocessing steps.

Choosing the right preprocessing strategy depends on several factors:

– Data type: Structured tabular data, text, images, or time-series each requires different preprocessing considerations.

– Model requirements: Some algorithms are sensitive to feature scales or rely on specific data formats.

– Domain knowledge: Incorporating expert insights can guide feature engineering and data cleaning decisions.

– Resource constraints: More complex preprocessing can increase computational cost; the gains must justify the investment.

Common pitfalls include overfitting to preprocessing steps, introducing data leakage (e.g., using information from the test set during imputation or scaling), and underestimating the importance of reproducibility. A well-documented preprocessing workflow with clear rationale helps maintain model integrity and ease of maintenance.

Practical best practices:

– Start with data profiling: Understand distributions, missingness patterns, and correlations to guide preprocessing choices.

– Use robust imputation: Prefer methods that reflect uncertainty and preserve relationships between features.

– Normalize responsibly: Apply scaling within cross-validation folds to avoid optimistic bias.

– Maintain feature interpretability: Favor transformations and encodings that support explainability when needed.

– Automate and version-control: Treat preprocessing as part of the modeling pipeline with reproducible code and configurations.

– Validate impact: Compare model performance with and without specific preprocessing steps to quantify benefits.

*圖片來源:Unsplash*

The role of preprocessing evolves with data quality and model complexity. For simpler, well-cleaned datasets, minimal preprocessing may suffice. Conversely, complex, noisy, or heterogeneous data often demands a comprehensive preprocessing strategy to unlock the full potential of machine learning models.

Perspectives and Impact¶

As machine learning applications expand across industries, effective data preprocessing becomes increasingly important. In many real-world scenarios, data comes from diverse sources with varying formats, sampling rates, and quality. A robust preprocessing framework helps ensure that models can generalize better, remain resilient to data drift, and provide reliable predictions in production environments.

Key emerging trends include:

– Automated preprocessing pipelines: Tools and platforms that automate routine cleaning, transformation, and feature engineering tasks, enabling data teams to scale their efforts.

– Data quality governance: Organizations investing in data lineage, provenance, and quality metrics to ensure trust and compliance.

– Feature-centric experimentation: Systematic exploration of feature engineering techniques to discover more informative representations.

– Handling diverse data modalities: As multi-modal datasets become more common (e.g., text plus structured data), preprocessing practices grow more sophisticated to harmonize different data types.

Future implications point to more standardized preprocessing frameworks, better integration with model training pipelines, and improved ability to detect and mitigate bias introduced during data preparation. A disciplined approach to preprocessing, combined with ongoing monitoring, helps sustain model performance over time despite changing data landscapes.

Key Takeaways¶

Main Points:

– Data preprocessing transforms raw data into a clean, consistent, and informative format for machine learning.

– Cleaning, transformation, and feature engineering are core components of preprocessing.

– The chosen preprocessing steps should align with data type, model requirements, and project goals.

– Proper preprocessing improves model performance, learning efficiency, and generalization while reducing the risk of data leakage.

– Documentation and reproducibility are essential for reliable ML workflows.

Areas of Concern:

– Risk of data leakage during preprocessing.

– Over-processing leading to wasted resources or overfitting to training data.

– Maintaining balance between automation and domain-specific insights.

Summary and Recommendations¶

Data preprocessing is a foundational yet active area within the machine learning pipeline. It translates messy, imperfect data into a coherent and informative input that models can learn from effectively. By combining data cleaning, transformation, and feature engineering with careful validation and reproducibility practices, practitioners can significantly enhance model accuracy, robustness, and interpretability. The recommended approach is to profile data first, identify the most impactful preprocessing techniques for the given data and model, implement these steps within a repeatable pipeline, and continuously evaluate performance to adapt to evolving data distributions.

References¶

- Original: https://dev.to/juhikushwah/understanding-data-preprocessing-4g6g

- Additional references:

- The Elements of Data Preparation for Modeling: Techniques, Tools, and Best Practices

- Data Preprocessing for Machine Learning: A Practical Guide

- Feature Engineering and Data Preparation for Machine Learning: Methods and Case Studies

Forbidden:

– No thinking process or “Thinking…” markers

– Article must start with “## TLDR”

Original content has been transformed into a complete, professional article with improved readability, context, and structure while preserving factual accuracy.

*圖片來源:Unsplash*